
Google's video recognition AI is trivially trollable

One false frame in 50 and TensorFlow sees whatever you want it to

Early in March, Google let loose a beta previewing an AI to classify videos – and it only took a month for University of Washington boffins to defeat it.

The academics' approach is trivial: all the researchers (led by PhD student Hossein Hosseini) did was insert a still photo “periodically and at a very low rate into videos.”

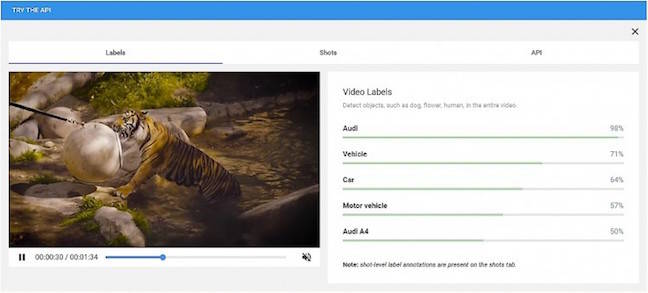

So while a video might show a tiger, once the researchers slipped a photo of a car into it, the system decided “the video was about an Audi”, according to the university's announcement.

Google's announcement of the beta explained that the deep-learning classifier is “built using frameworks like TensorFlow and applied on large-scale media platforms like YouTube”.

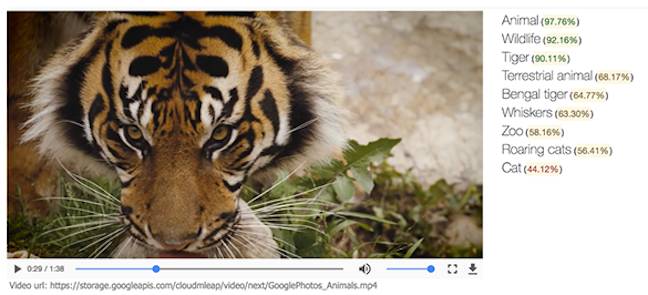

Google correctly identifying its demonstration video

In their research paper (on arXiv), the boffins explain that the manipulation could pass unnoticed by a casual viewer. They took Google's demonstration video and replaced one frame every two seconds (that is, one frame in 50, at a frame rate of 25 fps) with an image of the Audi.
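The arithmetic is simple: at 25 fps, two seconds is 25 × 2 = 50 frames, so swapping one frame per two-second window doctors 2 per cent of the footage. The sketch below is a minimal illustration of that periodic substitution, not the researchers' code. It assumes a video can be treated as a plain Python list of frames (real footage would first need decoding and re-encoding with a video library), and the helper name `insert_frame_periodically` and the choice to replace the *last* frame of each window are hypothetical.

```python
def insert_frame_periodically(frames, inserted, fps=25, interval_s=2.0):
    """Return a copy of `frames` with one frame per `interval_s` seconds replaced.

    Hypothetical helper for illustration: frames are abstract list items, and the
    last frame of each window is the one swapped out.
    """
    # Frames per window, e.g. 25 fps * 2 s = 50, i.e. one doctored frame in 50.
    period = round(fps * interval_s)
    if period < 1:
        raise ValueError("interval is shorter than one frame at this frame rate")

    doctored = list(frames)  # leave the original sequence untouched
    # Replace frame indices period-1, 2*period-1, ... (the end of each window).
    for i in range(period - 1, len(doctored), period):
        doctored[i] = inserted
    return doctored


if __name__ == "__main__":
    # Ten seconds of stand-in "tiger" frames at 25 fps.
    frames = [f"tiger_{i}" for i in range(250)]
    doctored = insert_frame_periodically(frames, "audi")
    print([i for i, f in enumerate(doctored) if f == "audi"])
    print(doctored.count("audi") / len(frames))
```

Run on ten seconds of footage, this swaps frames 49, 99, 149, 199 and 249, which is 5 of 250 frames, or the same one-in-50 rate described above.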

What happens when you troll the AI

The work wasn't only for the laughs, the researchers write.

AI demonstrations nearly always take place in a safe environment, they note: “Machine learning systems are typically designed and developed with the implicit assumption that they will be deployed in benign settings. However, many works have pointed out their vulnerability in adversarial environments”.

As another example, linguistic AI built to spot trolls is easily defeated by typos, deliberate misspellings of offensive words, or bad punctuation.

As the university's announcement points out, law enforcement agencies have a lot of faith that AI will help them tag suspects in surveillance videos.

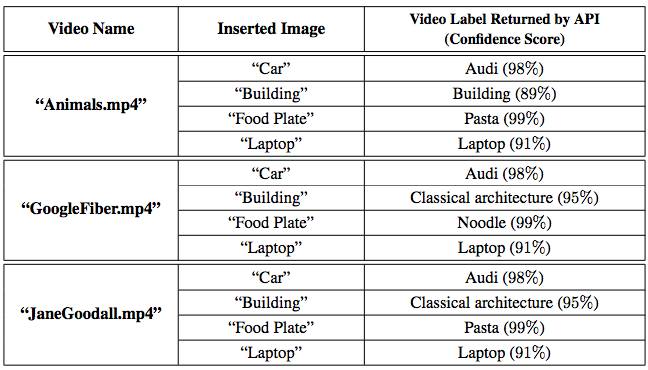

It's also worth noting that Google's AI showed remarkable confidence in its incorrect identifications, as the table below shows, with the Audi inserted into the Chocolate Factory's test videos of animals, Google Fiber, and legendary chimpanzee researcher Jane Goodall.

All the confidence of the mediocre: Google's AI complimenting itself on its performance

Best of all: the researchers didn't have to learn how the video classifier's AI worked to deceive it.

“Note that we could deceive the Google’s Cloud Video Intelligence API, without having any knowledge about the learning algorithms, video annotation algorithms or the cloud computing architecture used by the API”, they write. “We developed an approach for deceiving the API, by only querying the system with different inputs”. ®