
Google's cloudy image recognition is easily blinded, say boffins

Hooray for humans! We can pick out images too obscure for Google's AI

Google's Cloud Vision API is easily blinded by the addition of a little noise to the images it analyses, say a trio of researchers from the Network Security Lab at the University of Washington, Seattle.

Authors Hossein Hosseini, Baicen Xiao and Radha Poovendran have hit arXiv with a pre-press paper titled Google’s Cloud Vision API Is Not Robust To Noise (PDF) that says “In essence, we found that by adding noise, we can always force the API to output wrong labels or to fail to detect any face or text within the image.”

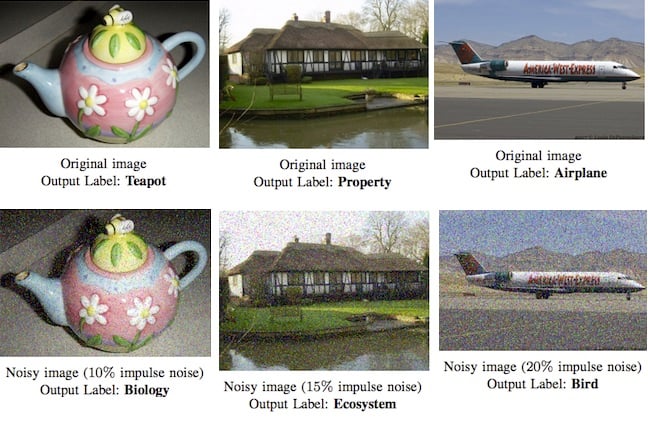

The authors explain that if one can add different types of noise to an image, the Cloud Vision API will always incorrectly analyse the pictures presented to it. The image accompanying this story shows the false results the API returned. It doesn't need to be a lot of noise: the authors found an average of 14.25 per cent "impulse noise" got the job done.
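For readers wondering what that looks like in practice, here is a minimal sketch of impulse noise in its common "salt-and-pepper" form, where a chosen fraction of pixels is flipped to pure black or pure white. It is an illustration only: the function name `add_impulse_noise`, the plain list-of-lists greyscale image and the even black/white split are our assumptions, not the researchers' code, and the paper's exact procedure may differ.

```python
import random

def add_impulse_noise(pixels, density, seed=None):
    """Return a copy of a greyscale image with salt-and-pepper noise.

    pixels  -- list of rows, each a list of ints in 0..255 (assumed layout)
    density -- fraction of pixels to corrupt, e.g. 0.1425 for 14.25 per cent
    """
    if not 0.0 <= density <= 1.0:
        raise ValueError("density must be between 0 and 1")
    rng = random.Random(seed)
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    noisy = [list(row) for row in pixels]  # leave the original untouched
    # Pick round(density * N) distinct pixel positions to corrupt.
    count = round(density * height * width)
    for index in rng.sample(range(height * width), count):
        row, col = divmod(index, width)
        # Each corrupted pixel becomes pure black (0) or pure white (255).
        noisy[row][col] = rng.choice((0, 255))
    return noisy

image = [[128] * 40 for _ in range(40)]  # flat mid-grey 40x40 test image
noisy = add_impulse_noise(image, 0.1425, seed=1)
changed = sum(p != 128 for row in noisy for p in row)
print(changed, "of", 40 * 40, "pixels altered")
```

The sketch only produces the noisy image. Submitting it to an image-recognition service and comparing the labels returned is left out, since the article does not describe how the researchers scripted that step.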

Google's Cloud Vision API getting stuff wrong once noise is added

At this point readers may ask why this matters: the authors suggest that deliberately adding noise to images could be an attack vector because “an adversary can easily bypass an image filtering system, by adding noise to an image with inappropriate content.”

Which sounds interesting because bad actors could easily learn that images are subject to machine analysis. For example, The Register recently learned of a drone designed to photograph supermarket shelves so that image analysis can automatically figure out what stock needs to be re-ordered. An attack on such a trove of images that results in empty shelves could see customers decide to shop elsewhere.

And let's not even start to think what would happen if photos of wanted criminals were corrupted so that known villains could walk in front of CCTV cameras with impunity.

The researchers also strike a small blow for humanity, because they found images that fool Google remain easily recognised by real, live, flesh-and-blood people.

In related news, Google made its Cloud Speech API and Automatic Speech Recognition service generally available. Here's hoping it kant bee fuelled buy ad-ding some noize to speetch. ®