A future of AI-generated fake news photos, hands off machine-learning boffins – and more

Plus: Folks freak out searching for 'brassiere' on their iPhones

Good morning, or afternoon, wherever you are. Here's a roundup of recent AI developments on top of everything else we've reported over the past week or so.

Real or fake? Researchers at Nvidia have described a new way to train generative adversarial networks (GANs) more stably, producing photos that look convincingly realistic at first glance. In other words, this is a neural network that can produce, at a decent resolution, fairly plausible photos of things – from couches to buildings – on demand from scratch. The computer can invent or fabricate scenes for you or anyone else, from a description: pretty much on-demand fake news.

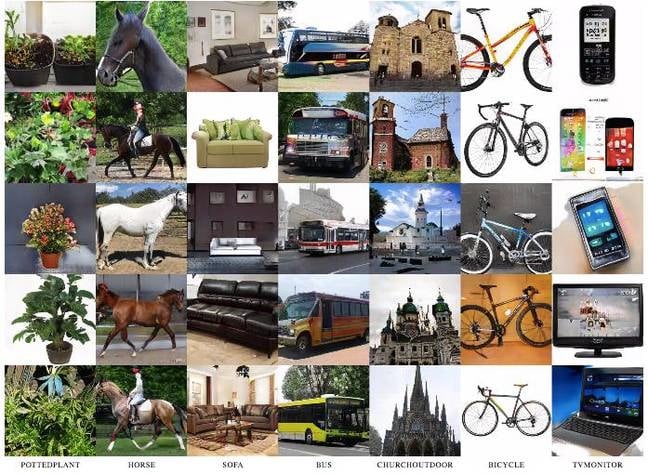

Some of the different types of images generated by Nvidia's software. Image credit: Karras et al.

However, don't panic just yet. If you zoom in and take a closer look, you’ll see it’s not all perfect. The glossy brown coats in the horse images are pretty impressive, but one horse clearly has two heads. The screens in the tech-related images are all the right size and shape, but the displays look like watercolor blotches, and in the laptop picture, the keyboard appears to be melting. Bicycle wheel spokes look like a toddler scrawled them. But the system does seem to cope very well with producing images of sofas, bikes, and buses for some reason. And this is early research, after all.

GANs are notoriously difficult to train and to steer in a direction that makes them useful, so it’ll be interesting to find out how Nvidia's boffins improved the process, which we'll dive into in a more in-depth article this week.
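For readers wondering why GAN training is so touchy, here is a minimal sketch of the two competing losses in the standard GAN setup. It is not Nvidia's method, which this roundup doesn't detail; the function names and the sample scores are made up for illustration. A discriminator scores images between 0 (fake) and 1 (real), and the generator is rewarded for fooling it, so each side's progress shifts the other's target.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Cross-entropy loss: low when real images score near 1 and fakes near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator scores fakes near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator scores for a batch of two real and two generated images.
confident = discriminator_loss([0.9, 0.8], [0.1, 0.2])
guessing = discriminator_loss([0.5, 0.5], [0.5, 0.5])
print(confident < guessing)  # a discriminator that tells them apart has the lower loss
```

When the discriminator gets too good, the generator's fakes all score near 0 and it has little signal to learn from, which is one commonly cited reason the balance is hard to keep.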

Cloud power Amazon Web Services announced that it was the first to upgrade its cloud platform to include Nvidia’s latest Tesla V100 GPUs.

The Tesla V100s will be available via P3 instances, and up to eight GPUs can be spun up at any one time per instance, we're told. It’s aimed at handling the most intensive tasks in machine learning, modeling fluid dynamics and molecules, finance, and genomics. At the moment, they’ll only be available in four regions: US East (Northern Virginia), US West (Oregon), EU (Ireland), and Asia Pacific (Tokyo).

It’s difficult to compare the speedup of the P3 instances over the P2 class, since Amazon doesn’t like to answer tricky questions about performance. But more information and specs for the Tesla V100s can be found here.

Coaching your agents Intel launched an open-source framework called Coach, which gives researchers a set of APIs for training and testing their reinforcement learning algorithms. The handy thing is that it’s been optimized to work in parallel across multiple CPU cores, which is useful for those of us tinkering with AI projects on desktop computers rather than clusters of multi-socket, multi-GPU servers.

It’s mostly written using TensorFlow, though some algorithms can be run on Neon – the open-source framework developed by Intel’s AI arm Nervana. Coach can be integrated with other reinforcement learning environments such as OpenAI Gym, Roboschool and ViZDoom, a platform specialized for the classic first-person shooter Doom.
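To give a flavor of what plugging an agent into a Gym-style environment involves, here is a minimal sketch of the agent-environment loop. It does not use Coach's or Gym's actual code: `CountdownEnv`, `run_episode` and the policy are hypothetical names, and the toy environment simply mimics the `reset()` and four-value `step()` convention used by early Gym releases.

```python
class CountdownEnv:
    """Hypothetical toy environment with a Gym-style reset()/step() interface."""

    def __init__(self, episode_length=5):
        self.episode_length = episode_length
        self.steps_left = episode_length

    def reset(self):
        # Start a new episode and return the first observation.
        self.steps_left = self.episode_length
        return self.steps_left

    def step(self, action):
        # Reward 1.0 for choosing action 1, nothing otherwise.
        reward = 1.0 if action == 1 else 0.0
        self.steps_left -= 1
        done = self.steps_left == 0
        return self.steps_left, reward, done, {}


def run_episode(env, policy):
    """Run one episode and return the total reward the policy collected."""
    observation = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(observation)
        observation, reward, done, _info = env.step(action)
        total_reward += reward
    return total_reward


# A trivial policy that always picks action 1.
print(run_episode(CountdownEnv(5), lambda obs: 1))
```

Because every environment exposes the same two calls, a framework can swap a Doom level for a robot simulator without touching the learning algorithm.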

What’s HPE doing with AI? Everyone is trying to reshuffle their companies around AI, and HPE is desperate to elbow its way in too with a new set of “platforms and services”.

There is something called the "HPE rapid installation for AI," which includes preconfigured libraries, frameworks and software to optimize deep learning across hardware clusters. It’s built on its Apollo 6500 server systems, and supports Nvidia Tesla V100 GPUs.

It also has a thing called the "HPE Deep Learning Cookbook," a set of tools to help organizations decide which hardware and software combinations are best for different applications in deep learning.

The “HPE AI Innovation Center” is focused on fostering research collaborations between universities, enterprises and HPE. And lastly, the “Enhanced HPE Centers of Excellence” is more of a consulting service, offering advice to enterprise IT departments on how and where to apply deep learning for the biggest financial impact.

Be careful with AI ethics The Information Technology Industry Council, a US trade association lobbying on behalf of the biggest tech companies, has published guidelines warning governments to think carefully before deploying new laws against AI.

Machine-learning algorithms have come under fire for being like black boxes: it's not always entirely clear why neural networks form their particular pathways from their training data. Sure, judging from the inference operations, a network can appear to be sound and make reasonable decisions and judgements. However, it’s difficult to untangle the software's decision-making processes, because non-trivial networks are often too complex for people to analyze and understand. There's also the fear that biases in training data will creep into the networks, meaning AI-powered software used throughout society may be harboring hidden prejudices that will have real effects on people's lives.

But the report urges governments to “use caution before adopting new laws, regulations, or taxes that may inadvertently or unnecessarily impede the responsible development and use of AI.” In other words: don't stop the boffins. ®

PS: It appears people have just realized that iOS 11 comes with machine-learning code built in. Its CoreML-powered image recognition system can label people's photos automatically on their iPhones. Searching for "brassiere" on your handheld can bring up surprising snaps.