This article is more than 1 year old

All the AIs NVMe, says IBM: Claims POWER9s + InfiniBand brainier than COTS

Says X86 doesn't mark the spot... but won't flash its latency numbers

+Comment IBM has claimed its POWER9 gear is better placed to help AIs doing "cognitive" work than your common-or-garden X86 commercial-off-the-shelf (COTS) kit after tests in New York earlier this month.

Big Blue recently ran a "demo" which it said proved it can leave COTS servers and storage in the dust using (what else?) its own proprietary servers linked to its FlashSystem arrays that use low latency/high bandwidth tech such as PCIe Gen 4, EDR and QDR InfiniBand and NVMe over Fabrics.

Of course, there are several NVMe over Fabrics access storage arrays from startups like E8, Excelero, Pavilion and Formation Data Systems, plus a stated intention from Pure Storage, which support accesses from X86 servers.

IBM's display, meanwhile, used NVMe-over-Fabrics InfiniBand (NVMe-oF). IBM has not formally announced support for the NVMe-oF protocol on either the AC922 server or the FlashSystem 900 array but this technology preview would seem to indicate it might.

The demo took place at the AI Summit in New York, December 5-6. In it the AC922 uses a PCIe gen 4 bus, which is twice as fast as the current PCIe gen 3 buses used in most servers today.

IBM showed attendees an NVMe-oF link between its POWER9-based AC922 server and five FlashSystem 900 arrays – saying it indicated slashed data access latency and increased bandwidth.

Woody Hutsell, Big Blue manager for the Flashsystem portfolio and enablement strategy, blogged that the AC922 was "capable of increasing I/O throughput up to 5.6x compared to the PCIe gen 3 buses used within x86 servers".

IBM said this would be ideal for AI that involves ingesting "massive amounts of data while simultaneously completing real time inferencing (object detection)."

Hutsell said the FlashSystem 900 already supports SRP (SCSI over RDMA protocol) using an InfiniBand link and replacing the SCSI code with NVMe code will lower latency further.

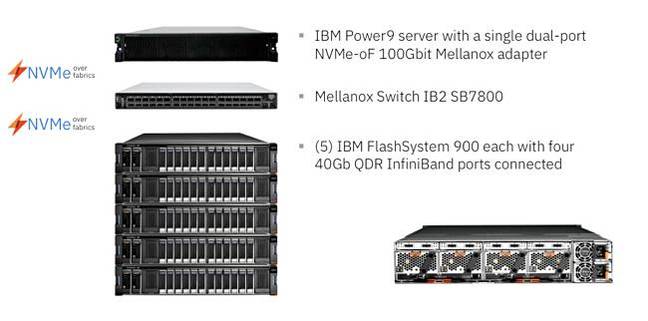

IBM's technology preview set up

In the demo, the AC922 was connected by a dual-ported NVMe-oF EDR 100Gbit Mellanox adapter to a Mellanox Switch-IB 2 7800 – which linked to five FlashSystem 900 enclosures, each fitted with 4 x 40Gbit/s QDR InfiniBand ports.

This setup delivered 41GB/sec of bandwidth, made up from 23GB/sec reads and 18GB/sec writes. The access latency was not disclosed.

IBM said the POWER9 server plus FlashSystem 900/NVMeoF InfiniBand combo delivers the low latency and bandwidth needed by enterprise AI, implying it is better than that capable of being delivered by X86 servers linked by NVMe over Fabrics to other all flash arrays. However, with no latency numbers, it seems difficult to assess this claim.

+Comment: Can a COTS setup match this?

An Excelero NVMe over Fabrics virtual SAN system for NASA Ames showed average latency for 4K IOPs was 199μsec, with the lowest value being 8μsec. The system's bandwidth was more than 140GB/sec at 1MB block size.

This system had 128 compute nodes and so is quite unlike the single server IBM demo. It shows ball park presence though, and maybe a Xeon SP server vendor might hook up with an all-flash array system using PCIe Gen 4, NVMe over Fabrics, and a 100Gbit/s Ethernet link to see what the result is. ®