
Skynet it ain't: Deep learning will not evolve into true AI, says boffin

Neural networks sort stuff – they can't reason or infer

Deep learning and neural networks may have benefited from huge quantities of data and computing power, but they won't take us all the way to artificial general intelligence, according to a recent academic assessment.

Gary Marcus, ex-director of Uber's AI labs and a psychology professor at New York University, argues that deep learning systems face numerous challenges that broadly fall into a series of categories.

The first one is data. It's arguably the most important ingredient in any deep learning system, and current models are too hungry for it. Machines require huge troves of labelled data to learn how to perform a certain task well.

It may be disheartening to know that programs like DeepMind's AlphaZero can thrash all meatbags at chess and Go, but that only happened after it played a total of 68 million games against itself. That's far more than any human professional will play in a lifetime.

Essentially, deep learning teaches computers how to map inputs to the correct outputs. The relationships between the input and output data are represented and learnt by adjusting the connections between the nodes of a neural network.

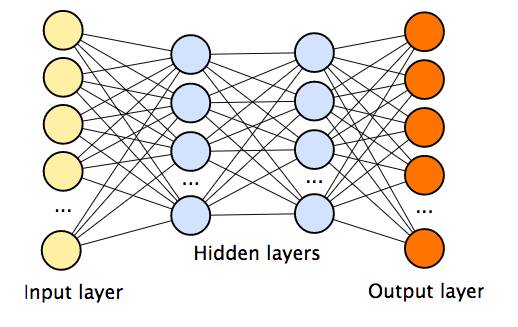

An image of a simple neural network with two hidden layers
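To make the idea of "adjusting the connections" concrete, here is a minimal sketch in plain Python of a network with two hidden layers, like the one pictured. Everything in it is an assumption for illustration: the layer sizes, the tanh activation, the toy XOR-style data, the learning rate, and the helper names (`make_network`, `forward`, `loss`, `train_step`). It also estimates gradients by finite differences to keep the code short; real systems use backpropagation instead.

```python
import math
import random

def make_network(sizes, seed=0):
    """Build one weight matrix per layer, filled with small random values."""
    rng = random.Random(seed)
    return [[[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(sizes, sizes[1:])]

def forward(weights, x):
    """Map an input to an output: matrix multiply, then tanh, layer by layer."""
    activation = x
    for layer in weights:
        activation = [math.tanh(sum(w * a for w, a in zip(row, activation)))
                      for row in layer]
    return activation

def loss(weights, data):
    """Mean squared error between the network's outputs and the labels."""
    total = 0.0
    for x, target in data:
        out = forward(weights, x)
        total += sum((o - t) ** 2 for o, t in zip(out, target))
    return total / len(data)

def train_step(weights, data, lr=0.05, eps=1e-5):
    """Nudge every connection a little in the direction that lowers the loss."""
    grads = []
    for layer in weights:
        layer_grads = []
        for row in layer:
            row_grads = []
            for j in range(len(row)):
                original = row[j]
                row[j] = original + eps
                up = loss(weights, data)
                row[j] = original - eps
                down = loss(weights, data)
                row[j] = original  # restore before measuring the next weight
                row_grads.append((up - down) / (2 * eps))
            layer_grads.append(row_grads)
        grads.append(layer_grads)
    # Apply all updates only after every gradient has been estimated.
    for layer, layer_grads in zip(weights, grads):
        for row, row_grads in zip(layer, layer_grads):
            for j, g in enumerate(row_grads):
                row[j] -= lr * g

# Toy labelled data (hypothetical): XOR, with a constant 1.0 input as a bias.
data = [([0.0, 0.0, 1.0], [0.0]), ([0.0, 1.0, 1.0], [1.0]),
        ([1.0, 0.0, 1.0], [1.0]), ([1.0, 1.0, 1.0], [0.0])]

weights = make_network([3, 4, 4, 1])  # 3 inputs, two hidden layers of 4, 1 output
loss_before = loss(weights, data)
for _ in range(200):
    train_step(weights, data)
loss_after = loss(weights, data)
print("loss before:", loss_before, "loss after:", loss_after)
```

Nothing in the trained weights encodes what XOR "means". The network has only been pushed towards outputs that match the labels it was shown.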

A network's knowledge is limited to what it gleans from the data given during training, which means it can only perform in limited environments. AlphaZero may be a single algorithm that combines Monte Carlo Tree Search and self-play, a technique in reinforcement learning, but it required two different systems to play chess and Go.

The skills learnt from one game can't be transferred to another. That's because, unlike humans, machines don't actually grasp important concepts, Marcus said. AlphaZero may choose to move a pawn, or knight, or queen across the board, but it doesn't learn the logical and strategic thinking useful for Go. In fact, it doesn't even understand what any of those pieces really represent; it just sees the game as a series of rules and patterns.

This brittleness means that current AI systems struggle with "open-ended inference". "If you can't represent nuance like the difference between 'John promised Mary to leave' and 'John promised to leave Mary', you can't draw inferences about who is leaving whom, or what is likely to happen next," Marcus wrote.

Facebook developed the popular bAbI datasets to test machine reasoning on text, but current models can only answer questions if the answer is explicitly in the text.

"Humans, as they read texts, frequently derive wide-ranging inferences that are both novel and only implicitly licensed, as when they, for example, infer the intentions of a character based only on indirect dialog," the paper said.

Another good example is to look at how easily AI can be fooled. There are numerous cases where changing a pixel here or there can trick a computer into believing a car is a dog, or a cat is a bowl of guacamole.
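A rough sketch of why small pixel changes can flip a prediction is below. It is an illustration under stated assumptions, not how any real image classifier works. The "classifier" is a hypothetical linear scorer over 100 made-up pixel values, the labels "car" and "dog" are arbitrary, and the function names `classify` and `perturb` are invented here. Unlike the attacks that change only a pixel or two, this version nudges every pixel by a tiny amount in the direction that hurts the score most.

```python
def classify(weights, pixels):
    """Hypothetical linear classifier: positive score means 'car', else 'dog'."""
    score = sum(w * p for w, p in zip(weights, pixels))
    return "car" if score > 0 else "dog"

def perturb(weights, pixels, epsilon):
    """Shift each pixel by epsilon against the sign of its weight."""
    return [p - epsilon * (1 if w > 0 else -1) for w, p in zip(weights, pixels)]

# Made-up model and image: 100 pixels with alternating positive/negative weights.
weights = [1.0, -1.0] * 50
pixels = [0.52, 0.50] * 50

adversarial = perturb(weights, pixels, epsilon=0.02)
largest_change = max(abs(a - p) for a, p in zip(adversarial, pixels))

print(classify(weights, pixels))       # car
print(classify(weights, adversarial))  # dog
print("largest per-pixel change:", largest_change)
```

Each pixel moves by only 0.02 on a 0-to-1 scale, yet the changes all push the score the same way, so together they are enough to flip the label.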

The limits of deep learning have led to success being measured in terms of solving "self-contained" problems, such as pushing benchmark scores on known datasets in Kaggle competitions. Marcus goes further and believes that trickier challenges that rely on common sense reasoning are actually beyond what deep learning can do.

"Roughly speaking, deep learning learns complex correlations between input and output features, but with no inherent representation of causality," the paper said. Understanding causality is vital if machines are to learn to reason.

To make matters worse, researchers don't understand how these neural networks really work. They perform a series of matrix multiplications, but untangling them to understand how these "black boxes" arrive at answers remains an open question. Researchers are racking their brains to come up with ways to make them more transparent so that they can be used more widely in finance and healthcare.

So if you're feeling pangs of existential dread, don't worry. It's unlikely our robot overlords will take over anytime soon. Artificial general intelligence may indeed arrive one day, but it'll take more than deep learning to get there. ®