Audio tweaked just 0.1% to fool speech recognition engines

Digital dog whistles: AI hears signals humans can't comprehend

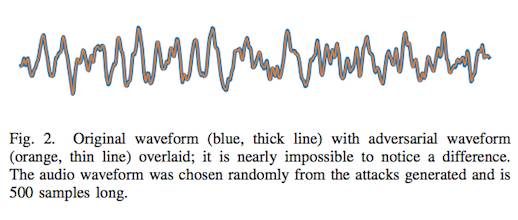

The development of AI adversaries continues apace: a paper by Nicholas Carlini and David Wagner of the University of California, Berkeley has shown off a technique to trick speech recognition by changing the source waveform by 0.1 per cent.

The pair wrote in their arXiv paper that the attack achieved a first: not merely an attack that made a speech recognition (SR) engine fail, but one that returned a result chosen by the attacker.

In other words, because the attack waveform is 99.9 per cent identical to the original, a human wouldn't notice anything wrong with a recording of “it was the best of times, it was the worst of times”, but an AI could be tricked into transcribing it as something else entirely: the authors say it could produce “it is a truth universally acknowledged that a single” from a slightly altered sample.
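To give a feel for how small a 0.1 per cent change is, here is a minimal Python sketch. It assumes one plausible reading of the figure, namely the RMS amplitude of the added perturbation relative to the RMS amplitude of the original signal; the article does not spell out the metric. The 440 Hz tone, the random noise and the function names are all stand-ins invented for illustration, not anything from the paper.

```python
import math
import random

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def relative_change(original, modified):
    """RMS of the difference divided by RMS of the original (assumed metric)."""
    diff = [m - o for o, m in zip(original, modified)]
    return rms(diff) / rms(original)

# Hypothetical stand-in for a recording: one second of a 440 Hz tone at 16 kHz.
SAMPLE_RATE = 16_000
original = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]

# Random noise, scaled so its RMS is 0.1 per cent of the signal's RMS.
# A real attack would compute the perturbation rather than draw it at random.
rng = random.Random(0)
noise = [rng.uniform(-1, 1) for _ in original]
scale = 0.001 * rms(original) / rms(noise)
modified = [o + scale * n for o, n in zip(original, noise)]

ratio = relative_change(original, modified)
print(f"relative change: {ratio:.4%}")
print(f"in decibels: {20 * math.log10(ratio):.1f} dB")
```

On this reading, a 0.1 per cent change sits about 60 dB below the original signal, since 20·log10(0.001) = −60.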

One of these things is not quite like the other.

Image from Carlini and Wagner's paper

It works every single time: the pair claimed a 100 per cent success rate for their attack and, frighteningly, an attacker can even hide a target waveform in what (to the observer) appears to be silence.

Images are easy

Such attacks against image processors became almost routine in 2017. There was a single-pixel image attack that made a deep neural network recognise a dog as a car; MIT students developed an algorithm that made Google's AI think a 3D-printed turtle was a gun; and on New Year's Eve, Google researchers took adversarial imaging into the real world, creating stickers that confused vision systems trying to recognise objects (deciding a toaster was a banana).

Speech recognition systems have proven harder to fool. As Carlini and Wagner wrote in the paper, “audio adversarial examples have different properties from those on images”.

They explained that untargeted attacks are simple, since “simply causing word-misspellings would be regarded as a successful attack”.

An attacker could already try to embed a malicious phrase in another waveform, but earlier approaches needed to generate a new waveform rather than add a perturbation to an existing input.

Their targeted attack means: “By starting with an arbitrary waveform instead of speech (such as music), we can embed speech into audio that should not be recognised as speech; and by choosing silence as the target, we can hide audio from a speech-to-text system”.

The attack wouldn't yet work against just any speech recognition system. The duo chose DeepSpeech because it's open source, so they were able to treat it as a white box in which “the adversary has complete knowledge of the model and its parameters”.
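Why white-box access matters can be sketched with a toy example. The Python below is not the authors' attack and has nothing to do with DeepSpeech: the three-label linear "recogniser", its weights, the input vector and the function names are all made up for illustration. It only shows the general idea that an attacker who knows a model's parameters can compute gradients with respect to the input and nudge that input until the model returns a chosen label.

```python
import math

# Hypothetical toy "recogniser": a fixed linear model scoring three labels.
# These weights are invented for illustration; they are not DeepSpeech.
LABELS = ["yes", "no", "stop"]
WEIGHTS = [
    [1.0, 0.0, -1.0, 0.5],    # row that scores "yes"
    [-0.5, 1.0, 0.5, -1.0],   # row that scores "no"
    [0.0, -1.0, 1.0, 1.0],    # row that scores "stop"
]

def scores(x):
    """One score per label: the dot product of each weight row with the input."""
    return [sum(w * v for w, v in zip(row, x)) for row in WEIGHTS]

def softmax(zs):
    top = max(zs)
    exps = [math.exp(z - top) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def predict(x):
    s = scores(x)
    return LABELS[s.index(max(s))]

def targeted_attack(x, target, step=0.1, max_steps=500):
    """Gradient descent on the input until the model outputs `target`.

    White box: the gradient is computed directly from WEIGHTS.
    """
    t = LABELS.index(target)
    adv = list(x)
    for _ in range(max_steps):
        if predict(adv) == target:
            break
        probs = softmax(scores(adv))
        # Gradient of the cross-entropy loss for the target label
        # with respect to each input sample.
        grad = [
            sum((probs[k] - (1.0 if k == t else 0.0)) * WEIGHTS[k][i]
                for k in range(len(LABELS)))
            for i in range(len(adv))
        ]
        adv = [a - step * g for a, g in zip(adv, grad)]
    return adv

original = [0.9, 0.1, -0.4, 0.2]
adversarial = targeted_attack(original, "stop")
print(predict(original), "->", predict(adversarial))
print("perturbation:", [round(a - o, 3) for o, a in zip(original, adversarial)])
```

With only four input values, the change needed here is nowhere near 0.1 per cent of the signal. The sketch shows the mechanism, not the scale of the result reported in the paper.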

Nor, at this stage, is it a “real time” attack, because the processing system Carlini and Wagner developed only works at around 50 characters per second.

Still, with this work in hand, The Register is pretty certain other researchers will already be on a sprint to try and make a live distorter – so you could one day punk someone's Alexa without them knowing what's happening. Think “Alexa, stream smut to the TV” when your friend only hears you say “What's the weather, Alexa?”. ®