
Who knew? Fabric access NVMe arrays can work with Spectrum Scale

Parallel access filesystem for disks gets new life

Case study IBM Spectrum Scale (GPFS) started out as a parallel access filesystem for disk-based arrays – so some may have expected it to fall over and die in the face of lightning fast access NVMe SSD and NVMe fabric access arrays.

But instead it seems to be getting a new lease of life as a data manager atop said fabric-access NVMe arrays.

An example of this is the way Pixit Media, a UK-based digital video processing software and system business, is adding NVMe over fabric (NVMeoF) access storage to its Spectrum Scale-using software and system suite.

Digital video processing systems deal with massive amounts of data, with video resolution going up to 4K, and both very large files and large sets of small files needing to be worked on by video editing engineers and creative people.

The storage systems have to provide fast access to all this data, both video and frame-based. They also have to store older files economically while still being able to find them, through rich metadata tagging, and fetch them from archives quickly.

Pixit Media

Products like Quantum's StorNext and Pixit Media's PixStor provide the depth and breadth of video file asset access and archive storage and management needed.

In this area we might expect NVMeoF arrays to replace existing fast-access storage products, especially ones with a disk-era design base, like Spectrum Scale, but that is not necessarily happening because of workflow integration and metadata handling needs.

Pixit Media exemplifies this with its PixStor product retaining the use of Spectrum Scale, while using an NVMeoF array underneath or alongside it.

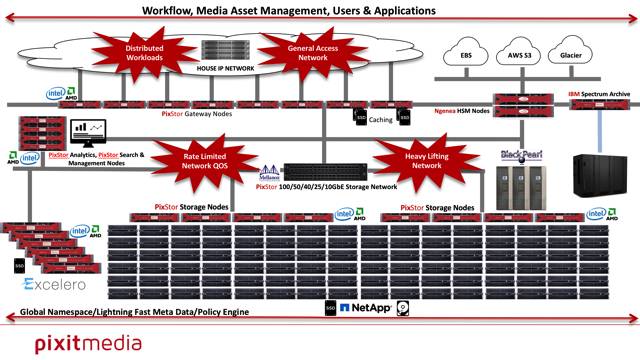

The system setup here is complex, with PixStor gateway nodes and storage nodes for client workstation file access, plus its Ngenea HSM nodes for accessing files in long-term storage such as Spectrum Archive or public clouds:

Pixit Media product use chart

The networking uses lossless Mellanox Ethernet – IP, not Fibre Channel.

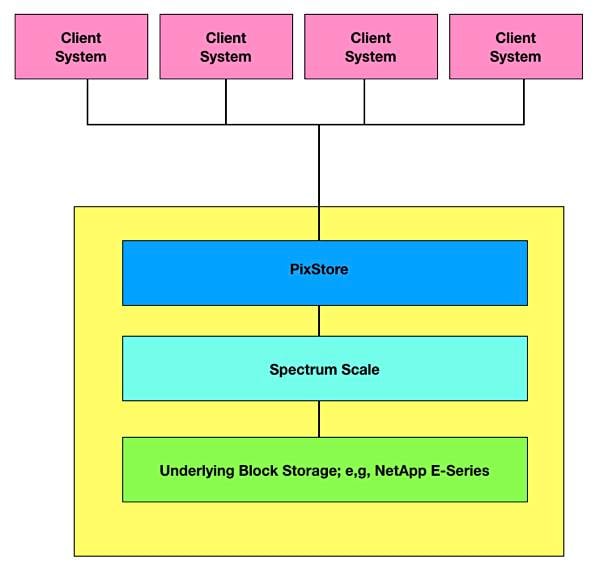

We can conceptually simplify it with this diagram, which ignores the archiving side and collapses the network links, PixStor gateway and storage nodes:

Client systems, running video processing software, work on files that are read from and written to the PixStor. This layer of Pixit Media software uses IBM’s Spectrum Scale parallel access file management software which, in turn, stores its data on underlying NetApp E-Series all-flash storage, in this example.

In essence Pixit Media/Spectrum Scale is the route for both the data control path and the data path. The control path deals with rich metadata, some intrinsic to the files and some added by users to help in file finding, selection, and management.

For files to travel from the block storage to the client workstations, they have to traverse the network, which is quickish at 10/25/40/50/100 GbitE, but they still pass through a network stack as well as the IO stacks in PixStor and Spectrum Scale.
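For a rough sense of scale, the sketch below converts those nominal link speeds into best-case GB/s and transfer times. It is back-of-envelope arithmetic only: the 100 GB file size is a made-up example, the function names are illustrative, and real throughput will be lower once network and IO stack overheads are counted.

```python
# Nominal Ethernet link speeds named in the article, in gigabits per second.
LINK_SPEEDS_GBIT = [10, 25, 40, 50, 100]


def raw_gbytes_per_sec(gbit_per_sec: float) -> float:
    """Convert a nominal line rate in Gbit/s to GB/s (8 bits per byte)."""
    return gbit_per_sec / 8.0


def transfer_seconds(file_gbytes: float, gbit_per_sec: float) -> float:
    """Best-case time to move a file at the nominal line rate, ignoring all overhead."""
    return file_gbytes / raw_gbytes_per_sec(gbit_per_sec)


if __name__ == "__main__":
    file_gb = 100.0  # hypothetical file size, for illustration only
    for speed in LINK_SPEEDS_GBIT:
        print(
            f"{speed:>3} GbitE: {raw_gbytes_per_sec(speed):6.2f} GB/s, "
            f"{transfer_seconds(file_gb, speed):6.1f} s for {file_gb:.0f} GB"
        )
```

These are ceilings set by the wire alone; the stacks the data passes through on the way only add to them.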

NVMe over fabrics and DSSD

Pixit Media said its customers wanted to access and work on larger, higher-resolution video files with predictable performance. That meant the access load on the network and storage systems kept growing, so either things slowed down or vastly more money had to be spent to get the performance up.

It had heard about NVMeoF and had a DSSD D5 array – with 10 million IOPS, 100 microsecond latency and 100GB/sec bandwidth from its NVMe drives and fabric – which could cut data access latency by bypassing both the network and storage IO stacks, essentially doing a form of remote direct memory access.

Say yes to the NVMESH?

Then Dell took over EMC and the D5 was cancelled. However, Pixit came across Excelero’s NVMESH.

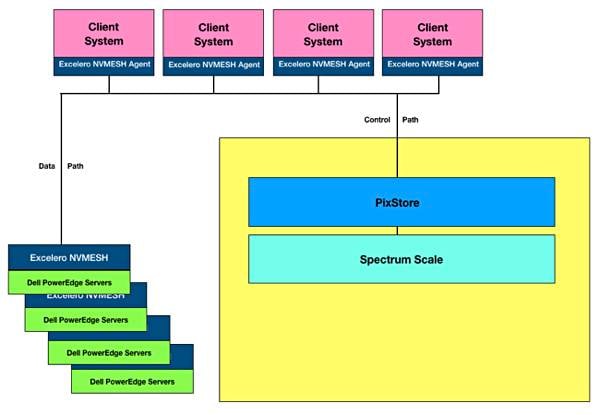

Testing revealed it sped up data access by being used alongside Spectrum Scale and effectively replacing the E-Series array. Here's another greatly simplified diagram to show this:

With NVMESH agent software, an intelligent client block driver on the accessing systems links to the Excelero array, which is built from Dell PowerEdge servers with direct-attached storage. It bypasses the servers' CPUs, going direct to the drives with Remote Direct Drive Access (RDDA) functionality.

Pixit Media MD and co-founder Ben Leaver described this as the data path, with the control path operating through PixStor, and Spectrum Scale.
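The split Leaver describes can be pictured with a toy model: one component answers "which files match, and where do they live?", and another moves raw bytes without knowing anything about files. The Python sketch below is purely illustrative. The class names, the tag scheme, and the in-memory "array" are all hypothetical, and none of it reflects the actual PixStor, Spectrum Scale or NVMESH interfaces.

```python
from dataclasses import dataclass


@dataclass
class Extent:
    """Where one file's bytes sit in the shared block store (hypothetical)."""
    offset: int
    length: int


class ControlPath:
    """Stands in for the filesystem layer: file names and tags map to extents."""

    def __init__(self):
        self._extents = {}  # file name -> Extent
        self._tags = {}     # file name -> set of metadata tags

    def register(self, name, extent, tags):
        self._extents[name] = extent
        self._tags[name] = set(tags)

    def find(self, tag):
        """Return the names of all files carrying the given tag, sorted."""
        return sorted(n for n, t in self._tags.items() if tag in t)

    def locate(self, name):
        return self._extents[name]


class DataPath:
    """Stands in for the block layer: moves raw bytes, knows nothing of files."""

    def __init__(self, size):
        self._blocks = bytearray(size)

    def write(self, offset, data):
        self._blocks[offset:offset + len(data)] = data

    def read(self, offset, length):
        return bytes(self._blocks[offset:offset + length])


# A client asks the control path where a file is, then reads it directly.
store = DataPath(1024)
catalog = ControlPath()
payload = b"frame-0001"
store.write(0, payload)
catalog.register("shot01/frame-0001.dpx", Extent(0, len(payload)), {"shot01", "4k"})

for name in catalog.find("4k"):
    extent = catalog.locate(name)
    data = store.read(extent.offset, extent.length)
    print(name, len(data), "bytes")
```

The point of the design is that metadata lookups and bulk reads no longer share a route, so the slow, rich part of the system is not in the way of the fast, dumb part.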

He thinks NVMe fabric and drive technology represents an affordable and exciting technology advance on what has gone before.

The Pixit people say that, if a 16Gbit/s Fibre Channel SAN were used, it would need 32U of rackmounted all-flash equipment to deliver the same performance (100 x 4K uncompressed raw video streams) as one 2U NVMESH box over a 100GbitE link.

Barry Evans, Pixit CTO, said the performance was good enough to deliver 4K media playback to Apple Mac and Windows workstations over SMB. It had multi-channel functionality coming, he added.

Spectrum Scale and StorNext

Pixit is talking to many StorNext-using potential customers about this comparison.

We understand Pixit has sold an NVMESH system to a customer, who is not ready to go public, with two more in the pipeline, expected to be signed up by the end of June.

NVMe over Fabrics access to NVMe SSD arrays represents a way of getting local PCIe SSD access latency from a shared external array. Using a filesystem layer like PixStor/Spectrum Scale gives you the data management and control path functions customers need beyond raw performance.

The control path and the data path are split to accomplish this, and Spectrum Scale survives as a necessary component of a now much faster workflow.

In theory Quantum's StorNext could go the same way. It could switch to using an NVMe fabric/drive array as its data path-accessed repository for hot data, while continuing to use StorNext for the control path data functionality.

Quantum is certainly looking at flash for StorNext, witness this pitch at the 2017 Flash Memory Summit (PDF), and we'll watch out for it mentioning NVMe over Fabrics in the future. ®