Hooray for MLPerf, another AI benchmark competition backed by Google, Baidu, etc.

New project to compare the dizzying number of AI chips and models available

A new competition to set benchmarks for the numerous hardware and software platforms used in AI has been backed by leading technology companies and universities.

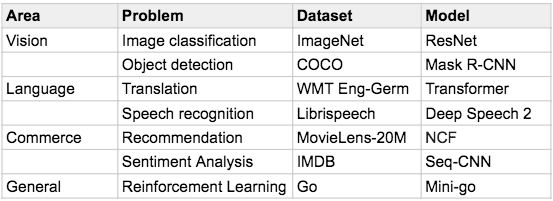

The new project, MLPerf, measures training and inference times for different machine learning tasks carried out across a variety of AI frameworks and chips. Some of these tasks include:

Google, Intel, Baidu, and AMD are some of the top names involved in the project. Others include SambaNova and Wave Computing, both AI hardware startups, as well as Stanford University, Harvard University, the University of California, Berkeley, the University of Minnesota, and the University of Toronto.

The AI community goes gaga for benchmark results. Plenty of research papers are spun off from academics competing with one another to build better, more optimised neural networks that are slightly more accurate or faster than the last model.

It’s difficult to keep track of all the companies and startups building their own chips, let alone the constantly updating software. It doesn’t help that much of it is coated in marketing fluff. Chip companies often promise revolutionary speed-ups without disclosing specs (cough, cough, Intel). So independent experiments in projects like MLPerf or DAWNBench are helpful.

“Benchmarks enhance credibility and demonstrate performance, leading to greater use or revenue. If you were making a multi-million-dollar decision about which hardware accelerator or software framework to use, wouldn't you prefer one with published results on a standard benchmark suite?” Peter Mattson, a Google engineer, told The Register.

MLPerf hopes to spur progress in machine learning for industry and research with fair, reliable benchmark measurements that can be replicated. The comparisons should also encourage competition amongst vendors and academics, and the experiments are meant to stay affordable so that anyone can participate.

Benchmarking is important “in the short term because hardware is a huge investment, both for companies making R&D decisions and companies making purchasing decisions,” Mattson added.

"And in the long term [because] benchmarks enable researchers and vendors to evolve performance in a common direction, unlocking the power of machine learning faster to benefit all of us."

The submission deadline for all the MLPerf tasks is July 31. ®