
AI servers will need much more memory. And you know who's going to be there? Yep, big daddy Micron

The one whose name doesn't rhyme with schmintel

Analysis: Micron has started to separate from Intel in NAND production, just days after whipping the veil off its 7.68TB QLC SSD, and CEO Sanjay Mehrotra and pals are keen to chat up analysts about their vision of the coming "New Micron".

Earlier this week, Mehrotra told an assembly of business-botherers that markets were shifting toward ever greater demand for memory and storage.

He said: "[With] … billions of devices on the edge, it’s not just your PC and smartphone anymore, it’s also the drones, it’s your smartphone, it’s your smart factory, the smart card, the smart cities, that are all using data to drive the data economy….”


The big game changer here, he told them, is the industry's collective attempts on the deep learning/"artificial intelligence" front – which is "all about massing large pools of data, processing it fast to decipher insights, to deliver value to businesses". He cited natural language and computer vision processing as notable application areas nesting under the loosely termed "AI" grouping. He also called out the usual suspect: autonomous driving.

Mehrotra told listening analysts that AI servers require six times the amount of DRAM and twice the amount of SSDs compared with standard servers.

Micron was talking up the years in clover it expects due to the rising demand in the data centre, mobile devices (thank you 5G), automotive and IoT areas.

NAND that's not all...

Mehrotra spoke of his efforts to end Micron's habit of lagging industry trends, admitting that in 2D NAND it had trailed rivals in adopting TLC memory. Not any more, at least for now: together with partner Intel, it was first out of the gate with QLC NAND.

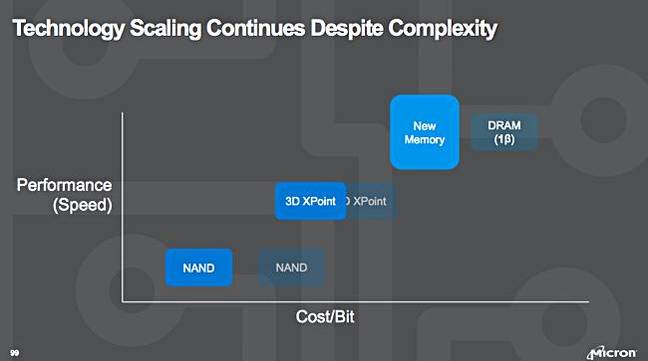

The semiconductor firm said it will push its tech to extend the existing NAND and DRAM roadmaps, advancing 3D XPoint memory and developing new emerging memories. It also promised to deliver high-performance memory (HBM & Graphics) and managed NAND, SSD, and 3D XPoint products.

Describing XPoint, Mehrotra's slide repeated the disputed original XPoint claims: 10x the density of DRAM, 1,000x the endurance of NAND, and 10,000x faster than NAND.

Next year Micron will deliver NVMe client SSDs, it said, and will move into persistent memory with NV-DIMM-N devices. It also promised 1Ynm DRAM output (first roadmapped here) in the second half of this year. It talked of future 1Znm process optimisation, 1αnm technology integration and 1βnm module integration, saying it had a clear and confident DRAM technology roadmap, with parallel progress on multiple nodes.


Micron's 96-layer NAND is its third 3D NAND generation. Micron claimed its fourth-generation (Gen 4) 3D NAND would be optimised for performance and scaling. It will use the company's Replacement Gate (RG) technology, keep the CMOS-under-the-array design used now, adopt a new charge trap cell technology, and offer leading die size and performance. The layer count was not revealed, though we think it might be around 128 layers.

Gen 4 3D NAND will offer more than a 30 per cent increase in write bandwidth and more than a 40 per cent reduction in energy per bit compared with the 96-layer Gen 3 NAND.

The CEO said Micron's 3D XPoint and emerging memory products would arrive in 2019 and beyond. That timing leaves Micron three or more years behind Intel with XPoint products.

A Micron slide shown to analysts claimed Micron's emerging memory product was as fast as DRAM, if not faster, and cheaper. The underlying technology was not revealed.

Micron emerging memory slide

Micron, starting to separate from the Intel NAND-making union, appears to be in the process of becoming a stronger semiconductor memory chip and product manufacturer. ®