
AI, AI, Pure: Nvidia cooks deep learning GPU server chips with NetApp

Pure Storage's AIRI reference architecture probably a bit jelly

NetApp and Nvidia have introduced a combined AI reference architecture system to rival the Pure Storage-Nvidia AIRI system.

It is aimed at deep learning and, unlike FlexPod (Cisco and NetApp's converged infrastructure), has no brand name. Unlike AIRI, it doesn't have its own enclosure either.

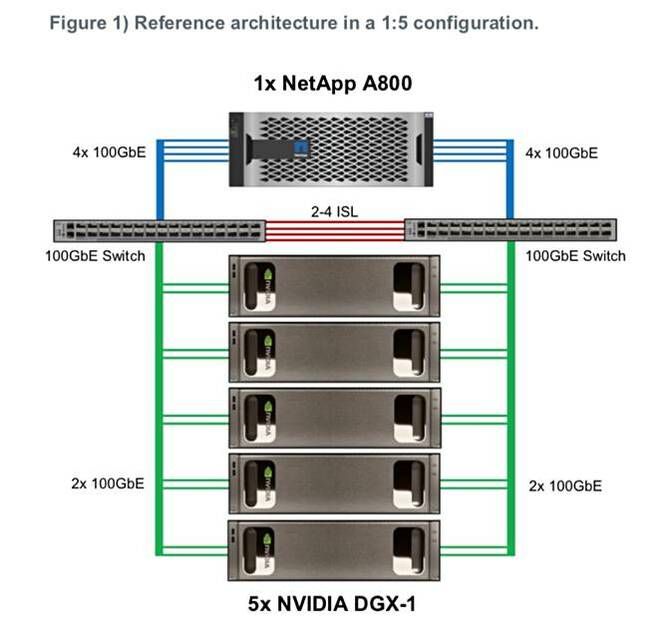

A NetApp and Nvidia technical whitepaper – Scalable AI Infrastructure: Designing For Real-World Deep Learning Use Cases (PDF) – defines a reference architecture (RA) for a NetApp A800 all-flash storage array and Nvidia DGX-1 GPU server system. There is also a slower, less expensive RA based on the A700 array.

The topline RA supports a single A800 array (high-availability pair config) with 5 x DGX-1 GPU servers hooked up across 2 x Cisco Nexus 100GbitE switches. The slower A700 all-flash array RA supports 4 x DGX-1s across 40GbitE.

The A800 system uses a 100GbitE link connecting to the DGX-1, which supports RDMA as a cluster interconnect. The A800 scales out to a 24-node cluster and 74.8PB.

The system is said to deliver 25GB/sec of read bandwidth at sub-500μsec latency.

NetApp Nvidia DL RA config diagram

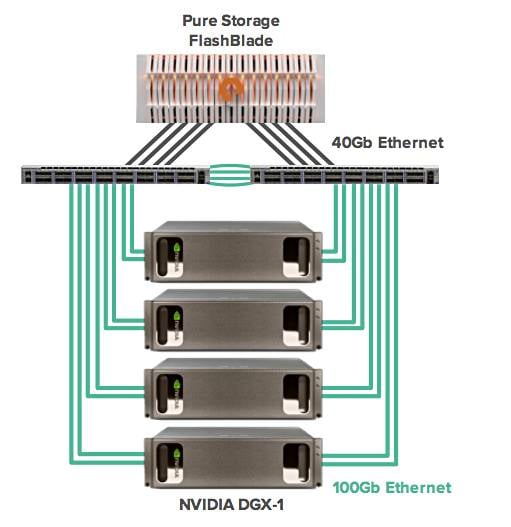

Pure Storage and Nvidia's AIRI has a FlashBlade array supporting 4 x DGX-1s. The FlashBlade delivers 17GB/sec with sub-3ms latency. This seems slow compared to the NetApp/Nvidia RA, but then the A800 is NetApp's fastest all-flash array, whereas Pure's FlashBlade is more of a capacity-optimised flash array.
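To put those headline figures on a per-GPU-server footing, here is a rough back-of-envelope sketch in Python. It assumes the quoted array bandwidth is shared evenly by every DGX-1 in each RA (five for NetApp's top-line config, four for AIRI) all reading at once, which real training jobs won't necessarily do. The latency figures are upper bounds, so their ratio compares ceilings, not measurements.

```python
# Vendor-quoted figures from the article; treat them as claims, not measurements.
NETAPP_READ_GBPS = 25.0   # A800 RA read bandwidth (GB/sec)
NETAPP_DGX1_COUNT = 5     # DGX-1s in the top-line A800 RA
PURE_READ_GBPS = 17.0     # AIRI FlashBlade bandwidth (GB/sec)
PURE_DGX1_COUNT = 4       # DGX-1s in AIRI


def per_server_bandwidth(total_gbps: float, servers: int) -> float:
    """Split array bandwidth evenly across servers (assumes all read at once)."""
    if servers <= 0:
        raise ValueError("servers must be positive")
    return total_gbps / servers


netapp_each = per_server_bandwidth(NETAPP_READ_GBPS, NETAPP_DGX1_COUNT)
pure_each = per_server_bandwidth(PURE_READ_GBPS, PURE_DGX1_COUNT)

# "sub-500μsec" vs "sub-3ms": both are ceilings, so this is a ratio of bounds.
latency_bound_ratio = 3.0 / 0.5

print(f"NetApp RA: {netapp_each:.2f} GB/sec per DGX-1")
print(f"Pure AIRI: {pure_each:.2f} GB/sec per DGX-1")
print(f"Per-server bandwidth advantage: {netapp_each / pure_each:.2f}x")
print(f"Latency ceiling ratio: {latency_bound_ratio:.0f}x")
```

On these assumptions the NetApp RA's 47 per cent aggregate bandwidth lead (25 vs 17GB/sec) shrinks to roughly 18 per cent per DGX-1 (5.00 vs 4.25GB/sec), because it feeds one more server.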

The NetApp Nvidia DL RA scales out, like Pure's AIRI Mini, starting out at one DGX-1 and growing to five. The A800 typically has 364.8TB of raw capacity. Pure's AIRI has 533TB of raw flash.
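The same rough treatment can be applied to raw capacity per GPU server. This sketch assumes each RA is at its full DGX-1 count (five for NetApp, four for AIRI) and uses raw figures only; usable capacity will be lower once data protection and other overheads are taken out.

```python
# Raw capacity figures from the article (TB), paired with DGX-1 count.
configs = {
    "NetApp A800 RA": (364.8, 5),  # (raw TB, DGX-1s at full build-out)
    "Pure AIRI": (533.0, 4),
}


def raw_tb_per_server(raw_tb: float, servers: int) -> float:
    """Raw (not usable) flash capacity per DGX-1, assuming an even split."""
    if servers <= 0:
        raise ValueError("servers must be positive")
    return raw_tb / servers


for name, (raw_tb, dgx1s) in configs.items():
    print(f"{name}: {raw_tb_per_server(raw_tb, dgx1s):.2f} TB raw per DGX-1")
```

That works out to about 73TB raw per DGX-1 for the NetApp RA against about 133TB for AIRI.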

There is also an AIRI RA document, and its config diagram looks like this:

Pure Nvidia AIRI config diagram.

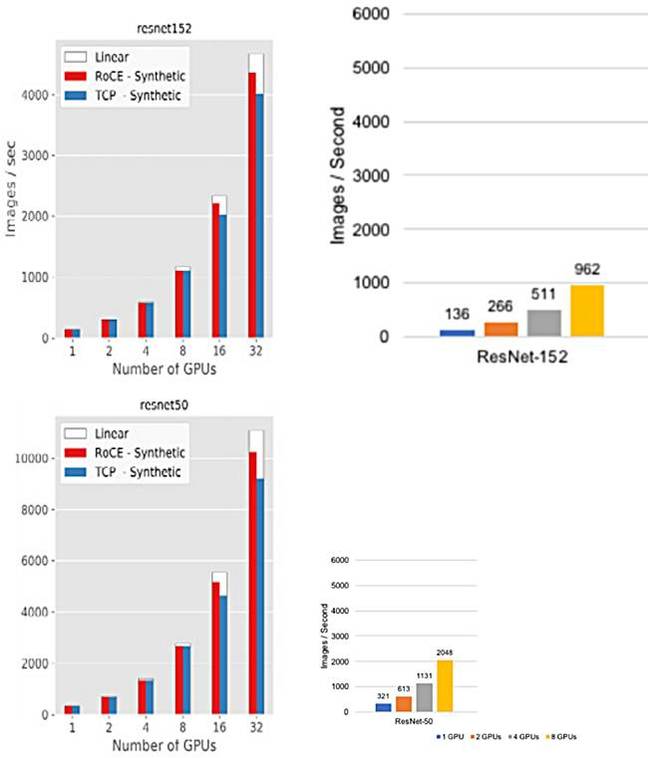

Both NetApp and Pure have run benchmarks of their two systems, and both include ResNet-152 and ResNet-50 runs using synthetic data, NFS, and a batch size of 64.

NetApp provides graphs and numbers while Pure just supplies graphs, making comparison difficult. Still, we can do a rough and ready estimate by putting those charts next to each other.

The resulting overall chart ain't pretty but does provide a means of comparison:

NetApp and Pure ResNet performance comparison

It appears from these charts, at least, that the NetApp/Nvidia RA performs better than AIRI but, to our surprise, not by much, given the NetApp/Nvidia DL system's higher bandwidth and lower latency – 25GB/sec read bandwidth and sub-500μsec – compared with the Pure AIRI system – 17GB/sec and sub-3ms.

A price comparison would be good but no one's talking dollars to us here. We might expect Nvidia to announce more deep learning partnerships like the ones with NetApp and Pure. HPE and IBM are obvious candidates as are the newer NVMe-oF-class array startups such as Apeiron, E8 and Excelero. ®