AI engines, Arm brains, DSP brawn... Versal is Xilinx's Kitchen Sink Edition FPGA

Good news: It's 7nm. Sad news: It's shipping 2H 2019

XDF Xilinx has packed everything but the kitchen sink into its new Versal family of FPGAs (field programmable gate arrays).

These are chips that have electronic circuitry you can change on-the-fly as needed, so you can morph their internal logic to suit whatever needs doing. You usually describe how you want your chip to work using a design language like SystemVerilog, which is converted to a block of data fed into the gate array to configure the internal logic.
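To make the "block of data" idea concrete, here is a toy Python model of a single lookup table, the basic building block of a gate array's programmable logic. It is a sketch only: the `LookupTable` class and its method names are invented for illustration, and the synthesis and place-and-route steps that real toolchains run to turn SystemVerilog into a configuration bitstream are not modeled at all.

```python
class LookupTable:
    """Toy k-input lookup table (hypothetical model, not a vendor API).

    The configuration bits are simply the truth table: one output bit
    for every possible combination of inputs.
    """

    def __init__(self, num_inputs, config_bits):
        self.num_inputs = num_inputs
        self.configure(config_bits)

    def configure(self, config_bits):
        """Load new configuration data, changing the logic function."""
        bits = [int(bool(b)) for b in config_bits]
        if len(bits) != 2 ** self.num_inputs:
            raise ValueError("need one configuration bit per input combination")
        self.config_bits = bits

    def evaluate(self, *inputs):
        """Use the input bits as an address into the truth table."""
        if len(inputs) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        index = 0
        for bit in inputs:
            index = (index << 1) | int(bool(bit))
        return self.config_bits[index]


# Configure the table as a two-input AND gate...
lut = LookupTable(2, [0, 0, 0, 1])
and_outputs = [lut.evaluate(a, b) for a in (0, 1) for b in (0, 1)]

# ...then reconfigure the same "hardware" as an XOR gate.
lut.configure([0, 1, 1, 0])
xor_outputs = [lut.evaluate(a, b) for a in (0, 1) for b in (0, 1)]
```

The same object behaves as two different gates depending only on the data loaded into it, which is the essence of reprogrammable logic. A real FPGA wires up hundreds of thousands of such tables, plus registers and routing.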

Typically, FPGAs are used to prototype custom chips before they are mass manufactured, or as glue between other chips by controlling their accesses to memory and peripherals. These days, engineers are eyeing up FPGAs as specialist accelerators, performing work such as network packet inspection and machine-learning math, and taking the strain off the host CPU.

Well, Xilinx hopes to lure those engineers with its Versal family, which it launched this week at its developer forum in San Jose, USA. The FPGA designer previously teased the technology in March. The chips will be fabricated by TSMC using its 7nm process node. It's hoped the gate arrays are faster than general-purpose GPU and DSP accelerators, and more flexible and cheaper than manufacturing custom high-speed silicon.

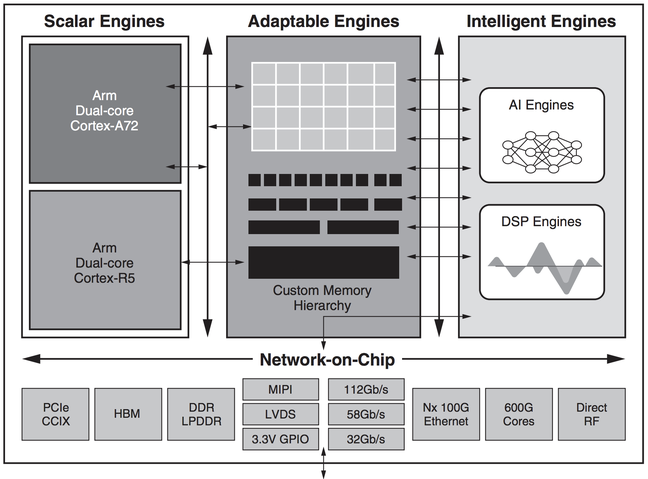

The Versal clan combines a cluster of dual-core Arm Cortex-A72 CPUs, used for running application code close to the offload circuitry, and dual-core Arm Cortex-R5 CPUs, for real-time code, with a big bunch of AI and DSP (digital signal processing) engines, plus the usual programmable logic, and a load of interfaces from 100GE to PCIe CCIX. Both the AI Core and Prime series have a platform controller included for performing secure boot, monitoring, and debug.

Any extra processing you want to do on top of the bundled math and signal coprocessor engines, you can carry out in the reprogrammable logic array.

The Versal brand right now comes in two flavors: Versal AI Core and Versal Prime. The former, as you'd expect from the name, focuses on accelerating machine-learning math operations in hardware – think self-driving cars, and data-center neural-network workloads. The latter is a more typical super-FPGA with an emphasis on signal processing – think wireless or 5G. Previous Xilinx top-end gate arrays used Cortex-A53s and Cortex-R5s, for what it's worth.

In the above block diagram, "adaptable engines" is the fancy name for the reprogrammable logic arrays and on-die memory that can be arranged in hierarchies to reduce latency and increase memory bandwidth to particular engines. The intelligent engines are very long instruction word (VLIW) and single instruction, multiple data (SIMD) processing units that crunch through data.

We're told that the aforementioned flavors will eventually be joined by: Versal AI Edge, for doing machine-learning stuff at the edge of the network down to 5W of power; Versal AI RF, for radio communications; Versal Premium, for serious high-performance applications; and Versal HBM, geared toward products that need high-bandwidth memory.

There will be software libraries and frameworks to program the engines, and hardware designers can still use the familiar Vivado tools to configure the FPGAs. It's hoped people will follow in Amazon Annapurna's footsteps, and produce smart network interfaces using the Versal family. These custom NICs can take on hypervisor networking functions, encryption, and such workloads, on the silicon, freeing up the host CPU and hardware.

Some quick specs, according to Xilinx: the Versal Prime series can have up to 3,080 intelligent engines, 984,576 logic lookup tables, 2.154m system logic cells, topping out at 31 trillion 8-bit integer operations per second (via adaptable logic) or 5 TFLOPS using 32-bit floating-point in the DSP engines (21.3 TOPS for INT8).

The Versal AI Core series can have up to 400 AI engines, 1,968 intelligent engines, 899,840 logic lookup tables, 1.968m system logic cells, topping out at 133 trillion 8-bit integer operations per second (via AI engines) or 3.2 TFLOPS using 32-bit floating-point in the DSP engines (13.6 TOPS for INT8).

You can check out Timothy Prickett Morgan's analysis, here, of Versal over on our sister site, The Next Platform, along with Nicole Hemsoth's feature on FPGA performance.

Meanwhile, Xilinx has a gentle technical paper on its Versal family here, and specifications of its AI Core series, here, and Prime series, here.

The chips will be generally available in the second half of 2019, we're told, although if you ask nicely, and mean a lot to Xilinx, you can get into its early access program.

Finally, Xilinx announced Alveo, a pair of deep neural-network accelerator cards that use UltraScale+ FPGAs to perform stuff like AI math in hardware, offloading the work from a host processor. Each dual-slot, full-height card has 64GB of DDR4 RAM, sports two QSFP28 and x16 PCIe 3.0 interfaces, and draws up to 225W.

The Alveo U250 has 1,341K logic lookup tables, 2,749K registers, and 11,508 DSP slices, while the U200 has 892K lookup tables, 1,831K registers, and 5,867 DSP slices. The U250 can perform up to 33.3 trillion operations per second, and the U200 does 18.6, when using the machine-learning inference-friendly 8-bit integer math.
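For a rough sense of how the two cards compare, the quoted figures can be turned into ratios. The short Python snippet below is a back-of-the-envelope sketch that uses only the numbers above; the dictionary layout and the `ratios` helper are made up for illustration, and peak throughput figures say nothing about how a real workload will scale.

```python
# Figures as quoted above; lookup tables and registers are in thousands.
ALVEO_SPECS = {
    "U250": {"luts_k": 1341, "registers_k": 2749,
             "dsp_slices": 11508, "int8_tops": 33.3},
    "U200": {"luts_k": 892, "registers_k": 1831,
             "dsp_slices": 5867, "int8_tops": 18.6},
}


def ratios(bigger, smaller):
    """Return bigger/smaller for every spec the two cards share."""
    return {key: bigger[key] / smaller[key] for key in bigger}


u250_vs_u200 = ratios(ALVEO_SPECS["U250"], ALVEO_SPECS["U200"])

for key, value in u250_vs_u200.items():
    print(f"{key}: {value:.2f}x")
```

On these numbers, the U250 has about 1.5 times the lookup tables and registers of the U200 and nearly twice the DSP slices, for roughly 1.8 times the quoted peak INT8 throughput.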

Xilinx claims the U250 and U200 are particularly good for real-time inference in data center servers processing information in the backend, and smoke GPU-based accelerators in terms of performance and latency, and completely blow away host general-purpose CPUs. The hardware is available now, starting from $8,995 apiece. A technical overview is here.

AMD also joined forces with Xilinx to produce a box of eight Alveo U250 cards and two Epyc server processors to form a high-speed neural-network-wrangling system that processed 30,000 pictures a second using image-classification AI software GoogLeNet. This is, apparently, a world record. ®