
In the cloud, Mumbai is a long way from Asia

Net metrics collectors ping performance pain points in a multi-cloud world

Sending packets from Singapore to Mumbai over AWS? Fetch a coffee, the latency is horrible – according to cloud performance data released yesterday.

Metrics outfit ThousandEyes ran tests from network probes deployed into 27 data centres globally, collecting connection data from 55 AWS, Azure, and Google cloud regions.

The firm measured network performance from its user locations to the cloud regions; between two regions of the same cloud; and between different clouds. The network metrics collected were bidirectional hop-by-hop path performance (think: traceroute), as well as latency, jitter, and packet loss.
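For readers who want a feel for those three numbers, here is a minimal Python sketch of how latency, jitter, and packet loss might be derived from one probe's round-trip samples. It is an illustration only: the report does not spell out ThousandEyes' exact formulas here, so the jitter definition (mean absolute difference between consecutive samples), the function name `summarise_probe`, and the sample values are all assumptions.

```python
from statistics import mean


def summarise_probe(rtts_ms):
    """Summarise one probe's round-trip samples (hypothetical helper).

    rtts_ms: round-trip times in milliseconds; None marks a lost packet.
    Returns (mean latency in ms or None, jitter in ms, loss in per cent).
    """
    if not rtts_ms:
        raise ValueError("need at least one sample")

    # Keep only the packets that came back.
    received = [r for r in rtts_ms if r is not None]

    # Packet loss: share of samples that never returned.
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)

    # Latency: plain average of the received round-trip times.
    latency = mean(received) if received else None

    # Jitter (assumed definition): mean absolute difference between
    # consecutive received samples.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = mean(diffs) if diffs else 0.0

    return latency, jitter, loss_pct


# Made-up samples, not figures from the report.
samples = [50.0, 52.0, None, 49.0, 51.0]
latency, jitter, loss = summarise_probe(samples)
print(f"latency={latency:.1f}ms jitter={jitter:.2f}ms loss={loss:.0f}%")
```

Other jitter definitions exist (standard deviation of latency, for one), so treat the figure this produces as comparable only with itself.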

And yes, some of the results are pretty intuitive – for example, in general it's best to access the cloud region closest to you to get the best latency.

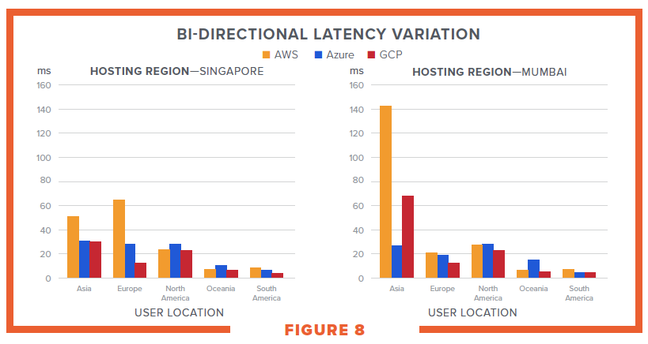

That, however, isn't always true: user-to-cloud latency depends on both region and provider. As ThousandEyes' chart shows, a user in North America, Oceania (Melbourne, Australia, since that's the only Antipodean user probe), or South America can reach AWS in Singapore faster than users in Asia.

AWS's Singapore region is, at most, 30 milliseconds away from a user probe in North America, Australia or Sao Paulo, but on average 50ms from probes in Asia and more than 60ms from Europe.

Mumbai delivered a similarly odd result: both AWS and Google (but not Azure – so well done, Microsoft net admins) are, in latency terms, a very long way away from users in Asia: 140ms and 70ms respectively.

If packet loss is important to you, the news is mostly good: ThousandEyes said loss rates were consistent around the world – except where China's “Great Firewall” is part of the network.

That leaves companies that need high-performance cloud services in China or Taiwan with a dilemma: whether or not to entrust corporate data to local cloud regions.

When it comes to connecting their different availability zones together, the research found, there was little to divide the three providers. AWS passed packets between its availability zones in 0.84ms on average; Azure (with fewer non-US data centres, since it began its offshore builds last of the three) averaged 1.04ms; and Google managed an average latency of 0.79ms.

There's also good news for customers interested in pursuing a multi-cloud strategy: everybody peers with everybody. As the report noted, “Traffic between cloud providers almost never exits the three provider backbone networks, manifesting as negligible loss and jitter in end-to-end communication.”

Between the AWS, Azure, and Google clouds, jitter ranged from 0.29ms to 0.5ms, and packet loss was a uniform 0.01 per cent.

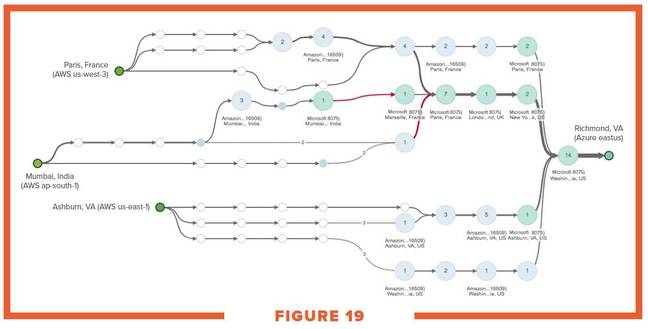

An interesting side detail the researchers turned up in their traceroutes: for inter-cloud traffic, AWS hands traffic to other clouds as close to the origin as it can.

That is why AWS cloud traffic originating in Mumbai and destined for the Azure DC in Virginia was handed to the Azure network while it was still in Mumbai (rather than, for example, traversing the AWS network to somewhere nearer the destination, such as a peering point in the US).

The researchers said this relieves pressure on the Amazon backbone network, which they speculate is the same network that carries Amazon's shopping clicks.

They note:

“AWS architects its network to push traffic away from it as soon as possible. A possible explanation for this behaviour is the high likelihood that the AWS backbone is the same as that used for amazon.com, so network design ensures that the common backbone is not overloaded.”

The full ThousandEyes report is available here. ®