Well now you node: They're not known for speed, but Ceph storage systems can fly

Flashing seriously

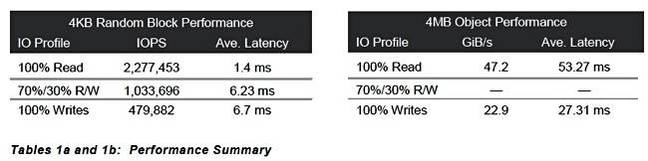

Analysis It has been revealed that open source Ceph storage systems can move a little faster than you might expect. For those who need, er, references, it seems a four-node Ceph cluster can serve 2.277 million random read IOPS using Micron NVMe SSDs – high performance by any standard.

Micron has published a 31-page Reference Architecture (RA) document to spread the message that its NVMe flash drives can accelerate Ceph, which has not previously been known as a fast-access storage software system.

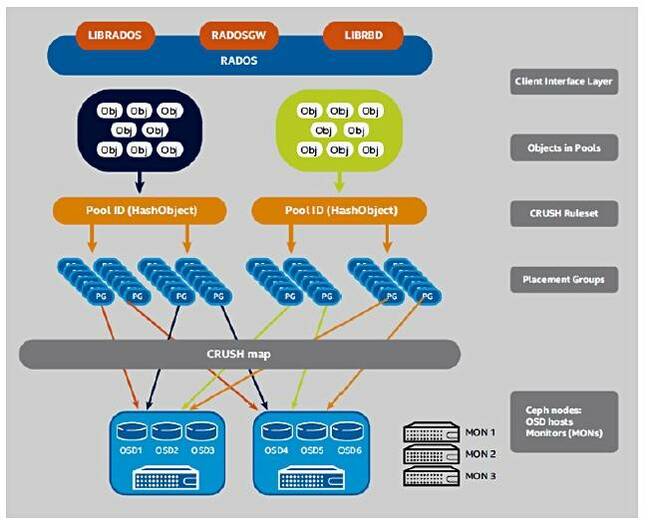

Ceph is open-source storage providing file, block and object storage using an underlying object storage scheme. It's typically built with clustered server nodes for performance, scalability and fault-tolerance. There are object storage nodes and monitor nodes and, together, they provide a virtual pool of Ceph storage.

Ceph architecture - Micron diagram

Ceph is available as a supported product from Red Hat as well as SUSE.

The basic RA components are:

- A four-node cluster of dual Xeon Platinum 8166 Purley CPU rackmount servers with 3TB RAM;

- Red Hat Enterprise Linux 7.5;

- Ceph Luminous 12.2.8;

- Micron 9200 MAX 6.4TB NVMe SSDs with 10 per 1U storage node;

- Micron 5100 SATA SSD for OS;

- 2 x Mellanox ConnectX-5 EN 100GbitE Dual Port NICs per node;

- Mellanox ConnectX-4 50GbitE networking to connect clients and monitor nodes; and

- 2 x 100GbitE switches.

The total system takes up 7U of rack space, and you can use it to build a much bigger Ceph store.
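As a rough sanity check on those numbers, the component list and the headline 2.277 million IOPS figure can be turned into per-node and per-drive averages. The sketch below is our own back-of-the-envelope arithmetic, not something from Micron's document: the function name `cluster_summary` is hypothetical, it assumes the load spreads evenly across all drives, and the capacity it reports is raw, before whatever replication or erasure coding the cluster uses.

```python
# Figures quoted in the article; everything derived from them is plain arithmetic.
STORAGE_NODES = 4            # four-node cluster
SSDS_PER_NODE = 10           # Micron 9200 MAX drives per 1U storage node
SSD_CAPACITY_TB = 6.4        # capacity of each drive
RANDOM_READ_IOPS = 2_277_000 # headline random read figure


def cluster_summary(nodes=STORAGE_NODES,
                    ssds_per_node=SSDS_PER_NODE,
                    ssd_tb=SSD_CAPACITY_TB,
                    iops=RANDOM_READ_IOPS):
    """Return raw capacity and average IOPS per node and per SSD.

    Assumes an even spread of load, which a real cluster only approximates.
    """
    total_ssds = nodes * ssds_per_node
    return {
        "total_ssds": total_ssds,
        "raw_capacity_tb": total_ssds * ssd_tb,  # before replication overhead
        "iops_per_node": iops / nodes,
        "iops_per_ssd": iops / total_ssds,
    }


if __name__ == "__main__":
    for key, value in cluster_summary().items():
        print(f"{key}: {value:,.0f}")
```

On those assumptions, the 40 drives add up to 256TB of raw flash, and the headline figure averages out at roughly 569,000 random read IOPS per node, or about 57,000 per SSD.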

The RA focuses on speeding up block performance while also accelerating object IO. Micron has said the system should perform as per this table:

Micron Ceph RA performance table

This system should ideally be compared to SAN arrays or object stores using NVMe drives; it will obviously be faster than equivalent stores built on disk drives.

The takeaway is that this RA defines a pre-validated and fully documented system that customers and sellers of the tech can use to build and deploy a quite surprisingly high-performance Ceph system. ®