Good news: Microsoft's Azure Data Box Disk is here. Bad news: UK South Storage is having a lie-down

The cloud giveth and the cloud taketh away

Updated Microsoft Azure's UK South storage region developed a distinct wobble today.

The company reckons only a subset of customers have been affected by the "availability issue", which began at 13:19 UTC, according to the status page.

@AzureSupport We're getting timeouts/failures both in the Azure portal and in applications (e.g. Azure Storage Explorer) viewing table storage in UK South - any known issues? Blobs, queues and file shares are loading ok.

— Tom Robinson (@tjrobinson) January 10, 2019

Azure Storage is Microsoft's take on a massively scalable store for data in the cloud – stuff like files, messages, NoSQL and so on. Microsoft pitches it as "durable and highly available" unless, it appears, you are making use of the UK South region.

The other region in Blighty, UK West, does not appear to be affected so unless you've shoved all your eggs into one basket, you should be fine. Right?

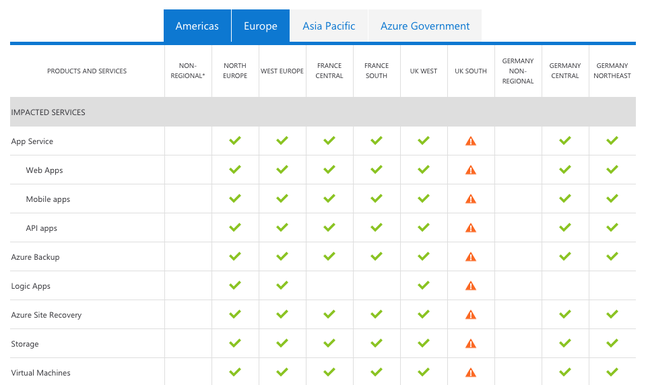

The issue has since spread to the App and Virtual Machines in the afflicted region, according to the status page, and users have begun reporting timeouts when trying to use the cloudy services.

Hey there. We're sorry about the. We do indeed have an outage in UK South at the moment. Could you DM us with which services you are having issues with and you Sub ID and we'll make sure to include you in the communication we sent out https://t.co/ObUanPWteA ^NW

— Azure Support (@AzureSupport) January 10, 2019

The Azure support mouthpiece remained impressively responsive as customers began to notice something amiss within UK South.

Hey there. We are currently having an outage in UK South in which Logic Apps is one of the affected services. If you could DM us your Sub ID we will make sure to including you in the communication https://t.co/ObUanPWteA ^NW

— Azure Support (@AzureSupport) January 10, 2019

The timing is unfortunate, coming a day after Microsoft trumpeted the arrival of General Availability of its Azure Data Box Disk. The box of SSDs (up to five disks of 8TB apiece) allows customers to shunt up to 40TB per order into US, EU, Canada and Australia Azure data centres without having to twiddle their thumbs while bandwidth is devoured.

Plug in (via USB 3.1, or SATA II or III), mount, and then copy copious data before shipping the thing back to Microsoft. Obviously with your own custom passkeys and 128-bit AES encryption.

Assuming, of course, the Azure storage you want to use is actually up, and not sipping a pint of warm, flat beer in some UK pub for the afternoon. ®

Updated to add at 0949 UTC on Friday 11 January

Aaaand, it's back. After a few false starts, Microsoft reckons the tottering UK South Storage service took a few deep breaths and stabilised at 0530 UTC on Friday morning.

Engineers had identified a single storage scale unit with what the company called an "availability issue" last night, which led to a number of nodes becoming unreachable. A software error, among other things, was to blame.

The error increased load on the poor thing until it began to topple, impacting anyone with resources depending on it (including Virtual Machines and Blob storage).

The Azure team fiddled with the code and throttled traffic until the scale unit could recover, some 16 hours after the initial wobbling began.