
First, Google touts $150 AI dev kit. Now, Nvidia's peddling a $99 Nano for GPU ML tinkerers. Do we hear $50? $50?

It only took three fscking hours of keynote to announce it – where's the GPU optimization for that?

GTC It's that time of year again. Jensen Huang, Nvidia’s CEO, paraded a range of goodies for GPU nerds, including a forthcoming credit-card sized AI computer board and software updates for its CUDA platform, during his company's annual GPU Technology Conference (GTC) in Silicon Valley on Monday.

There is nothing that’s too eyebrow-raising, unfortunately. In fact, a good amount of it was stuff previously announced, and it was all upstaged by Intel's earlier announcement that it was, as expected, building America's first exascale supercomputer using its own GPUs and CPUs. Uncle Sam's two fastest known supers use Nvidia accelerators and IBM Power processors.

If you’re looking for new and exciting architectures from Nvidia to train your beefy neural networks at ever increasing speeds, you’ll have to keep waiting. But if you want to tinker with mini AI kit, you may want to keep reading.

Here are the highlights of what was new during the big cheese's Monday afternoon keynote:

- Jetson Nano: This is a mini AI-focused dev kit, kind of like the Raspberry Pi single-board computer, aimed at hobbyists working on their own modest machine-learning experiments and projects.

The board contains a 128-core 921MHz Nvidia Maxwell GPU to accelerate neural network algorithms and similar mathematics, and a quad-core 1.4GHz ARM Cortex-A57 CPU cluster for running application code. It peaks at 472GFLOPS, we're told, and consumes about 5W to 10W. It also includes 4GB of 64-bit LPDDR4 RAM with 25.6GB/s of memory bandwidth, gigabit Ethernet, USB and various IO interfaces, HDMI output, and a microSD slot for storage. It can run Linux4Tegra.
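The Nano's headline numbers can be sanity-checked with some back-of-envelope arithmetic. The minimal Python sketch below rests on assumptions that are not in Nvidia's announcement: each CUDA core retires one fused multiply-add (two floating-point operations) per clock, packed FP16 math doubles that, and the LPDDR4 memory runs at 3,200 megatransfers per second. On those assumptions, the 472GFLOPS peak only works out at half precision.

```python
# Back-of-envelope check of the Jetson Nano figures quoted in the article.
# ASSUMPTIONS (not from the article): one fused multiply-add (2 FLOPs) per
# CUDA core per clock, packed FP16 doubling that to 4 FLOPs, and LPDDR4
# memory running at 3,200 megatransfers per second.

def peak_flops(cores: int, clock_hz: float, flops_per_core_per_clock: int) -> float:
    """Theoretical peak = cores x clock x FLOPs each core retires per cycle."""
    return cores * clock_hz * flops_per_core_per_clock


def memory_bandwidth(bus_width_bits: int, transfers_per_sec: float) -> float:
    """Bytes per second = bytes moved per transfer x transfers per second."""
    return (bus_width_bits / 8) * transfers_per_sec


# 128 Maxwell cores at 921MHz, as quoted in the article.
nano_fp16 = peak_flops(cores=128, clock_hz=921e6, flops_per_core_per_clock=4)  # FMA x half2
nano_fp32 = peak_flops(cores=128, clock_hz=921e6, flops_per_core_per_clock=2)  # FMA only

# 64-bit LPDDR4 bus, as quoted; the transfer rate is an assumption.
nano_bw = memory_bandwidth(bus_width_bits=64, transfers_per_sec=3200e6)

print(f"FP16 peak: {nano_fp16 / 1e9:.1f} GFLOPS")
print(f"FP32 peak: {nano_fp32 / 1e9:.1f} GFLOPS")
print(f"Memory bandwidth: {nano_bw / 1e9:.1f} GB/s")
```

Run as-is, it prints 471.6 GFLOPS for FP16, 235.8 GFLOPS for FP32 and 25.6 GB/s, which suggests the quoted peak is a half-precision number, with single precision at roughly half of it.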

The $99 (£75) Jetson Nano board is, basically, for enthusiasts. It's available to order now, and Nvidia's hoping it'll be a big hit with the builder community.

Meanwhile, a slightly more expensive $129 (£97) Nano option, said to be more suited for commercial developers working on edge systems and available in quantities of 1,000 or more with 16GB of eMMC storage, should be out in June. They're both set to compete against Google's AI-focused $150 (£113) TPU-based dev board, which finally went on sale earlier this month, and similar kit.

- CUDA-X: All of Nvidia’s various CUDA software libraries for data science, high-performance computing, machine learning, you name it, have been pulled onto one platform: CUDA-X. All the software packages have been optimized for its Tensor Core GPUs, such as its Volta-based parts, including the Titan V. It's supported by Amazon Web Services, Microsoft Azure, and Google Cloud, in that you can use the libraries to harness GPU hardware in those clouds.

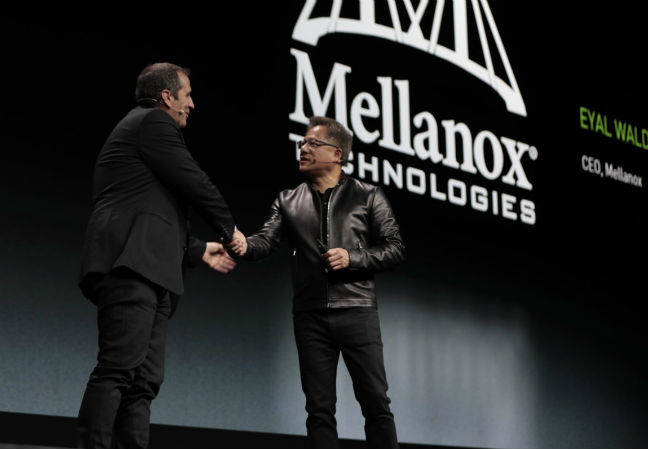

Mellanox CEO Eyal Waldman, who recently saw his silicon craftshop gobbled up by Nvidia for the princely sum of $6.9bn, was on hand during Huang's three-hour keynote to voice his support for his new overlords. He spoke on how Nvidia was pushing hard to get its chips into the bit-barns across the world.

"The program-centric datacenter is changing into a data-centric datacenter, which means the data will flow and create the programs, rather than the programs creating the data,” he enthused.

Thank you for making me rather rich ... Waldman, left, shakes hands with Huang

- Amazon to tease T4 series: Nvidia's Tesla T4 GPU, announced last year, is geared for inference workloads and will be previewed on Amazon Web Services (AWS) within the coming weeks. Powered by Turing Tensor Cores, a Tesla T4 card has 16GB of GDDR6 RAM and 320GB/s of memory bandwidth. It supports a wide range of precisions across different frameworks, including TensorFlow, PyTorch, and MXNet. At 32-bit single precision it tops out at 8.1TFLOPS, at mixed FP16/FP32 precision it packs 65TFLOPS, at INT8 it hits 130TOPS (trillions of integer operations per second), and at INT4 it’s a speedy 260TOPS, according to its designers.
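The T4's throughput ladder follows a simple pattern: each halving of operand width doubles what the Tensor Cores can do per clock. The sketch below rebuilds the quoted figures from a handful of inputs that are assumptions taken from Nvidia's published T4 and Turing specifications rather than from this article: 2,560 CUDA cores, 320 Tensor Cores, a 1.59GHz boost clock, and 64 FP16 fused multiply-adds per Tensor Core per clock.

```python
# Rebuild the Tesla T4 peak-throughput figures from first principles.
# ASSUMED inputs (Nvidia's T4/Turing spec sheets, not this article):
BOOST_HZ = 1.59e9               # boost clock
CUDA_CORES = 2560               # general-purpose FP32 cores
TENSOR_CORES = 320              # Turing Tensor Cores
FP16_FMA_PER_TC_PER_CLOCK = 64  # FP16 fused multiply-adds per Tensor Core per clock

FLOPS_PER_FMA = 2  # one multiply plus one add

# FP32 runs on the CUDA cores: one FMA per core per clock.
fp32 = CUDA_CORES * FLOPS_PER_FMA * BOOST_HZ

# Mixed precision (FP16 inputs, FP32 accumulate) runs on the Tensor Cores.
fp16_mixed = TENSOR_CORES * FP16_FMA_PER_TC_PER_CLOCK * FLOPS_PER_FMA * BOOST_HZ

# Halving the operand width doubles Tensor Core throughput at each step.
int8 = fp16_mixed * 2
int4 = int8 * 2

# Figures quoted in the article, for comparison.
quoted = {"FP32": 8.1, "FP16/FP32": 65, "INT8": 130, "INT4": 260}
computed = {"FP32": fp32, "FP16/FP32": fp16_mixed, "INT8": int8, "INT4": int4}

for mode, value in computed.items():
    # Integer modes are counted as operations, not floating-point operations.
    unit = "TOPS" if mode.startswith("INT") else "TFLOPS"
    print(f"{mode:>9}: {value / 1e12:6.1f} {unit} (quoted: {quoted[mode]})")
```

Run as-is, the estimates land at about 8.1, 65.1, 130.3 and 260.5, within rounding of Nvidia's quoted figures, which is a reasonable sign the assumed inputs are in the right ballpark.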

AWS's G4 virtual machines can sport one to eight T4 GPUs, up to 384GB of RAM, up to 1.8TB of local NVMe storage, and up to 100Gbps networking. Speaking of AWS, you can also, we're told, use Amazon's IoT Greengrass service to deploy cloud-trained neural networks to Nvidia Jetson-based hardware out on the network edge.

- Isaac SDK: Here’s one for the roboticists. Isaac SDK is a developer toolkit for building small robots that complete simple tasks such as delivering goods. It was first announced last year but has now been made, er, metal flesh.

There’s a robotics engine, a simulator for training bots in a virtual world before they’re deployed, and a set of perception and navigation algorithms. It’s suited to three different robot designs: Kaya, Carter and Link. Kaya can be powered by Nvidia’s Jetson Nano computer, while Carter and Link are larger delivery and logistics bots optimised for the Jetson AGX Xavier hardware.

- Safety force field: Nvidia’s Drive boffins have come up with a set of policies and mathematics, dubbed the Safety Force Field, that can be used by autonomous vehicles to, hopefully, avoid crashes and injuries.

- Microsoft partnership: Redmond's Azure cloud instances with Nvidia GPUs now accelerate machine-learning code that taps into RAPIDS, Nv's CUDA-X-based data-science library. The Windows giant and chip designer are also teaming up to tout video analytics.

We'll be covering more talks and announcements from GTC this week: stay tuned. ®