
Lip-reading smart speakers: Just what no one always wanted

Enjoy the silence... while you still can

Something for the Weekend, Sir? Your safe, cosy home is to become a place of weeping and gnashing of teeth. Don't worry, this is quite normal. It's how you will communicate with your next-generation smart devices.

Some 14 years after the publication of NASA-linked research on sub-vocal speech recognition, the genre is currently enjoying a bit of a revival. In the near future, you will acquire the valuable skill of accidentally telling Alexa to buy 400 rolls of toilet paper simply by clearing your throat.

This key paper from last summer, for example, examines the need for "non-acoustic modalities of subvocal or silent speech recognition" to combat the three big problems when communicating with smart speakers: interference from ambient noise; accessibility issues for those with speech disorders; and privacy challenges of having to say everything aloud. The paper goes on to describe methods of recording surface electromyographic (sEMG) signals "from muscles of the face and neck".

It has the potential of a win-win-win situation. Voice recognition-like smart device skills could be made to work in noisy environments, such as factory floors and airport aprons. Those who have undergone laryngectomies might enjoy an alternative means of pseudo-verbal communication. And best of all, that loud-mouthed git on the train might stop yelling to the rest of the carriage about the details of his recent visit to the proctologist.

Plenty of unconventional but real-world non-audio solutions to audio challenges have already been developed for consumers, mind. They just seem to have been caught up in all that VR/AR/MR and health band blah that has unfairly dominated IoT in recent years.

Most memorable of these, from personal experience at least, are headphones that vibrate against your jaw rather than plug into your ears. This enables sound waves to be detected directly by your inner ear while, bewilderingly at first, still allowing you to listen to and interact with everyday sounds through your ears in the normal way.

Unfortunately for me, the kind of music I listen to when played on one of these bone conduction headphones produces a vibration against my jaw that feels exactly like having root canal work at the dentist before the anaesthetic has completely settled in.

Audio electronics company Clarion announced a "speaker-free" car audio system as recently as CES last month. This promises to pump out your gnarly banging doomph-doomph-doomph stylee shite using the dashboard as a diaphragm and using a device behind the rear-view mirror to blow sonically enhanced air against your windscreen to turn it into a kind of sub-woofer.

The interior of my car buzzes and rattles enough already, thank you. I only have to change down a gear and its armrests and air vents spontaneously break into what sounds – appropriately – like the middle bit of Kraftwerk's Autobahn.

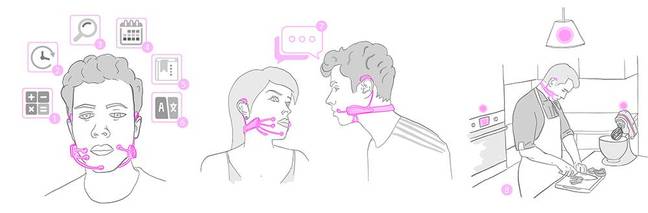

Arguably more compelling are the camera-friendly MIT Media Lab dudes currently getting good coverage with the unconventionally correctly spelt AlterEgo. This project aims to develop a wearable product that "allows a user to silently converse with a computing device without any voice or any discernible movements".

AlterEgo from MIT Media Lab's Fluid Interfaces group.

Image credit: Arnav Kapur, Neo Mohsenvand

Just years from now, I'll be wondering how I ever managed to chop vegetables without one.

Employing a system they call "internal articulation", AlterEgo detects slight internal mouth movements even as you keep your mouth closed. It's not as unlikely as it, er, sounds. Often when you are silently reading, these muscles move unconsciously anyway. Audio responses from the computer can then get fed back to the user via bone conduction, as explained earlier.

Not so much a headset as a jawset, AlterEgo will surely get smaller and less obtrusive in time (if it is shown to work, of course). And I wonder whether, in time again, the whole headjawset thing will be ditched in favour of room-cam visual recognition of the subtle movements of muscles, tendons and bones around your head. Combined with FaceID-style personal recognition, it should also distinguish which individual in the kitchen is mumbling over the carrots.

Colleagues assure me this should be regarded as a natural progression (disruption?) in consumer-facing technology interface development. Tech businesses relieved consumers of the slavery of having to use a mouse and keyboard by encouraging you to trace and tap on things directly on-screen with hand gestures.

Then they took away the screen, so you could interact using voice recognition whenever you wanted something. It only stands to reason that the next step is to take away your voice and make you use subliminal facial muscle movements instead.

Or, to put it more concisely, they give you the finger, force you to beg, then tell you to shut the fuck up. Nice.

Let's not wait. Me, I am already preparing for the impending silent revolution of ultra-intrusive, camera-in-every-room, lip-reading IoT Hell to invade every crotch and crevice of our lives. This will be a techmageddon of biblical proportions, let me tell you. Weeping and gnashing of teeth.

Now, while I feel fully skilled-up for the "weeping" bit, I'm having trouble with the "gnashing of teeth". How does one gnash? I can't seem to say "gnash" out loud without giggling, to the detriment of my weeping. Perhaps I should just clear my throat instead.

Oops, that's another 400 toilet rolls on the way.