And here's Intel's Epyc response: Up-to 56-core, 4GHz 14nm second-gen Xeon SP chips, Agilex FPGAs, persistent mem

Amazing what some competition can do: Kicking Chipzilla up the data cache

In a highly orchestrated global maneuver, Chipzilla today launched, to much of its own fanfare, its second-generation Xeon Scalable Processors for servers – chips previously codenamed Cascade Lake.

A while ago, executives at Intel-rival AMD, which made a big splash of its own with its 32-core Epyc server-class CPUs, told us they were braced for a response from Chipzilla. And here it is.

Besides the customary claims of compute performance increases, the architecture boosts potential memory capacity to 4.5TB per processor, and ups supported DDR4 speeds by about 10 percent, the manufacturer told us. This generation also features built-in deep-learning acceleration, if training robot overlords is your thing.

Standard second-generation Xeon SP SKUs max out at 28 cores and 56 threads – however, there’s also an absolutely ridiculous sibling within this family, called the Xeon SP Platinum 9200 Series, that sports up to 56 cores and 112 threads, and requires up to 400W of juice.

Intel has also revealed a range of FPGAs under the new Agilex brand, previously known as Falcon Mesa – its first new family of FPGAs to be announced since it acquired Altera for $16.7bn in 2015 – and it also took the wraps off its long-awaited Optane DC persistent memory.

Second-generation Xeon Scalable Processors

The latest crop of Xeon SPs offers between six and 56 cores, clocked anywhere from a 1.8GHz base for the Xeon SP 6222V all the way to 4.4GHz in turbo mode for the Xeon SP 6244. According to Intel, systems with up to eight sockets are supported, though we imagine most will stick to one or two.

According to Chipzilla's marketing bumpf we glimpsed ahead of launch, the more than 50 SKUs on offer were designed in “tight collaboration” with large customers. And unlike the first-generation Xeon SPs, which the silicon giant describes as general purpose, the new chips have been optimized for specific applications, such as networking and network functions virtualization (NFV), high-density virtual machine hosting, or long-life-cycle deployments.

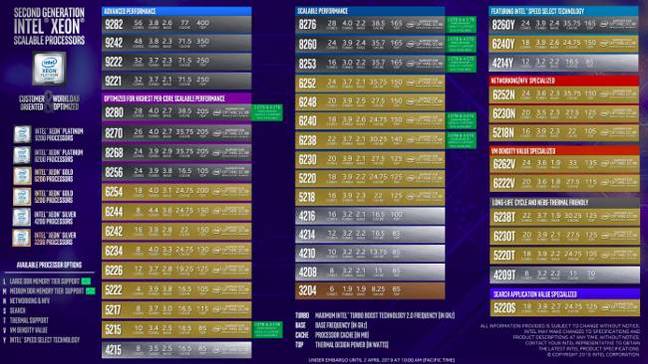

Here's a table of the available parts:

The second-generation, still-on-14nm SPs support DDR4 memory built from 16Gb DRAM chips. In terms of I/O, each processor die has up to 48 lanes of PCIe 3.0 and up to six memory channels. There’s 1MB of dedicated L2 cache per core, and up to 38.5MB of (non-inclusive) shared L3 cache per die.
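To put the "about 10 percent" DDR4 speed bump in context, here's a back-of-the-envelope sketch of theoretical peak memory bandwidth per die. It assumes DDR4-2666 as the first generation's top supported speed and DDR4-2933 for the second (figures not spelled out in Intel's announcement above), plus a 64-bit (8-byte) data path per channel. Sustained real-world bandwidth will be lower.

```python
# Back-of-the-envelope peak DRAM bandwidth per processor die.
# Assumptions (not from Intel's announcement): DDR4-2666 for first-gen
# Xeon SP, DDR4-2933 for second-gen, 8 data bytes per channel per transfer.

BYTES_PER_TRANSFER = 8  # 64-bit DDR4 channel, ignoring ECC bits
CHANNELS_PER_DIE = 6    # six memory channels per die, per the article


def peak_bandwidth_gbs(mega_transfers_per_s: int, channels: int = CHANNELS_PER_DIE) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes per second)."""
    return mega_transfers_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


first_gen = peak_bandwidth_gbs(2666)
second_gen = peak_bandwidth_gbs(2933)
uplift_pct = (second_gen / first_gen - 1) * 100

print(f"first gen:  {first_gen:.1f} GB/s per die")
print(f"second gen: {second_gen:.1f} GB/s per die")
print(f"uplift:     {uplift_pct:.1f}%")
```

Passing `channels=12` gives the equivalent ceiling for a two-die Platinum 9200 package.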

New features also include Intel Deep Learning Boost (DL Boost), which adds Vector Neural Network Instructions (VNNI) to the AVX-512 extension to speed up the low-precision integer arithmetic common in neural-network workloads. That should prove very useful in machine learning with popular frameworks such as TensorFlow or Caffe.

The new Xeon SP Platinum 9200 product line deserves a special mention. Intel’s latest top-end processors glue two 8200-series dies into a single BGA package – a big change for Chipzilla. The use of multiple separate dies within a package follows a similar approach taken by AMD in its high-end Zen processors.

This move for Intel doubles the 9200 family's maximum number of memory channels to 12, and doubles up the L3 cache. A dual-processor Platinum 9200 Series system would therefore sport up to 112 CPU cores and 224 threads, and up to 154MB of cache. The 9200 line's BGA connectivity also means you'll have to get a motherboard with the Xeon SP chips affixed to it, rather than in a socket, so check for that when looking around for purchases.
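The dual-socket totals follow from multiplying the per-package figures for the top-end 9282 in Intel's spec list (56 cores, two threads per core, 77MB of cache, 12 memory channels) by two. Here's a small Python sketch. The `Package` class is a hypothetical helper for illustration only.

```python
# Scale per-package Platinum 9282 figures (from the spec list in this article)
# to a multi-socket system. Plain multiplication; the class is illustrative.
from dataclasses import dataclass


@dataclass(frozen=True)
class Package:
    name: str
    cores: int
    threads_per_core: int
    l3_cache_mb: float
    memory_channels: int

    def system_totals(self, sockets: int) -> dict:
        """Totals for a system built from `sockets` identical packages."""
        return {
            "cores": self.cores * sockets,
            "threads": self.cores * self.threads_per_core * sockets,
            "l3_cache_mb": self.l3_cache_mb * sockets,
            "memory_channels": self.memory_channels * sockets,
        }


xeon_9282 = Package("Platinum 9282", cores=56, threads_per_core=2,
                    l3_cache_mb=77.0, memory_channels=12)
print(xeon_9282.system_totals(sockets=2))
```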

It's worth mentioning that AMD's second-generation 7nm Epyc, codenamed Rome, will sport up to 64 CPU cores and 128 threads per socket, when it eventually emerges.

According to Intel's benchmarking, its top-of-the-line chip, the 9282, delivers almost twice the LINPACK performance of the previous generation’s top-end 8180.

Here's a summary of the stand-out second-gen Xeon SP processors:

Platinum 9200 and 8200 Series

9282: 56 cores, 2.6GHz base clock, 3.8GHz turbo, 77MB of cache, 400W

9242: 48 cores, 2.3GHz base clock, 3.8GHz turbo, 71.5MB of cache, 350W

9222: 32 cores, 2.3GHz base clock, 3.7GHz turbo, 71.5MB of cache, 250W

8280: 28 cores, 2.7GHz base clock, 4.0GHz turbo, 38.5MB of cache, 205W

8270: 26 cores, 2.7GHz base clock, 4.0GHz turbo, 35.75MB of cache, 205W

Gold 6200 Series

6254: 18 cores, 3.1GHz base clock, 4.0GHz turbo, 24.75MB of cache, 200W

6244: 8 cores, 3.6GHz base clock, 4.4GHz turbo, 24.75MB of cache, 150W

Gold 6200 Series - network specialized

6252N: 24 cores, 2.3GHz base clock, 3.6GHz turbo, 35.75MB of cache, 150W

6230N: 20 cores, 2.3GHz base clock, 3.5GHz turbo, 27.5MB of cache, 125W

Gold 6200 Series - VM density specialized

6262V: 24 cores, 1.9GHz base clock, 3.6GHz turbo, 33MB of cache, 135W

6222V: 20 cores, 1.8GHz base clock, 3.6GHz turbo, 27.5MB of cache, 115W
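Every cache figure in the list above divides evenly by 1.375MB, which we assume here to be the size of one L3 slice on Intel's Skylake-derived server dies (drawn from Intel's earlier architecture disclosures, not from this launch). Parts with more cache than their core count implies, such as the eight-core 6244, appear to keep extra slices enabled. A quick Python check over the listed figures, with `l3_slices` as a hypothetical helper:

```python
# Cache figures copied from the spec list above: name -> (cores, L3 cache in MB).
# Assumption: L3 is built from 1.375MB slices.
SLICE_MB = 1.375

parts = {
    "9282": (56, 77.0), "9242": (48, 71.5), "9222": (32, 71.5),
    "8280": (28, 38.5), "8270": (26, 35.75),
    "6254": (18, 24.75), "6244": (8, 24.75),
    "6252N": (24, 35.75), "6230N": (20, 27.5),
    "6262V": (24, 33.0), "6222V": (20, 27.5),
}


def l3_slices(cache_mb: float) -> int:
    """Number of whole L3 slices in a cache figure; rejects non-multiples."""
    slices = cache_mb / SLICE_MB
    if not slices.is_integer():
        raise ValueError(f"{cache_mb}MB is not a whole number of slices")
    return int(slices)


for name, (cores, cache_mb) in parts.items():
    slices = l3_slices(cache_mb)
    print(f"{name:>6}: {cores:2d} cores, {slices:2d} slices, "
          f"{cache_mb / cores:.3f}MB per core, {slices - cores} extra slices")
```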

All the new silicon has integrated fixes for last year's infamous data-leaking Spectre and Meltdown CPU vulnerabilities – or in the company’s parlance, side-channel hardware mitigations. There are six patches in total for various types of side-channel attacks, and five have some or all of their functionality baked into silicon. Intel says these hardware mitigations are expected to have less overhead than the software fixes rolled out for the first-generation Xeon SPs – with general compute performance improving between three and five percent in the manufacturer's own benchmarking.

To be clear, mitigating the design flaws carries an overhead whether it's done in hardware or software; the hope is that the hardware fixes cost less than the software-only updates. Unfortunately, eliminating the flaws entirely would require a fundamental rethink of Chipzilla's x86 architecture, which Intel is unlikely to attempt.

“They can’t patch all of it, because the only way to completely get rid of it is to completely get rid of speculative execution in caching, and if you do that, your shiny modern Core i7 performs as well as a ‘286,” Allison Randal, software developer and open source strategist, explained at the Open Infrastructure Days event earlier this week.

All the new Xeon SPs are available immediately, worldwide, except the 9200s: those are coming in the "first half" of 2019. For pricing, see Intel's Ark when it's finally updated, or beg: for the high-end parts, if you have to ask, you probably can't afford it. A Platinum 8280, for instance, will set you back between $10,000 and $18,000 depending on the configuration, minus your negotiated discount.

Agilex FPGAs

Intel’s new family of field-programmable gate arrays (FPGAs) combines several types of semiconductor tech, including high-bandwidth memory, to accelerate applications, complementing the work of the CPU cores. According to Intel, Agilex devices can deliver either 40 percent more performance than Altera’s Stratix 10 family of FPGAs, or 40 percent less power consumption.

The new FPGAs are fabricated using a 10nm process with 3D system-in-package (SiP) miniaturization technology, and communicate with the CPU cores using Compute Express Link, a high-bandwidth interconnect whose specification reached version 1.0 just a few weeks ago.

Agilex FPGAs are positioned for uses in networking, as smart network interface cards, analytics and machine learning, among other applications. They will start sampling in the second half of 2019.

Optane DC

Intel has finally launched Optane DC, its take on persistent, or non-volatile, faster-than-flash, bigger-than-DRAM memory. Optane memory is almost as quick as RAM, yet it retains data after the system is switched off, so it can also act as an ultra-fast, high-capacity storage device.

The goal here is not to replace DRAM but to work alongside it, creating a tiered structure that brings often-used data closer to the processor.
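As a rough illustration of the tiering idea, here's a minimal Python sketch of a two-tier store: a small, fast tier (standing in for DRAM) keeps the most recently used items and demotes the least recently used ones to a larger, slower tier (standing in for Optane). This is a generic LRU model chosen for clarity, not a description of how Intel's hardware or software actually manages the tiers; `TwoTierStore` and its methods are hypothetical.

```python
from collections import OrderedDict


class TwoTierStore:
    """Hypothetical two-tier key-value store: a small LRU 'fast' tier in front
    of an unbounded 'slow' tier. Purely illustrative of data tiering."""

    def __init__(self, fast_capacity: int) -> None:
        if fast_capacity < 1:
            raise ValueError("fast tier needs room for at least one item")
        self.fast_capacity = fast_capacity
        self.fast: OrderedDict = OrderedDict()  # recency order, oldest first
        self.slow: dict = {}
        self.fast_hits = 0
        self.slow_hits = 0

    def _promote(self, key, value) -> None:
        """Place key in the fast tier, demoting the LRU item if it overflows."""
        self.fast[key] = value
        self.fast.move_to_end(key)
        if len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)
            self.slow[old_key] = old_value

    def put(self, key, value) -> None:
        self.slow.pop(key, None)  # keep a single copy of each key
        self._promote(key, value)

    def get(self, key):
        if key in self.fast:
            self.fast_hits += 1
            self.fast.move_to_end(key)
            return self.fast[key]
        value = self.slow.pop(key)  # raises KeyError if absent from both tiers
        self.slow_hits += 1
        self._promote(key, value)   # recently used data migrates to the fast tier
        return value


store = TwoTierStore(fast_capacity=2)
for k in "abc":
    store.put(k, k.upper())         # "a" is demoted to the slow tier
print(store.get("a"), store.slow_hits)
```

The fast tier here is fully associative with LRU eviction purely for readability; a hardware cache would make a cheaper placement choice.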

All Optane products are based on the 3D XPoint technology Intel developed with Micron, but the two companies have since experienced something of a cooling of relations, with Micron buying Intel out of their jointly owned manufacturing facility in Utah, USA.

3D XPoint was initially applied to SSDs, to create Intel’s "ruler" storage format, launched in 2018. Now, a similar type of silicon has been used to build memory.

The new memory sticks come in capacities of 128GB to 512GB per module. They promise up to 8.3GB/s reads and up to 3.0GB/s writes, with latency that’s a bit higher than RAM's but “orders of magnitude” lower than that of SSDs. Optane memory also features hardware-based encryption, something no DRAM device is capable of.

Optane DC is by no means the only type of non-volatile memory on the market, but it’s one of the most elegant solutions: to achieve a similar result, some of its competitors have previously equipped DIMMs with batteries, while others combined memory and flash storage on the same stick.

It is extremely important to note that Optane DC memory will only work with selected Intel Cascade Lake processors – not all of them, especially not the high-performance ones – so AMD enthusiasts, and owners of older Intel silicon, will have to look for their NVDIMMs elsewhere.

Finally, Intel also announced its Ethernet 800 NIC Series with speeds up to 100Gbps. ®

More analysis, and more feeds and speeds, can be found on our high-performance computing sister title, The Next Platform: Timothy Prickett Morgan covers the Xeon SP news, and Michael Feldman covers Agilex.