
OK, Google. We've got just the gesture for you: Hand-tracking Project Soli coming to Pixel 4

Mobe will watch, er, fingers as well as listen for commands

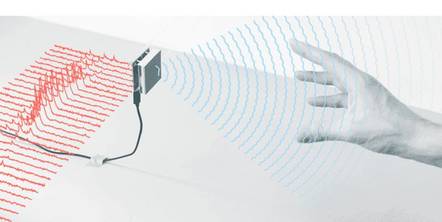

Google will include motion-sensing radar in the forthcoming Pixel 4 smartphone to enable gesture control by waving your hand.

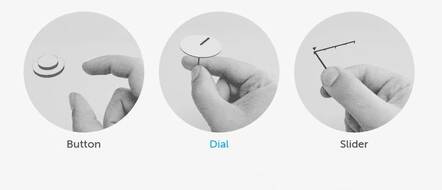

Project Soli uses electromagnetic waves to detect the size, shape, material, distance and velocity of nearby objects. The system is optimised for motion resolution to detect things like finger movements. Examples on the Soli website include sliding your thumb along a finger to simulate a slider control, or twirling a virtual dial by rubbing finger and thumb together.

Google's announcement of Soli in the Pixel 4 is unambitious, saying: "Pixel 4 will be the first device with Soli, powering our new Motion Sense features to allow you to skip songs, snooze alarms, and silence phone calls, just by waving your hand."

Soli will also enable face unlock without you having to look at the phone's camera. When you pick up the phone, sensors will attempt to recognise you with the phone "in almost any orientation". This biometric identification will also work for secure payments and app authentication.

Using face unlock with the phone in any orientation is a plus for convenience. Make it too convenient, though, and it could be open to abuse, such as someone grabbing your phone and holding it close to your face to unlock it. There are claims that police trying to control protesters in Hong Kong have done this, for example. "As they grabbed him they needed access to his phone and so they tried to actually force his face in front of his phone to use the phone's facial recognition function to get it to unlock," reported journalist Paul Mozur.

In its post, Google refers to Motion Sense as part of its "vision for ambient computing". The idea is that we are surrounded by computing devices with which we can interact in many different ways, so that instead of thinking about specific computers, we can get help anywhere.

This vision will send a chill through those concerned about privacy and data collection. If devices are ready to "help", they are also listening for keywords or now watching for gestures, and this kind of data collection can have unintended consequences such as audio recordings of private moments being heard by third-party contractors.

Another question for Google is how much value gesture control adds. Microsoft had high hopes for gesture control for the Xbox games console when it released the Kinect add-on in November 2010. The ability to control an Xbox from a distance without a controller seemed a strong use case, but it turned out that gestures were harder to use, less reliable and less precise than a controller.

The inclusion of the Kinect at the launch of the Xbox One was a factor in its relative lack of success versus the PlayStation 4, since it added to the cost without delivering much value.

Microsoft also uses gesture control in the HoloLens mixed reality device, where it is necessary because there is no keyboard, mouse or touchable screen. It works, but more as a necessary evil than a benefit.

Project Soli might be different. Technology has improved since the days of Kinect and in principle the ability to control a phone with a wave or a wagged finger could make the device easier to use as well as having accessibility benefits. But the execution will need to be excellent. ®