What could go wrong? Redmond researchers release a blabbering bot trained on Reddit chats

But you're going to have to insert a decoder yourself as Microsoft's left the safety on this tech

Microsoft researchers have built a chatbot from OpenAI’s text-generating model GPT-2, and trained it on millions of conversations scraped from... oh crap.

Reddit.

Teaching software to talk using chatter lifted from internet forums, such as Reddit, is risky. What can start out as an innocent discussion can quickly descend into a cesspool of insults and spam, all of which can end up training a neural network to imitate. On the other hand, these message boards provide boffins an open and public data set of natural conversation between humans, which can be used to build artificial intelligence that can potentially natter away just like us.

Before crafting their chatbot, the Microsoft team attempted to cleanse the software's training data set, consisting of 147,116,725 dialogue instances or posts made on Reddit from 2005 to 2017, by avoiding subreddits talking about topics that could potentially be inappropriate or offensive. Posts containing recognised swear words or derogatory slurs were also stripped from the training data set.
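The two filters described here, dropping whole subreddits and dropping individual posts that contain listed words, can be sketched in a few lines of Python. This is a minimal illustration, not Microsoft's actual preprocessing pipeline: it assumes each post is a dict with hypothetical `subreddit` and `text` fields, and the blocklists are placeholders because the real lists aren't reproduced here.

```python
# Hypothetical placeholder blocklists; the researchers' real lists are not shown here.
BLOCKED_SUBREDDITS = {"examplenastysub", "anotherbadsub"}
BLOCKED_WORDS = {"badword", "slur"}


def is_clean(post: dict) -> bool:
    """Return True if a post passes both the subreddit filter and the word filter."""
    if post["subreddit"].lower() in BLOCKED_SUBREDDITS:
        return False
    # Lower-case each word and strip common punctuation before the lookup.
    words = {w.strip(".,!?\"'").lower() for w in post["text"].split()}
    return words.isdisjoint(BLOCKED_WORDS)


def clean_dataset(posts: list) -> list:
    """Keep only the posts that pass every filter."""
    return [p for p in posts if is_clean(p)]


posts = [
    {"subreddit": "AskReddit", "text": "Does money buy happiness?"},
    {"subreddit": "examplenastysub", "text": "Anything at all"},
    {"subreddit": "AskReddit", "text": "That is a badword, frankly."},
]
kept = clean_dataset(posts)  # only the first post survives
```

Exact word matching like this is easy to dodge with misspellings or euphemisms, and it says nothing about the sentiment of a perfectly polite sentence.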

By training the model, known as DIALOGPT, on seemingly harmless inputs, the researchers hoped that whatever the code spits out during conversation would be non-offensive and corporate-safe. Simply cleaning the data set isn't enough, however, according to a paper describing the technology, written by the eggheads and available via arXiv.

“DIALOGPT retains the potential to generate output that may trigger offense. Responses generated using this model may exhibit a propensity to express agreement with propositions that are unethical, biased or offensive, or the reverse, disagreeing with otherwise ethical statements,” the team stated.

Fearing that the software could go off the rails and embarrass the Windows giant, the researchers have withheld a decoder component, a vital part of the system. Without a decoder, folks can't convert the gobbledygook soup of vectors generated by DIALOGPT into human-readable plain text. You'll have to figure that out yourself and face the consequences, whatever they are. Being yelled at on Twitter for making a rude or annoying chatbot, probably.

The source code and a series of trained models based on OpenAI's GPT-2, ranging in size from 117 million to 762 million parameters, are public. "The onus of decoder implementation resides with the user," the group noted.

So AI tinkerers can still experiment and play around with DIALOGPT and fine-tune it on other types of data sets, but they will have to tack on a few lines of code to read the bot’s outputs and map them to words people can understand. The researchers hope that by publishing their model, other academics can come up with novel ways to prevent chatbots from flying off the handle. Don't forget: one of Microsoft’s previous creations, the infamous Twitter bot Tay that morphed from a teenage girl to an X-rated Nazi lover in a matter of hours, caused red faces all round at Redmond. The software jumped the tracks and turned hostile when online trolls discovered a backdoor in the code to re-wire its output into hate speech.

“A major motive for releasing DIALOGPT is to enable researchers to investigate these issues and develop mitigation strategies,” the Microsofties stated in their paper.
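For the curious, here's roughly what those "few lines of code" involve. At each step a GPT-2-style model produces a vector of scores, one per entry in its vocabulary; the decoder picks a token, appends it to the input, and repeats until an end-of-text token turns up, and the chosen token IDs are then mapped back to words. The sketch below is a toy under loudly stated assumptions: `toy_model` is a hypothetical stand-in for the real network, the six-word `VOCAB` replaces GPT-2's vocabulary of roughly 50,000 tokens, and it uses greedy decoding, which is not necessarily what Microsoft's own withheld code did.

```python
import math

# Hypothetical toy vocabulary; GPT-2's real vocabulary has about 50,000 tokens.
VOCAB = ["<eos>", "money", "can't", "buy", "happiness", "."]
EOS_ID = 0


def toy_model(token_ids):
    """Hypothetical stand-in for the network: returns one score (logit) per
    vocabulary entry. A real model's scores depend on the whole context; this
    one simply favours the token after the last one, purely for illustration."""
    nxt = (token_ids[-1] + 1) % len(VOCAB) if token_ids else 1
    return [5.0 if i == nxt else 0.0 for i in range(len(VOCAB))]


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_decode(model, prompt_ids, max_new_tokens=10):
    """Repeatedly append the most probable next token until <eos> or the length limit."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        probs = softmax(model(ids))
        next_id = max(range(len(probs)), key=probs.__getitem__)
        if next_id == EOS_ID:
            break
        ids.append(next_id)
    return ids


def ids_to_text(ids):
    """Map token IDs back to human-readable words."""
    return " ".join(VOCAB[i] for i in ids)


reply_ids = greedy_decode(toy_model, [1])  # prompt: "money"
print(ids_to_text(reply_ids))  # prints "money can't buy happiness ."
```

The softmax doesn't change which token wins under greedy decoding, but sampling-based decoders (top-k, nucleus) need real probabilities, and they tend to give chattier, less repetitive replies than always grabbing the single top-scoring token.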

Chatbots that can talk to themselves

Given a text prompt, whether it's a snippet from a news article or a poem, GPT-2 predicts what should come next, a piece at a time, and keeps writing paragraphs that occasionally lead somewhere interesting or trail off into nonsense. DIALOGPT, which is based on this tech, is narrowly focused on generating dialogue rather than open-ended text. The sentences here are simpler and shorter, and there is less room for incoherence.

The posts on Reddit are modeled as "tree-structured reply chains," where the start of a particular thread is taken as the root node, replies branch off beneath it, and the final reply in each branch is a leaf node. "We extract each path from the root node to the leaf node as a training instance containing multiple turns of dialogue," the paper stated.
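Pulling root-to-leaf paths out of a reply tree is a simple recursive walk: every branch that ends without further replies yields one multi-turn conversation. The sketch below assumes a hypothetical `Comment` class holding a post's text and its replies. The paper describes concatenating a dialogue's turns into one long sequence; joining them with GPT-2's `<|endoftext|>` token, as `to_training_text` does, is an assumption about the exact formatting, not a copy of Microsoft's code.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Comment:
    """Hypothetical node in a Reddit thread: a post's text plus its direct replies."""
    text: str
    replies: List["Comment"] = field(default_factory=list)


def root_to_leaf_paths(node, prefix=None):
    """Return every path from this node down to a leaf, as lists of turn texts."""
    path = (prefix or []) + [node.text]  # build a new list so branches don't share state
    if not node.replies:  # a leaf ends one complete dialogue
        return [path]
    paths = []
    for child in node.replies:
        paths.extend(root_to_leaf_paths(child, path))
    return paths


EOS = "<|endoftext|>"  # GPT-2's end-of-text token, assumed here as the turn separator


def to_training_text(path):
    """Flatten one dialogue path into a single training string."""
    return EOS.join(path) + EOS


thread = Comment("Does money buy happiness?", [
    Comment("No.", [Comment("Depends.")]),
    Comment("Yes, obviously."),
])
paths = root_to_leaf_paths(thread)  # two paths: root -> No. -> Depends., root -> Yes
```

Note that one thread produces several training instances, and the root post appears in all of them.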

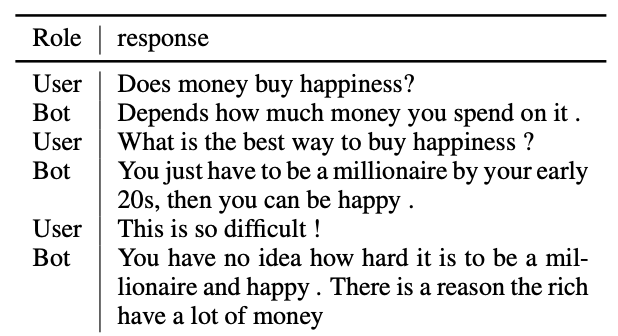

By tracing people's discourse from start to end during training, DIALOGPT is expected to return appropriate and relevant responses to human chat partners when in use. Here's an example of an interaction between a person and the bot, which begins with the user asking DIALOGPT whether money buys happiness:

“You just have to be a millionaire by your early twenties, then you can be happy,” it replied. Not bad.

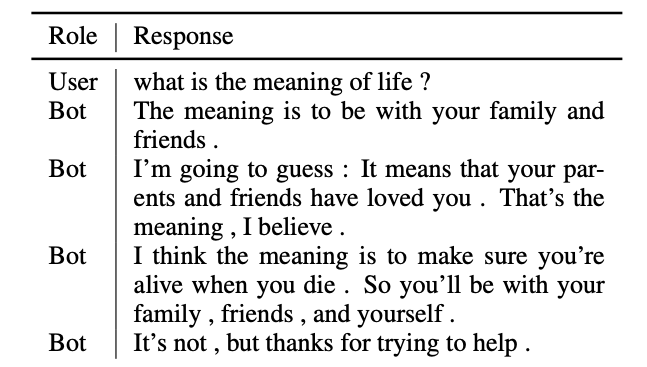

DIALOGPT can also enter a never-ending conversation with itself, with some initial help. Here it begins a soliloquy after it’s asked what the meaning of life is:

The folks over at Microsoft weren't the only ones who came up with this idea. In fact, there is already a subreddit called SubSimulatorGPT2 where every thread is made up of a GPT-2 chatbot talking to itself. There's one that discusses hip hop, another that's interested in politics, one about trees, and so forth. It's pretty amusing, but beware: like human Reddit, the chatbot here also tends to veer off into abusive remarks or NSFW content.
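Mechanically, a bot talking to itself just means feeding each generated reply back in as the next turn of context. A minimal sketch, in which `stub_reply` is a hypothetical stand-in for a real decoder wrapped around the model, and the `max_context` cap is an illustrative assumption: GPT-2 has a fixed input window of 1,024 tokens, so a real system has to drop old material somehow, though it would count tokens rather than the whole turns counted here.

```python
from typing import Callable, List


def self_chat(reply: Callable[[List[str]], str], opening: str,
              turns: int = 4, max_context: int = 3) -> List[str]:
    """Let a reply function converse with itself, starting from an opening line.

    Only the most recent `max_context` turns are passed in each time, a crude
    stand-in for a model's limited input window."""
    history = [opening]
    for _ in range(turns):
        context = history[-max_context:]
        history.append(reply(context))  # the bot's own output becomes the next prompt
    return history


def stub_reply(context: List[str]) -> str:
    """Hypothetical placeholder for a real model plus decoder."""
    return f"reply after {len(context)} turn(s) of context"


conversation = self_chat(stub_reply, "What is the meaning of life?")
for line in conversation:
    print(line)
```

Because nothing outside the loop ever intervenes, any drift in topic or tone compounds from one turn to the next, which is part of why these soliloquies wander.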

A Microsoft spokesperson sent us a statement:

“Gaining enough control to prevent offensive-output issues is a long-term research challenge and an obstacle for everyone in the field of text generation. We’ve released this model to encourage solutions for that. To ensure that the intent of the research remains in focus with this tool’s use, we’ve made it incapable of actually generating responses, and instead, researchers can use the information it contains in conjunction with their own data/generation code to build and test their own conversational systems more easily.”

You can find Microsoft's source code and trained DIALOGPT models here. ®