Arm goes off road... map: 5nm Cortex-X1 touted for phone, tablet, laptop processors needing Apple-level oomph

Meanwhile, A78, Mali-G78 and Ethos-N78 announced, too

Arm today will unveil its Cortex-A78 CPU core, lined up for next-gen phones, tablets, and laptops, and go off roadmap with its Cortex-X1, both using a 5nm process node.

It will also announce its Ethos-N78 processing unit for accelerating machine-learning workloads on devices, and its Mali-G78 GPU for high-end handhelds.

Let's take a run through each of the announcements due today.

Cortex-X1: Arm has a roadmap for its Cortex CPUs, ranging from the Cortex-M series of microcontroller-grade cores for tiny electronics to the Cortex-A range that powers a lot of today's smartphones. Arm has, since its early days, focused on embedded electronics and mobile, squeezing the most performance out of its RISC designs while being battery friendly, and its roadmaps reflect that.

If Arm had to pick two out of low power, low cost, and high performance, it would ordinarily pick the first two. And by cost we mean everything from die area of its designs to royalties it charges its customers for its technology.

Manufacturers that license Arm's blueprints for their chips can go one of two routes: they can take an architectural license, like what Apple has, and design homegrown Arm-compatible CPUs from scratch that follow the instruction set architecture; or they can license individual cores and plug them into system-on-chips, and pay a royalty per chip shipped.

That latter route is the one the vast majority of Arm's customers take because, well, Arm has done most of the work in designing the cores. However, that means sticking to Arm's menu of designs. Some customers wanted customized blueprints for their system-on-chips, so Arm allowed some of its clients to license CPU cores, request tweaks to the designs to suit their products, and ship the resulting technology marketed as "Built on Arm Cortex Technology." For example, Qualcomm's Kryo 200-series of CPU cores in its Snapdragon line were semi-customized Cortex-A cores, though the fact they were "Built on Arm Cortex Technology" was, in our experience, hidden in the marketing bumpf.

The Cortex-X1 family is, from what The Reg can tell, pretty close to that "Built on Arm Cortex Technology" program except, as one Arm executive told us, it is "very clear where the technology comes from." Arm wants its Cortex brand up front and center: the resulting X1 CPU cores will carry the Cortex branding.

It all boils down to this: if a few clients want something off the standard Cortex-A roadmap, their engineers can chat with Arm's engineers, and get an off-roadmap Cortex design that meets their needs – such as one that prioritizes single-core performance way over battery life. What that means for you and me is system-on-chips in forthcoming phones and laptops that feature a mix of standard Cortex-A CPU cores that balance performance and power consumption, and Cortex-X1 cores that shoot for desktop-level performance and are used in short bursts for demanding tasks when needed. That prepares Arm system-on-chips for next-gen laptops, high-end phones, and so on.

In other words, it allows Arm and its customers to find "performance points we can agree on," an exec told us, adding: "What if power and die area need to take a backseat to get high single core performance?" That sounds like certain customers (cough, cough, Qualcomm) banging on Arm's door, demanding a super-high-end Cortex CPU core or two for their laptop processors to counter the silicon in Arm-compatible Apple Macs supposedly coming next year. Apple's homegrown CPU cores are known to be high performance, setting a bar for the rest of the mobile Arm world. Laptop-grade system-on-chips in non-Apple gear may need to sport a super-big.LITTLE approach with one or more Cortex-X1 CPU cores alongside smaller Cortex-As to compete against the microprocessors in Apple's Arm Mac offerings, if they ever appear on the market.

The Cortex-X1 offering suggests, to us at least, that previous customer-specified cores "Built on Arm Cortex Technology" were not quite to Arm's liking; they were not optimized as the blueprint creators had hoped. The Cortex-X1 is a diplomatic way for Arm to find a performance-power-area point off its roadmap that a customer desires and can license, and stick in a system-on-chip within a year. Where it gets messy is how one customer differentiates their Cortex-X1 from a rival's Cortex-X1. That, it seems, is up to the individual clients: the core will still, at the least, be known publicly as a Cortex-X1.

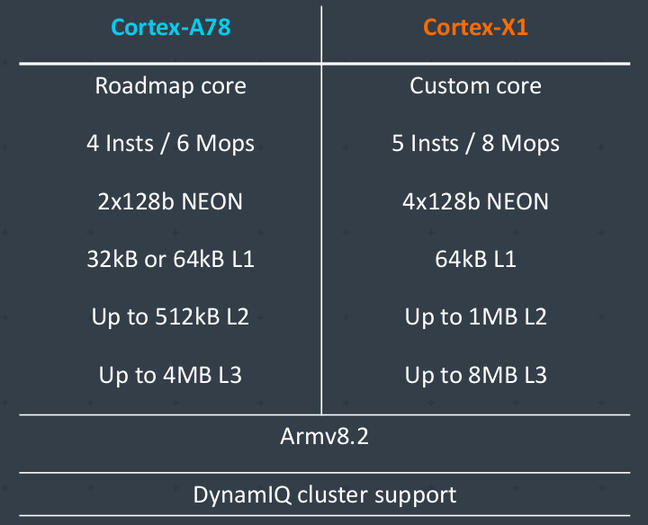

So what can we expect from a Cortex-X1? Below is Arm's table comparing the X1 to the A78, which we'll get to:

For the unsure, that means the Cortex-A78 fetches four instructions at a time from the instruction cache, and six macro-operations (MOPS) from the MOPS cache; the X1 does five and eight, respectively. Both are 64-bit Armv8.2-A CPU cores. The X1 has twice as many NEON SIMD 128-bit engines, four in total, compared to the A78, and more L1 to L3 cache than the A78, as you might expect for a custom high-performance part: 64KB of L1 in the X1, versus 32 or 64KB in the A78; up to 1MB of L2, versus up to 512KB; and up to 8MB of L3, versus up to 4MB. To be clear: the X1 is aimed at "client" devices – smartphones, laptops, and so on – not servers, low-end or otherwise.
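To put those figures side by side, here is a rough sketch in Python that records the numbers quoted above and works out the X1-to-A78 ratios. The `CoreSpec` type, its field names, and the `ratios` helper are our own illustrative inventions, not anything Arm publishes; the A78's two NEON engines are inferred from the "twice as many" claim, and the cache figures are the stated maximums.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CoreSpec:
    """Headline figures as quoted in the article (hypothetical field names)."""
    name: str
    icache_fetch_per_cycle: int     # instructions fetched from the instruction cache
    mop_cache_fetch_per_cycle: int  # macro-operations fetched from the MOPS cache
    neon_engines: int               # 128-bit NEON SIMD engines
    max_l1_kb: int
    max_l2_kb: int
    max_l3_mb: int


X1 = CoreSpec("Cortex-X1", 5, 8, 4, 64, 1024, 8)
# A78 NEON count is inferred: the X1 is said to have twice as many, four in total.
A78 = CoreSpec("Cortex-A78", 4, 6, 2, 64, 512, 4)


def ratios(big: CoreSpec, small: CoreSpec) -> dict[str, float]:
    """Return big/small for every numeric field."""
    return {
        f.name: getattr(big, f.name) / getattr(small, f.name)
        for f in fields(CoreSpec)
        if f.name != "name"
    }


if __name__ == "__main__":
    for key, value in ratios(X1, A78).items():
        print(f"{key}: {value:.2f}x")
```

On these numbers the X1's front end is 25 to 33 per cent wider than the A78's, while its SIMD resources and maximum L2 and L3 capacities are doubled. That says nothing about real-world performance, which depends on clock speeds and workloads Arm's table does not cover.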

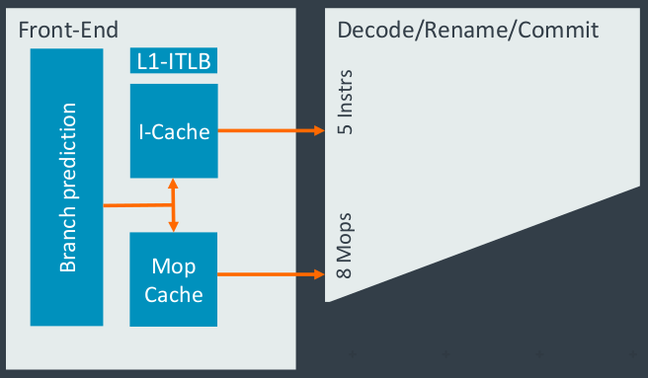

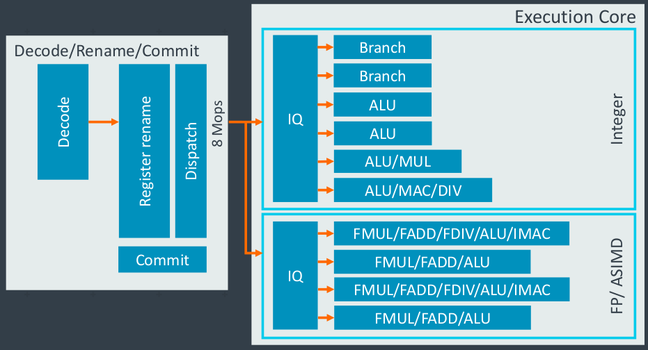

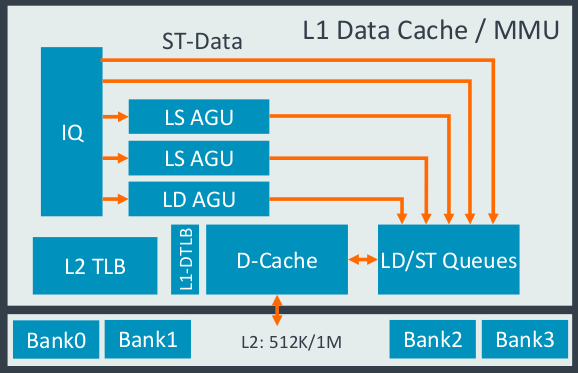

Arm instructions are decomposed in the CPU's decode pipeline stage into macro-operations, which are cached for future use. Below is Arm's breakdown of the X1's pipeline stages:

According to Arm, the Cortex-X1's L0 branch-target buffer has 96 entries with a zero-cycle bubble taken-branch latency, and there are 3,000 entries in the MOPS cache. This out-of-order execution CPU core has a 224-instruction window, and 2,000 entries in the L2 TLB.

Cortex-A78: Phew, we've got that out of the way, so onto the bog-standard, off-the-shelf Cortex-A78 that's a slight downgrade from the X1. Its branch predictor can support two taken branches per cycle, and this out-of-order execution CPU has a smaller window size compared to previous Cortex-A cousins. On the other hand, it has various enhancements, such as a 50-per-cent increase in memory load bandwidth over the A77, and double the memory store bandwidth, shifting 32 bytes per cycle. The A78 also has double the L2 interface bandwidth of the A77.
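For a feel of what a bytes-per-cycle figure means in practice, here is a back-of-the-envelope sketch in Python. It rests on assumptions: we read the 32 bytes per cycle as the A78's store bandwidth, infer 16 bytes per cycle for the A77 from the "double" claim, and pick a purely hypothetical 3.0GHz clock, since Arm's figures here do not include a frequency. The function and constant names are ours.

```python
def peak_bandwidth_gb_per_s(bytes_per_cycle: int, clock_ghz: float) -> float:
    """Peak bandwidth in GB/s: bytes per cycle times billions of cycles per second."""
    return bytes_per_cycle * clock_ghz


A78_STORE_BYTES_PER_CYCLE = 32  # from the article, read as store bandwidth (assumption)
A77_STORE_BYTES_PER_CYCLE = A78_STORE_BYTES_PER_CYCLE // 2  # inferred from "double"
ASSUMED_CLOCK_GHZ = 3.0  # hypothetical clock speed, not an Arm figure

a78_store = peak_bandwidth_gb_per_s(A78_STORE_BYTES_PER_CYCLE, ASSUMED_CLOCK_GHZ)
a77_store = peak_bandwidth_gb_per_s(A77_STORE_BYTES_PER_CYCLE, ASSUMED_CLOCK_GHZ)

if __name__ == "__main__":
    print(f"A78 peak store bandwidth at {ASSUMED_CLOCK_GHZ}GHz: {a78_store:.0f} GB/s")
    print(f"A77 peak store bandwidth at {ASSUMED_CLOCK_GHZ}GHz: {a77_store:.0f} GB/s")
```

These are theoretical per-core peaks between the core and its cache; sustained rates in a shipping chip will be lower and depend on the rest of the memory system.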

The A78 is a step up from the A77 introduced last year: nothing spectacularly different, besides the benefits from moving from 7nm to 5nm, just a whole lot of bandwidth improvements and changes to improve its efficiency.

Meanwhile, the Ethos-N78 is supposed to have twice the peak performance of the Ethos-N77, and require less RAM bandwidth by decompressing neural network weight and activation values fetched from memory. We're also told the Mali-G78 has 25 per cent "better performance" than the G77. It has redesigned fused multiply-add (FMA) engines for more efficient processing of graphics and machine-learning calculations, and can run its top-level circuitry at twice the clock frequency of its shader cores, which offers power savings and also means the high-level part of the GPU, which is running faster, can keep the shader engines fed with data to process.

By the time you read this, there should be more technical details about the new cores here on the Arm website. ®