You're stuck inside, gaming's getting you through, and you've $1,500 to burn. Check out Nvidia's latest GPUs

Kitchen table chat tries to sell you on the latest kit; AI devs might like it, too

Nvidia launched its GeForce RTX 30 series last night, its latest family of real-time ray-tracing graphics processors aimed primarily at PC gamers.

In a video apparently shot in his kitchen, CEO Jensen Huang introduced three new GPUs: the RTX 3070, RTX 3080, and RTX 3090. They're all fabricated on Samsung's 8nm process, and the biggest of the chips squeezes 28 billion transistors onto its die.

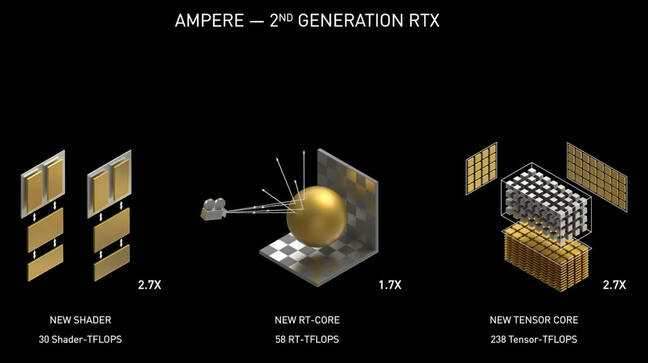

The RTX 30 series' Ampere architecture handles three core jobs: rendering graphics, processing machine-learning workloads, and running game engines. Oh, and it accelerates storage I/O, too.

You can watch the whole thing here.

The three GPUs differ in price, memory, and the maximum resolution at which they can render games:

- GeForce RTX 3070: The cheapest option, at $499, with 8GB of GDDR6 memory; it can run games at 1440p and 4K resolutions. Nvidia said it is 60 per cent faster than the previous-generation RTX 2070. It's expected to be available in October.

- GeForce RTX 3080: From $699, this one is the mid-range GPU. It boasts 10GB of faster GDDR6X memory running at 19Gbps per pin. Games will look crisp at 60 frames per second at 4K resolution, we're told. Nvidia claims the RTX 3080 is up to twice as fast as the RTX 2080, and it will be on sale from September 17.

- GeForce RTX 3090: Nvidia’s top-of-the-range ray-tracing GPU, nicknamed the BFGPU, meaning Big, er, Ferocious GPU. It's aimed at hardcore gamers and streamers willing to spend $1,499 per card. The RTX 3090 comes with 24GB of GDDR6X memory and is apparently up to 50 per cent faster than the Titan RTX, which was once billed as the most advanced real-time ray-tracing GPU and is based on Nv's previous Turing architecture. It's hot in more ways than one, though its cooling system is 10 times quieter than the previous series' and keeps the card up to 30C cooler, according to Nvidia. It can render games at 60 FPS at 8K resolution.
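A little arithmetic helps put the memory and resolution figures above in perspective. The following is a minimal Python sketch; the helper names are hypothetical, and the 320-bit memory bus on the RTX 3080 is an assumption taken from Nvidia's published spec rather than from this article. 4K and 8K are taken to mean 3840×2160 and 7680×4320.

```python
def memory_bandwidth_gb_per_s(data_rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in gigabytes per second.

    Each of the `bus_width_bits` data pins moves `data_rate_gbps_per_pin`
    gigabits per second; dividing by 8 converts bits to bytes.
    """
    return data_rate_gbps_per_pin * bus_width_bits / 8


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels the GPU must produce each second at a given resolution and frame rate."""
    return width * height * fps


# RTX 3080: 19Gbps per pin comes from the article; the 320-bit bus width
# is an ASSUMPTION from Nvidia's spec sheet, not stated in the article.
print(f"RTX 3080 peak bandwidth: {memory_bandwidth_gb_per_s(19, 320):.0f} GB/s")

uhd_4k = pixels_per_second(3840, 2160, 60)  # 4K at 60 FPS (RTX 3080 claim)
uhd_8k = pixels_per_second(7680, 4320, 60)  # 8K at 60 FPS (RTX 3090 claim)
print(f"4K60: {uhd_4k:,} pixels/s")
print(f"8K60: {uhd_8k:,} pixels/s ({uhd_8k // uhd_4k}x the 4K load)")
```

Running it prints a peak of 760 GB/s for the RTX 3080 and shows that 8K at 60 FPS means producing four times as many pixels per second as 4K at 60 FPS. Peak bandwidth is a theoretical ceiling; real workloads see less.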

The cards come with a port for HDMI 2.1 so gamers can connect their systems to 8K HDR TVs.

Huang said these beefy yet tiny slabs of silicon delivered Nvidia’s “greatest generational leap ever,” compared to its previous Turing real-time ray-tracing chips. “Nvidia RTX fuses programmable shading, ray tracing and AI for developers to create entirely new worlds,” he gushed.

The GPUs run Nvidia's Deep Learning Super Sampling (DLSS) software, which uses AI techniques to reconstruct a high-resolution image from a frame rendered at a lower resolution, freeing up GPU time for real-time ray tracing. Epic, the creator of the popular (and controversial) third-person shooter Fortnite, said it will use DLSS to render the game's graphics. Other programmers can presumably tap into the chips' performance via Nvidia's CUDA programming framework. ®
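DLSS saves work by rendering fewer pixels and letting a neural network fill in the rest. The following is a minimal sketch of that pixel-count bookkeeping only, not Nvidia's DLSS algorithm. The 1440p internal resolution is a hypothetical example, since the article doesn't say which internal resolutions DLSS uses, and `shaded_fraction` is an illustrative helper name.

```python
from fractions import Fraction


def shaded_fraction(internal: tuple[int, int], output: tuple[int, int]) -> Fraction:
    """Share of the output image's pixels that are actually rendered before upscaling."""
    (iw, ih), (ow, oh) = internal, output
    return Fraction(iw * ih, ow * oh)


# HYPOTHETICAL example: render internally at 1440p, upscale to 4K output.
frac = shaded_fraction((2560, 1440), (3840, 2160))
print(f"Rendered: {frac} of the output pixels ({float(frac):.0%})")
```

In this example the GPU shades 4/9 of the pixels, about 44 per cent, and the upscaler is responsible for producing the rest of the 4K image.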