This article is more than 1 year old

SiFive reminds everyone you don't always need to offload vector math: Here's a RISC-V CPU that can process it, too

VIU75 core is 64-bit, runs Linux, supports RV vector extension

SiFive, in its ongoing march to help make RISC-V a mainstream processor architecture, will today launch its VIU75 CPU core capable of accelerating vector math.

It's trendy at the moment to offload vector-heavy operations, such as AI algorithms and related analytics, to non-CPU processing cores, typically GPUs and dedicated machine-learning engines, to speed up workload execution. Yet one mustn't forget that Intel and AMD CPU cores have AVX, and Arm has NEON and SVE, to efficiently operate on arrays of data.

In other words, you don't have to offload all vector calculations to an outside unit – if you so wish, it can be done rapidly using vector instructions provided by a CPU core, if such features are available. Doing so avoids having to shunt data out to a separate unit and copy back the result, usually over some sort of bus, and also avoids having to build a separate accelerator into your system-on-chip or computer design. If you have enough CPU cores to take the load, getting it done without any extra specialist hardware may meet your needs. Sometimes, it makes more sense to use a separate unit.

In any case, here we are with SiFive's VIU75: a 64-bit RISC-V (RV64GCV) CPU core that runs application code and implements the architecture's vector instruction set extension to efficiently process arrays of data.

We note that right now RISC-V's vector extension, sometimes shortened to RVV, is still in draft form, at version 0.9, and is yet to be signed off by the architecture's official body, RISC-V International. However, SiFive seems confident that by the time you come to license and use the VIU75 in your system-on-chip, the core will support a ratified version 1.0 of the extension.

Once ratified, the extension should mean that all RISC-V CPU cores implementing it are compatible at the binary executable level: code that runs on a SiFive RV64GCV core will run on, say, an Andes RV64GCV core. A number of the RISC-V architecture's cofounders work at SiFive, so they probably know what they're doing here.

Georg Sauthoff has written a fantastic guide to writing RVV code, if you want to tackle the feature at the assembly level. Having said that, you don't need to go that low level to make use of the vector extension: intrinsics have been developed for the GCC and LLVM toolchains, and are expected to be upstreamed, meaning you can access the instruction set extension from high-level code. And LLVM, for one, will autovectorize your code into RVV instructions, meaning you can just write normal, portable source and LLVM will automatically use the right vector instructions at compile time when targeting RVV. LLVM inventor Chris Lattner now works at SiFive, so again, they probably know what they're doing here.
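To illustrate the autovectorization point, here's a minimal sketch of the kind of plain C loop a compiler can turn into vector code. It's the classic SAXPY kernel and contains nothing RISC-V-specific. Whether it actually gets vectorized depends on your compiler version and flags; with a recent toolchain, something along the lines of `clang -O3 --target=riscv64-linux-gnu -march=rv64gcv` when targeting RVV, though treat those flags as an assumption rather than a recipe.

```c
#include <stddef.h>

/* Plain, portable C: y[i] = a * x[i] + y[i] (the classic SAXPY kernel).
 * Nothing here is RISC-V specific. When compiled for a core with the
 * vector extension, an autovectorizing compiler may turn this loop into
 * RVV instructions; on x86 or Arm the same source may become AVX or
 * NEON/SVE code instead. `restrict` promises that x and y don't overlap,
 * which makes it easier for the compiler to vectorize safely. */
void saxpy(size_t n, float a, const float *restrict x, float *restrict y)
{
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}
```

Compiling with `-S` and looking for vector instructions such as `vsetvli` in the output is one way to check whether the loop was vectorized.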

The European Processor Initiative, which is designing RISC-V-based accelerators for supercomputers and worked with SiFive on RVV software support, has also published an overview of the extension.

As you'd expect from a vector extension, it's designed to quickly feed in array data, operate on its elements simultaneously, and output the results in one go. An article by processor design doyen David Patterson and SiFive's chief engineer Andrew Waterman argues that RVV is more elegant and efficient than the corresponding SIMD instruction sets of x86 and MIPS.

A key quirk of RVV is that it supports variable-length vectors, and is generally more flexible than other architectures: with RISC-V you're not fixed to 128-, 256- or 512-bit vector widths, for instance. That means you're not necessarily forced to compute the last few elements of an array using scalar instructions, or to discard results, if the array's length isn't an exact multiple of some fixed vector width. This reduces the complexity of software routines.
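The RVV approach is usually called strip-mining: on each pass, the code asks the hardware how many elements it will handle (the real `vsetvli` instruction returns a count up to the hardware's maximum), so the final, shorter chunk goes through the same loop. The sketch below models that idea in portable C. `HW_VLMAX` and `set_vl()` are hypothetical stand-ins for the hardware, not real RVV intrinsics.

```c
#include <stddef.h>

/* Hypothetical: pretend the hardware handles up to 8 32-bit elements per
 * vector instruction (e.g. 256-bit registers). Real RVV code doesn't
 * hard-code this; vsetvli reports it at run time. */
#define HW_VLMAX 8

/* Stand-in for vsetvli: grant min(remaining, HW_VLMAX) elements. */
static size_t set_vl(size_t remaining)
{
    return remaining < HW_VLMAX ? remaining : HW_VLMAX;
}

/* Strip-mined vector add, c = a + b. Each pass handles vl elements, and
 * the inner loop stands in for a single vector instruction. The last,
 * shorter chunk uses the same code path, so no scalar tail loop is needed.
 * Returns the number of passes (modelled vector instructions) used. */
size_t vec_add(size_t n, const int *a, const int *b, int *c)
{
    size_t passes = 0;
    for (size_t i = 0; i < n; ) {
        size_t vl = set_vl(n - i);
        for (size_t j = 0; j < vl; j++)
            c[i + j] = a[i + j] + b[i + j];
        i += vl;
        passes++;
    }
    return passes;
}
```

With 19 elements, this takes three passes of 8, 8 and 3 elements. A fixed-width SIMD loop would typically do two full passes and then mop up the remaining three elements with separate scalar or masked code.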

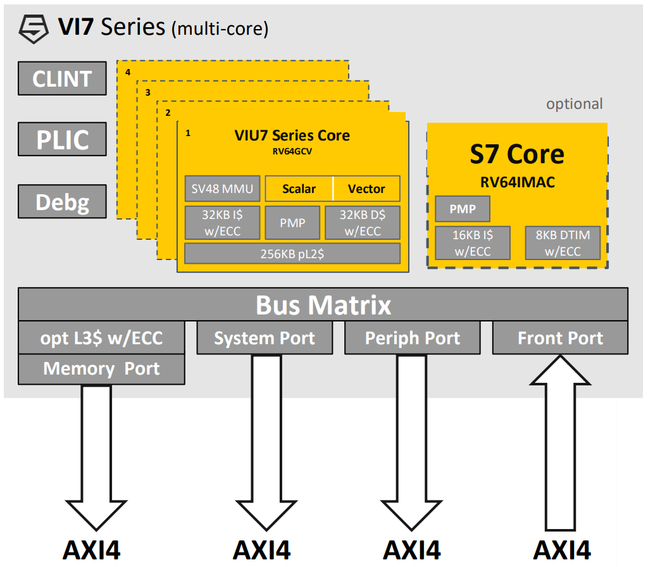

Besides all this, VIU75 is a standard RISC-V application core that can run Linux and other operating systems built for the architecture, has an MMU (SV39 or SV48), and supports the usual privilege modes: machine mode for firmware-level code, supervisor mode for kernels, and user mode for applications. It's described as having a modest eight-stage, dual-issue, in-order pipeline with a decoupled vector unit that can operate on up to 256 bits per clock cycle using 8- to 64-bit data types, which can be floating point, fixed point, or integers. Below is an example block diagram of a system-on-chip using four VIU75 CPU cores alongside an optional non-RVV 64-bit core reserved for system maintenance tasks.

CLINT and PLIC are the interrupt controllers, Debg is the debugging interface, and PMP is physical memory protection, an optional extra layer of hardware-enforced isolation that restricts which physical memory regions less-privileged software on each core can access.

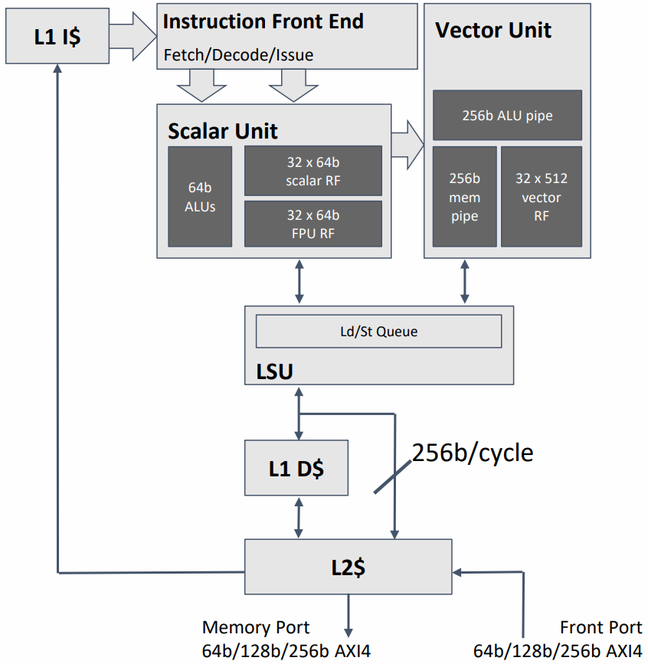
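To put the 256-bits-per-clock figure in concrete terms, the arithmetic is simply the datapath width divided by the element width: 32 8-bit, 16 16-bit, eight 32-bit or four 64-bit elements per cycle. The helper below is a trivial sketch of that calculation; it describes peak throughput, assuming the vector unit is kept fully fed, not what real code will sustain.

```c
#include <assert.h>

/* Peak elements processed per clock when a datapath of datapath_bits is
 * filled with elements of elem_bits each. Assumes the element width
 * divides the datapath evenly, which holds for 8/16/32/64-bit types and
 * the 256-bit figure quoted for the VIU75's vector unit. */
unsigned elems_per_cycle(unsigned datapath_bits, unsigned elem_bits)
{
    assert(elem_bits > 0 && datapath_bits % elem_bits == 0);
    return datapath_bits / elem_bits;
}
```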

Below is a view inside each VIU75 core. We're told it takes two cycles to complete one 512-bit vector operation, and that the L2 cache is treated as primary memory with no load-to-use penalty. SiFive suggests using Xilinx's Virtex UltraScale+ VCU118 FPGA board to evaluate the CPU design. This kit comes with 4GB of RAM, an SD card slot, USB, PCIe, and so on, as well as a substantial FPGA to implement the cores, though bear in mind this is professional-grade gear: it'll cost you seven grand.
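The two-cycle figure lines up with the 256-bit datapath: 512 / 256 = 2. The same back-of-the-envelope sum gives a lower bound on how long a whole array takes; for example, 1,000 32-bit floats is 32,000 bits, or 125 cycles at 256 bits per clock. The sketch below assumes that 256-bit width (the `DATAPATH_BITS` constant is our own illustration, not a SiFive parameter) and ignores pipeline latency, memory stalls and instruction issue, so it's a best-case estimate only.

```c
/* Hypothetical model constant: bits the vector unit processes per clock,
 * taken from the 256-bit figure quoted for the VIU75. */
#define DATAPATH_BITS 256u

/* Best-case cycles to push total_bits through the vector datapath,
 * rounding up because a partially filled cycle still costs a cycle.
 * Ignores pipeline latency, memory stalls and issue limits. */
unsigned long peak_cycles(unsigned long total_bits)
{
    return (total_bits + DATAPATH_BITS - 1) / DATAPATH_BITS;
}
```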

SiFive will present its VIU75 core today at this year's Linley Fall Processor Conference. Andes Technology will also describe its NX27V out-of-order vector-capable RISC-V processor core, said to be able to hit 96 GFLOPS. ®