
Six months after A100 super-GPU's debut, Nvidia doubles memory, ups bandwidth

Hardware aimed at supercomputers, servers, mega-workstations

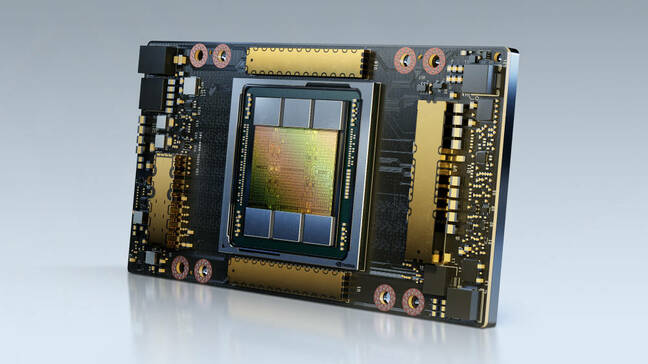

Nvidia on Monday upped the memory specs of its Ampere A100 GPU accelerator, which is aimed at supercomputers and high-end workstations and servers, and unveiled InfiniBand updates.

Compared to the A100 chip unveiled in May, the new version doubles its maximum built-in RAM to 80GB, and increases its memory bandwidth by 25 per cent to 2TB/s. These accelerators are designed to slot inside Nvidia's HGX systems, or to be fitted as PCIe cards in other boxes, and are expected to speed up math- and vector-heavy calculations in high-performance computing and supercomputing applications.

For a deep dive: our sister site The Next Platform has a breakdown of the updated A100, "Nvidia doubles down on AI supercomputing."

“Achieving state-of-the-art results in HPC and AI research requires building the biggest models, but these demand more memory capacity and bandwidth than ever before,” said Bryan Catanzaro, vice president of applied deep learning research at Nvidia. “The A100 80GB GPU provides double the memory of its predecessor, which was introduced just six months ago, and breaks the 2TB per second barrier, enabling researchers to tackle the world’s most important scientific and big data challenges.”

Other than the memory and bandwidth increases, the 80GB version is pretty much the same as the 40GB one. It has a peak Tensor Core performance of 19.5 TFLOPS at supercomputer-level FP64 precision, 312 TFLOPS at TF32 for training general AI models, and 1,248 TOPS for INT8 inference, the latter two figures with sparsity enabled. It can transfer up to 600GB per second of data to other connected GPUs using Nvidia's third-generation NVLink.

You can also get your hands on this gear in the form of DGX Station A100 workgroup servers. A box containing four or eight 80GB A100s will thus sport 320GB or 640GB of GPU memory. If you have an earlier station with 40GB A100s, you can replace them with 80GB chips starting from next year. In a briefing with reporters, however, Nvidia did not reveal how much this upgrade would cost. The servers also contain a 64-core AMD Epyc processor, up to 512GB of system memory, 1.92TB of internal storage for the OS and up to 7.68TB for applications and data, and multiple ports for Ethernet and displays. Again, Nvidia didn’t say how much one of these stations will set you back; they’re expected to start shipping early next year.

DGX SuperPODs can be created using 20 to 140 DGX A100 systems. The Cambridge-1, a British supercomputer focused on healthcare research, for example, will be made of 80 DGX A100 systems using 80GB A100s.
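For those who like to check the sums, here is a back-of-the-envelope sketch of the GPU memory totals quoted above. The 80GB per-GPU figure and the four- and eight-GPU boxes come from the article; the function names are made up for illustration, and the eight-GPUs-per-system figure used for Cambridge-1 is an assumption, not something Nvidia stated in this announcement.

```python
GPU_MEMORY_GB = 80  # per-GPU memory of the updated A100, as reported above


def total_gpu_memory_gb(num_gpus: int, per_gpu_gb: int = GPU_MEMORY_GB) -> int:
    """Aggregate GPU memory, in GB, for one box holding num_gpus accelerators."""
    return num_gpus * per_gpu_gb


def cluster_gpu_memory_gb(num_systems: int, gpus_per_system: int) -> int:
    """Aggregate GPU memory, in GB, across num_systems identical boxes."""
    return num_systems * total_gpu_memory_gb(gpus_per_system)


# The four- and eight-GPU boxes mentioned in the article.
for gpus in (4, 8):
    print(f"{gpus} x {GPU_MEMORY_GB}GB = {total_gpu_memory_gb(gpus)}GB")

# Cambridge-1: 80 systems, ASSUMING eight GPUs per system (our assumption).
print(f"Cambridge-1 (assumed 8 GPUs/system): {cluster_gpu_memory_gb(80, 8)}GB")
```

Under that eight-GPU assumption, Cambridge-1 would come to 51,200GB of GPU memory in total; with a different GPU count per system the figure scales accordingly.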

Nvidia also announced the Mellanox NDR 400 InfiniBand interconnect, which offers “3x the switch port density and boosts AI acceleration power by 32x,” we're told.

Microsoft will be the first to use the technology, on its Azure platform. “In AI, to meet the high-ambition needs of AI innovation, the Azure NDv4 VMs also leverage HDR InfiniBand with 200Gb/s per GPU, a massive total of 1.6Tb/s of interconnect bandwidth per VM, and scale to thousands of GPUs under the same low-latency InfiniBand fabric to bring AI supercomputing to the masses,” said Nidhi Chappell, head of product, Azure HPC and AI at Microsoft.
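The figures in that quote can be sanity-checked with a little arithmetic. The sketch below takes the 200Gb/s-per-GPU and 1.6Tb/s-per-VM numbers from Chappell's statement and works out how many GPUs per VM they imply; the function name is hypothetical, and the result is an inference from the quoted numbers rather than a spec Microsoft gave here. Note the units are bits, not bytes.

```python
PER_GPU_GBITS = 200    # Gb/s of InfiniBand bandwidth per GPU, from the quote
PER_VM_GBITS = 1_600   # 1.6Tb/s per VM, expressed in Gb/s


def implied_gpus_per_vm(per_vm_gbits: int, per_gpu_gbits: int) -> int:
    """Number of GPUs per VM implied by the per-VM and per-GPU bandwidth."""
    if per_gpu_gbits <= 0:
        raise ValueError("per-GPU bandwidth must be positive")
    if per_vm_gbits % per_gpu_gbits != 0:
        raise ValueError("per-VM bandwidth is not a whole multiple of per-GPU")
    return per_vm_gbits // per_gpu_gbits


gpus = implied_gpus_per_vm(PER_VM_GBITS, PER_GPU_GBITS)
print(f"Implied GPUs per VM: {gpus}")

# Converting bits to bytes (8 bits per byte) for comparison with GB/s figures.
print(f"Per-VM interconnect: {PER_VM_GBITS / 8:.0f}GB/s")
```

In other words, the quoted totals line up with eight GPUs per VM, and 1.6Tb/s works out to 200GB/s once converted to bytes.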

The A100 has competition in the form of AMD's MI100 accelerator, which launched this week. ®