
A little bit of TLC: How IBM squeezes 16,000 write-erase cycles from QLC flash

Healthy block work paves the way

IBM says it has managed to coax TLC-class endurance and performance from cheaper QLC flash chips, with customers of the company's FlashSystem 9200 all-flash arrays getting the benefits.

No one else can do this, according to Andy Walls, IBM Fellow and CTO for flash storage products.

Quad-level cell flash is cheaper to make than triple-level cell flash and increases storage density, but at the cost of performance and endurance. QLC stores 4 bits of data per cell, which requires distinguishing 16 voltage levels, twice the eight that TLC needs. This lengthens IO operations, so reads and writes take longer than with TLC. It also shortens endurance, expressed as write-erase cycles.
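The relationship between bits per cell and voltage levels is simply a power of two. The short Python sketch below illustrates it; the function and dictionary names are ours, chosen for illustration only.

```python
def voltage_states(bits_per_cell: int) -> int:
    """Number of distinct charge levels a cell must hold to store this many bits."""
    return 2 ** bits_per_cell


# Common NAND cell types and the number of bits each stores per cell.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}

for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s) per cell, {voltage_states(bits)} voltage levels")
```

Each extra bit doubles the number of levels that must fit in the same voltage window, which is why QLC is slower to program and read than TLC.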

To overcome this, IBM has developed a controller for its proprietary FlashCore Module (FCM) drives that monitors and classifies blocks of flash for health and longevity. Data that is written most frequently is placed on the healthiest flash blocks.
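IBM has not published its algorithms, so the following Python sketch is only a toy illustration of the general idea of pairing the most frequently written data with the healthiest blocks. The `health` score, the `writes_per_day` metric and every name in it are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FlashBlock:
    block_id: int
    health: float  # hypothetical score: higher means expected to survive more cycles


@dataclass
class DataExtent:
    name: str
    writes_per_day: float  # hypothetical measure of how often the data changes


def place_by_health(extents, blocks):
    """Assign the most frequently written extents to the healthiest blocks."""
    if len(extents) > len(blocks):
        raise ValueError("not enough free blocks for the extents")
    hottest_first = sorted(extents, key=lambda e: e.writes_per_day, reverse=True)
    healthiest_first = sorted(blocks, key=lambda b: b.health, reverse=True)
    return {e.name: b.block_id for e, b in zip(hottest_first, healthiest_first)}


blocks = [FlashBlock(0, 0.55), FlashBlock(1, 0.95), FlashBlock(2, 0.75)]
extents = [
    DataExtent("archive", 0.1),
    DataExtent("db-log", 500.0),
    DataExtent("user-files", 12.0),
]

placement = place_by_health(extents, blocks)
print(placement)
```

Here the busy `db-log` extent lands on block 1, the healthiest, while the rarely changed `archive` goes to block 0, the weakest. A real controller would also have to predict health early in a block's life and re-evaluate it over time, which this sketch does not attempt.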

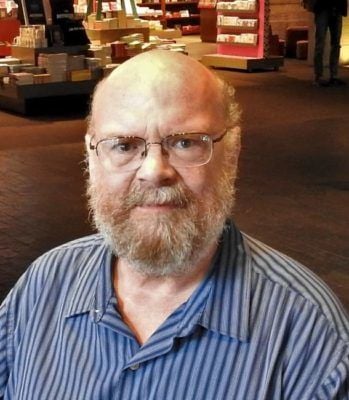

Andy Walls: With QLC we got 16,000 write erase cycles

"Our research guys have developed algorithms that can tell early on how healthy a flash block is going to be," Walls said in a Moor Insights and Strategy fireside chat earlier this month. "By knowing that early I can put data that's changed the most on the healthiest blocks, [and] data that's changed the least on less healthy blocks. We get about a two times increase in endurance by doing that.

"To overcome the performance [limitations], we developed… smart data placement. So early on in the life of the flash we will define it as SLC. As it is SLC, we can hold about 20 per cent of the capacity of the device as SLC only. If you're using compression, that we have built in, and you're getting 3:1 compression ratio: oh my goodness, that's like 60 per cent of the capacity now. You're going to hold a lot of data now and it's just enough SLC you end up getting better performance.

"As you convert to QLC we use Smart Data Placement; we'll put the data that's read the most in the fastest pages. All these things combined help us to overcome the negative effect of QLC."
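Walls's 60 per cent figure follows from multiplying the two numbers he quotes. The Python snippet below works through that arithmetic, on our reading that the 20 per cent SLC region holds compressed data and that the 3:1 ratio applies uniformly; the function name is ours.

```python
def effective_slc_share(slc_fraction: float, compression_ratio: float) -> float:
    """Logical data held in the SLC region, as a fraction of device capacity."""
    return slc_fraction * compression_ratio


# Figures quoted by Walls: about 20 per cent of capacity as SLC, 3:1 compression.
share = effective_slc_share(0.20, 3.0)
print(f"Effective SLC-resident share: {share:.0%}")
```

With a lower compression ratio the SLC region would cover proportionally less of the data, so the performance benefit depends on how compressible the workload is.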

SSDs are logically made up of flash blocks. These in turn are made up of pages, which are the smallest elements (groups of cells) that can be read in an SSD.

"With TLC," Walls said, "we put [SSDs] on test and got 18,000 write erase cycles; with QLC we got 16,000 write erase cycles. Nobody else is able to do that. That’s two drive writes per day."
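Walls does not say how 16,000 cycles translates into two drive writes per day. The Python sketch below shows one common way to relate the two, assuming a five-year service period and lumping every overhead (garbage collection, overprovisioning and so on) into a single write amplification factor. Both the period and the factor are our assumptions, not IBM figures.

```python
DAYS_PER_YEAR = 365


def drive_writes_per_day(pe_cycles: float, service_years: float,
                         write_amplification: float) -> float:
    """Full-drive host writes per day that a given cycle budget can sustain."""
    return pe_cycles / (service_years * DAYS_PER_YEAR * write_amplification)


def implied_write_amplification(pe_cycles: float, service_years: float,
                                dwpd: float) -> float:
    """Overhead factor that reconciles a cycle budget with a quoted DWPD rating."""
    return pe_cycles / (service_years * DAYS_PER_YEAR * dwpd)


# Assumed five-year period; 16,000 cycles and 2 DWPD are the figures Walls quotes.
ideal = drive_writes_per_day(16_000, 5, write_amplification=1.0)
implied = implied_write_amplification(16_000, 5, dwpd=2.0)
print(f"DWPD with no overhead: {ideal:.2f}")
print(f"Overhead factor implied by 2 DWPD: {implied:.2f}")
```

Under these assumptions the cycle budget would allow nearly nine drive writes per day with no overhead at all, so a two-DWPD rating leaves a margin factor of a little over four. How IBM actually derives its rating is not stated in the source.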

Ordinary QLC has 1,000 or so write erase cycles. Overprovisioning can lift this number, but nowhere near IBM's 16,000 level.

SCM, computational storage and composability

In the fireside chat Walls touched on storage-class memory (SCM) and computational storage. Noting that the FlashSystem 9200 FCMs include on-board accelerators to offload the host CPU, he envisaged expanding their functions.

"Today you have servers and you have storage. You can put accelerators on SSDs, those FlashCore Modules, like compression, like simple searches... and other kinds of AI functionality, and only bring in the data you need (to the server CPU so) you can be a lot more efficient."

The FlashSystem 9200 also supports Intel Optane in SSD form where it is used to hold – and provide faster access to – metadata for functions such as deduplication.

Walls predicts that SCM will be configured as external memory (DIMMs) accessed by servers across the upcoming CXL bus. This provides memory coherency, enabling the SCM to be combined with server DRAM. He said the "CXL bus … opens up [a] whole area of new architectures – you can almost have composable systems – tear down and create new servers instantly."

The Register spoke to Jim Handy of semiconductor industry analyst Objective Analysis, who said of the feat: "These more sophisticated controllers will always be able to get more from less. At the lowest level that means better ECC (moving from BCH to Hamming to LDPC*), to better data placement, to compression, and eventually to systems that are aware of the quality of each NAND block and manage data to align with that health. IBM appears to be there today.

"Something that helps a lot is that NAND makers don't want to test endurance so they specify it extremely conservatively. Endurance tests take considerable time to perform. An example of how conservatively they specify their chips can be found in an old SNIA document I helped to write that had fantastic endurance data measured by Fusion-io. [If you look at figure 2], the Bit Error rate rose to 10⁻⁶ only after almost 3 million Program/Erase (P/E) cycles for one vendor and about 7 million for the other, even though they were both specified at 100,000 cycles."

QLC tech leaps in the enterprise

Eric Burgener, IDC research vice president for Enterprise Infrastructure Practice, told The Register: "The two key issues with QLC are endurance and slow write performance, and there are ways to get around both of those issues in software/firmware or through some form of write affinity (ie all writes go to a small layer of much more durable solid-state media like storage class memory like VAST Data does and then later get de-staged to QLC in a manner which very much minimizes writes and garbage collection) that lets QLC economics move more into enterprise storage.

"We have already seen announcements of enterprise storage systems built with QLC from Pure Storage and NetApp, although those are not necessarily targeted at the same types of workloads as IBM’s 9200 (which has a bit more of a primary storage focus), and we are definitely going to see more; Pure’s system (FlashArray//C) is more targeted at less write intensive secondary workloads (backup, test/dev, DR), as is NetApp’s FAS500f.

"At this point, through the use of write coalescing and other write minimization techniques, QLC can meet the typical five-year enterprise storage life cycle, but due to enhancements like on Pure’s arrays and much broader use of server-based, software-defined storage platforms, non-disruptive multi generational technology refresh is becoming more widely viable to extend the useful life of systems beyond five years – it will be interesting to see if QLC will continue to be viable in write intensive enterprise storage environments over periods much longer than five years. I would guess that vendors will continue to improve the efficiency with which they write to solid-state media so that this will not be an issue for QLC."

The IDC analyst said that within two years he expects "we’ll start to see some secondary storage AFAs built with XLC (penta level cell) media, dropping the $/GB costs even further."

A little goes a long way

Objective Analysis's Handy noted that NAND lasts longer "if you write to it 'Just So', and overprovisioning provides a nonlinear endurance benefit – a little bit of extra flash can provide significantly better endurance, and a little bit more will dramatically enhance that number. It costs more to do this, but in IBM’s case it seems to be worth it."

He opined: "So you have three mechanisms that can boost endurance: better controller, better understanding of the medium, and hearty overprovisioning. I suspect that IBM was able to get 16,000 P/E endurance from QLC by providing a healthy dose of all three. They are probably subsidizing the improvements through the money they save by moving from TLC to QLC. Spend less on flash by spending more on the controller and characterization.

"Whenever there's a trailblazer in technology, their work eventually filters down to lower levels, simply because the cost gets driven out over time.

"Although few companies are likely to do characterization, since it has a high human involvement, which is very costly, all of the other techniques that IBM is using should eventually be found in everyday data centre SSDs and eventually in client SSDs." ®

* Various algebraic and graph-based code encoding techniques used to defend against data corruption.