Nvidia shrinks GPUs to help squeeze AI into your data center, make its VMware friendship work

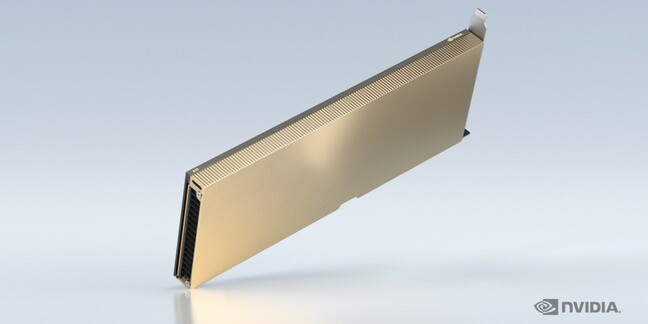

Creates two new mini models because it’s assumed you won’t build silos to host huge hot monsters

GTC Nvidia has created a pair of small data-center-friendly GPUs because it doesn’t think customers will get into AI acceleration unless they can use the servers they already operate.

The new models – the A10 and A30 – require one and two full-height full-length PCIe slots, respectively. Both employ the Ampere architecture Nvidia uses on its other graphics processors. But both are rather smaller than the company’s other GPUs, and that matters in the context of the recently launched AI Enterprise bundle that Nvidia packages exclusively on VMware’s vSphere.

Before VMware got excited about private and hybrid clouds, it was all-in on server consolidation: turning your server fleet into a logical pool of resources instead of tightly coupling servers to specific applications.

Manuvir Das, Nvidia’s head of enterprise computing, told The Register that Nvidia has figured out that in practice big, hot GPUs end up in dedicated hardware that more-or-less tightly couples AI to dedicated servers. Das and Nvidia would rather you run AI Enterprise on the hardware you already have and/or the hardware you buy most of – which is 1U and 2U servers.

Hence the need for smaller GPUs, both to fit into common servers and in recognition that on-prem data centres are under pressure to be densely packed. Most big server-makers (Dell, Lenovo, H3C, Inspur, QCT and Supermicro) are aboard with the A10 and A30, and Nvidia swears its EGX containerised ML platform will be right at home in this environment.

Das said the new GPUs will add $2,000 or $3,000 to the cost of a $12,000 server. Nvidia offered the following specs for the A10:

- TDP: 150W

- FP32: 31.2 teraFLOPS

- BFLOAT16 Tensor Core: 125 teraFLOPS | 250 teraFLOPS*

- FP16 Tensor Core: 125 teraFLOPS | 250 teraFLOPS*

- INT8 Tensor Core: 250 TOPS | 500 TOPS*

- GPU bandwidth: 600GB/s

- GPU memory: 24GB GDDR6

Here are specs for the A30:

- TDP: 165W

- FP32: 10.3 teraFLOPS

- BFLOAT16 Tensor Core: 165 teraFLOPS | 330 teraFLOPS*

- FP16 Tensor Core: 165 teraFLOPS | 330 teraFLOPS*

- INT8 Tensor Core: 330 TOPS | 661 TOPS*

- GPU bandwidth: 933GB/s

- GPU memory: 24GB HBM2 (on-package)

*With Sparsity
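
For a rough sense of how the two cards compare, the listed figures can be reduced to a couple of ratios. The snippet below is only a back-of-the-envelope sketch: the numbers are copied from the spec lists and Das's price estimate above, while the names `SPECS`, `per_watt` and `price_uplift` are made up for illustration. It also assumes that peak throughput divided by TDP is a fair proxy for efficiency, which real workloads may not bear out.

```python
# Dense (non-sparse) figures copied from the spec lists above.
SPECS = {
    "A10": {"tdp_w": 150, "fp32_tflops": 31.2, "fp16_tflops": 125, "bandwidth_gbs": 600},
    "A30": {"tdp_w": 165, "fp32_tflops": 10.3, "fp16_tflops": 165, "bandwidth_gbs": 933},
}


def per_watt(card: str, metric: str) -> float:
    """Divide a card's peak figure for `metric` by its TDP in watts."""
    spec = SPECS[card]
    return spec[metric] / spec["tdp_w"]


def price_uplift(server_usd: float, gpu_usd: float) -> float:
    """Return the GPU's cost as a fraction of the base server price."""
    return gpu_usd / server_usd


if __name__ == "__main__":
    for card in SPECS:
        fp16 = per_watt(card, "fp16_tflops")
        fp32 = per_watt(card, "fp32_tflops")
        print(f"{card}: {fp16:.2f} FP16 teraFLOPS/W, {fp32:.3f} FP32 teraFLOPS/W")

    # Das's estimate: $2,000 or $3,000 on top of a $12,000 server.
    for gpu_usd in (2_000, 3_000):
        print(f"${gpu_usd:,} GPU: {price_uplift(12_000, gpu_usd):.0%} uplift")
```

On these peak numbers the A30 leads on Tensor Core throughput per watt and the A10 on FP32 per watt, and either card adds roughly a sixth to a quarter to the price of the server.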

Nvidia said the A10 will be available this month and the A30 later this year; bear in mind Nvidia’s wares are often hard to find in this ongoing global silicon drought. ®

In other GTC news... Today's the start of Nvidia's 2021 GPU Technology Conference, and as such it has a flurry of things to announce besides the A10 and A30. Here's a couple of highlights:

- Nvidia has designed an Arm-based server-grade processor called Grace, which will use a set of future Arm Neoverse CPU cores. It's aimed at supercomputers and massive AI workloads.

- It also talked up its BlueField-3 DPU, which is designed for so-called smart NICs that accelerate software-defined networking, storage and security functions in hardware, away from a machine's host processors.