HPE debuts storage-as-a-service platform based on a new storage array: Alletra

The race to keep up with the AWSs and Microsofts of this world.

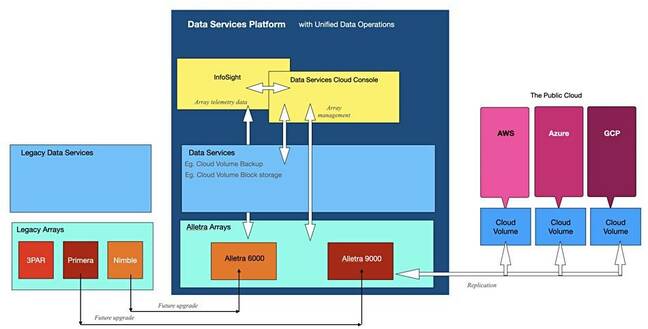

HPE is trying to up its GreenLake public cloud-like game by launching a storage-as-a-service (SaaS) platform, with data service software abstraction layers running on new Alletra storage arrays.

The set-up includes a Data Services Cloud Console (DSCC) with API access to the Alletra arrays, so they can be managed in the same way a customer would look after AWS or Azure storage. That is not possible with HPE's existing storage kit available via GreenLake, which is managed directly by storage admin staff.

The DSCC provides access to Cloud Data Services, a suite of software subscription services which store, protect and move block data on the all-NVMe flash Alletra systems, and replicate data to Cloud Volumes located on or near public cloud regional data centres.

Tom Black, HPE Storage SVP and GM, claimed: "HPE is changing the storage game by bringing a full cloud operational model to our customers' on-premises environments. Bringing the cloud operational model to where data lives accelerates digital transformation, streamlines data management, and will help our customers innovate faster than ever before."

What is Alletra?

HPE's Alletra is a re-imagining of the Primera and Nimble arrays as the Alletra 9000 and 6000 product families respectively. Both provide on-premises block-level access to all-NVMe flash storage in a 4U chassis. Management, capacity, and the data services that use that capacity are all accessed through the online console, as if users were consuming public cloud storage capacity and data services.

The Alletra 9000 is for mission-critical use while the Alletra 6000 is a mid-range product. The 9000 has a code-base derived from the Primera arrays while the 6000’s code-base comes from the Nimble arrays.

The 9000, with active-active clustering, has a 100 per cent availability guarantee and features automatic failover across active sites. It can deliver more than 2 million IOPS and support up to 96 SAP HANA nodes with its multi-node, parallel system design.

The Alletra 9000 image is from an Alletra brochure, with the box actually labelled Primera A670. (The A670 is a four-controller node, 16-SSD slot chassis which can grow with expansion enclosures.) An Alletra 6000 image in the brochure uses the same Primera A670 shot.

The 6000 has a six nines (99.9999 per cent) availability level with app‑aware backup and recovery, both on-premises and in the cloud.
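
To put those availability figures in perspective, the arithmetic below converts an availability percentage into the downtime it permits per year, assuming a 365.25-day year. At six nines that works out to roughly 31.6 seconds a year, and a 100 per cent guarantee allows none. This is a back-of-envelope sketch only; it is not how HPE defines or measures its guarantees.

```python
# Seconds in an average (Julian) year of 365.25 days: 31,557,600.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def max_downtime_seconds(availability_percent: float) -> float:
    """Return the yearly downtime allowed by an availability percentage."""
    unavailable_fraction = 1 - availability_percent / 100
    return SECONDS_PER_YEAR * unavailable_fraction


for label, pct in [("five nines", 99.999), ("six nines", 99.9999), ("100 per cent", 100.0)]:
    print(f"{label}: {max_downtime_seconds(pct):.1f} seconds a year")
```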

Each of the two Alletra families comprises a sub-portfolio of systems, such as the Alletra 9080 or 6030, with varying capacity and performance. All are managed by the DSCC. Controller software upgrades are delivered from the HPE cloud and are invisible to users.

Deduplication increases the array's effective capacity and is backed by what HPE has termed its "Store More" pre-sales guarantee (a pledge to store more "data per raw terabyte of storage" than any other vendor's all-flash array). Users can switch data reduction on or off at volume level.
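
HPE has not said how Alletra implements deduplication. As a generic illustration of the idea, the toy store below fingerprints fixed-size blocks with SHA-256 and keeps one physical copy of each distinct block, with a per-volume switch loosely mirroring the on/off setting. The class name, the 4 KiB block size and the volume names are all hypothetical.

```python
import hashlib

BLOCK_SIZE = 4096  # hypothetical fixed block size for the illustration


class ToyDedupStore:
    """Toy block store that keeps one copy of each identical block.

    Deduplication can be switched on or off per volume.
    """

    def __init__(self, volume_dedupe: dict[str, bool]) -> None:
        self.volume_dedupe = volume_dedupe  # volume name -> dedupe on/off
        self.blocks: dict[str, bytes] = {}  # key -> physically stored block
        self.logical_blocks = 0             # blocks written by hosts

    def write(self, volume: str, data: bytes) -> None:
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            self.logical_blocks += 1
            if self.volume_dedupe[volume]:
                # Identical content hashes to the same key, so it is stored once.
                key = hashlib.sha256(block).hexdigest()
            else:
                # Data reduction off: every block gets a unique key and is stored.
                key = f"raw-{self.logical_blocks}"
            self.blocks.setdefault(key, block)

    def reduction_ratio(self) -> float:
        """Logical blocks written divided by blocks physically stored."""
        return self.logical_blocks / len(self.blocks)


store = ToyDedupStore({"vm-images": True, "scratch": False})
image = b"A" * BLOCK_SIZE * 3 + b"B" * BLOCK_SIZE  # 4 blocks, 2 distinct
store.write("vm-images", image)
store.write("vm-images", image)  # a second copy adds no new blocks
store.write("scratch", image)    # stored in full: dedupe is off here
print(store.logical_blocks, len(store.blocks), store.reduction_ratio())
```

This prints `12 6 2.0`: twelve blocks written, six stored. A real array would also reference-count blocks so they can be freed on overwrite or delete, which the sketch skips.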

HPE says Alletra arrays can support any workload, which The Reg took to mean applications running in bare metal servers, virtualized servers and containerised servers. In other words, Alletra can provision block storage to all three types of workload.

Business continuity is supported by pairing Alletra arrays across metropolitan distances and using synchronous replication to copy data. Two arrays then represent a single highly available repository to hosts.
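
A minimal model of synchronous replication, not HPE's implementation: a write is acknowledged to the host only after both arrays in the pair have committed it, so either copy can serve reads if the other site is lost. The `Array` and `SyncReplicatedVolume` classes are hypothetical; real products add a quorum witness, automatic failover and resynchronisation, which this sketch leaves out.

```python
class Array:
    """Hypothetical stand-in for one array in a metro-distance pair."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.online = True
        self.data: dict[int, bytes] = {}  # logical block address -> contents

    def commit(self, lba: int, block: bytes) -> bool:
        if not self.online:
            return False
        self.data[lba] = block
        return True


class SyncReplicatedVolume:
    """One host-facing volume backed by two arrays kept in lockstep."""

    def __init__(self, site_a: Array, site_b: Array) -> None:
        self.sites = (site_a, site_b)

    def write(self, lba: int, block: bytes) -> bool:
        # Synchronous replication: the host gets an acknowledgement only once
        # every copy has committed the write. The list forces both commits to
        # be attempted; partial-failure recovery is out of scope here.
        results = [site.commit(lba, block) for site in self.sites]
        return all(results)

    def read(self, lba: int) -> bytes:
        # Either surviving copy can serve reads, so the pair looks like one repository.
        for site in self.sites:
            if site.online and lba in site.data:
                return site.data[lba]
        raise KeyError(lba)


site_a, site_b = Array("site-a"), Array("site-b")
volume = SyncReplicatedVolume(site_a, site_b)
acked = volume.write(0, b"ledger")  # True: both sites hold the block
site_a.online = False               # simulate losing site A
print(acked, volume.read(0))        # read is served from site B
```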

Developers connect to Alletra via consistent APIs, whether on-premises or in the cloud. Through these they can dynamically provision persistent volumes, expand or clone volumes, and take snapshots of data for reuse.

HPE claims Alletra offers seamless, simple data mobility across clouds through replication to HPE Cloud Volumes, and says it is designed from the ground up for hybrid clouds. Data can be restored from Cloud Volumes to on-premises Alletra arrays with no egress fees.

Alletra array operations are monitored by HPE's InfoSight, whose predictive analytics aim to detect and fix problems without the need for support calls. InfoSight uses AI and machine learning and is integrated with the DSCC so users can track Alletra's status. HPE also promised that support calls would go to "Level 3" experts with access to InfoSight telemetry for a customer's arrays.

Data Services Cloud Console

This is based on the SaaS, cloud-native technology that underpins Aruba Central and has an API usable by applications, partner-led and custom-built data services. It can manage fleets of Alletra deployments, potentially thousands of systems across geographies.

New hardware devices are connected to the network, powered up, and then auto-discovered and activated. Configuration parameters can be pre-defined and automatically deployed with no need for specialist admin involvement.
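
HPE has not published how discovery and activation work. The sketch below shows one common zero-touch pattern under stated assumptions: serial numbers registered in advance are mapped to configuration profiles defined before the hardware arrives, and a newly discovered box is activated with its profile applied automatically. The registry, profile names, settings and the `on_discovered` hook are all hypothetical.

```python
from typing import Optional

# Hypothetical: serial numbers registered in advance, mapped to a profile name.
REGISTERED_DEVICES = {"SN-1001": "london", "SN-1002": "frankfurt"}

# Hypothetical configuration profiles defined once, before any box arrives.
PROFILES = {
    "london": {"timezone": "Europe/London", "dns": "10.1.0.53", "host_group": "ldn-prod"},
    "frankfurt": {"timezone": "Europe/Berlin", "dns": "10.2.0.53", "host_group": "fra-prod"},
}


def on_discovered(serial: str) -> Optional[dict]:
    """Called when a newly powered-up array announces itself on the network."""
    profile_name = REGISTERED_DEVICES.get(serial)
    if profile_name is None:
        return None  # unknown device: not activated automatically
    # Activate and push the pre-defined settings; no admin touches the box.
    return {"serial": serial, "activated": True, **PROFILES[profile_name]}


for serial in ["SN-1001", "SN-1002", "SN-9999"]:
    print(serial, on_discovered(serial))
```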

Cloud Data Services

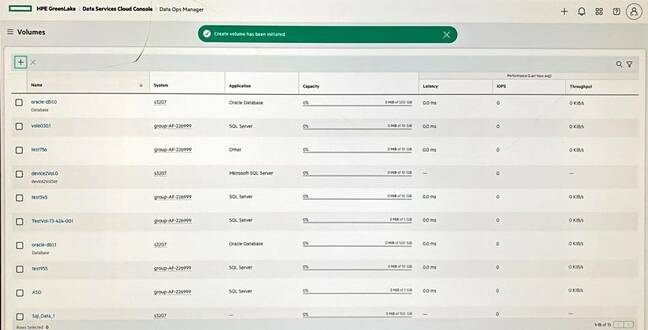

A Data Ops Manager manages the data infrastructure from any device and provides self-service, on-demand, intent-based provisioning of services. HPE said it is "AI-driven" and application-centric, and is meant to optimise service-level objectives.

Infrastructure services can include deployment, configuration and management of individual devices and of device fleets, software upgrades that run as background processes, and optimisation of resource efficiency using machine learning.

Data services can include provisioning capacity (volumes), data access, protection, search and movement. Volume creation is a four-step process: select workload (eg SQL Server), define number of volumes, set volume size and select a host group.

The provisioning is called "intent-based" as capacity is provisioned in such a way as to optimise SLAs for the particular workload. It is neither manual nor LUN-centric.
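
The four-step flow can be sketched as a function that turns the user's intent (workload, count, size, host group) into concrete volume specs, with tuning derived from the workload rather than set by hand. HPE has not published its policy logic; the policy table, its setting names and values, and the naming scheme below are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy table: settings the system derives from the workload.
WORKLOAD_POLICIES = {
    "SQL Server": {"block_size_kib": 8, "dedupe": True, "performance_tier": "high"},
    "VDI": {"block_size_kib": 4, "dedupe": True, "performance_tier": "standard"},
}


@dataclass
class VolumeRequest:
    workload: str    # step 1: select workload
    count: int       # step 2: define number of volumes
    size_gib: int    # step 3: set volume size
    host_group: str  # step 4: select a host group


def plan_volumes(req: VolumeRequest) -> list[dict]:
    """Expand a four-field intent into concrete volume specs."""
    policy = WORKLOAD_POLICIES[req.workload]
    prefix = req.workload.lower().replace(" ", "-")
    return [
        {
            "name": f"{prefix}-{i + 1:02d}",
            "size_gib": req.size_gib,
            "host_group": req.host_group,
            **policy,  # tuning comes from the workload, not hand-set per LUN
        }
        for i in range(req.count)
    ]


for spec in plan_volumes(VolumeRequest("SQL Server", 2, 512, "sql-hosts")):
    print(spec)
```

The point of the design is that the caller never states block size or data reduction settings; those follow from the declared workload.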

We have yet to see a catalogue of the available cloud data services.

Cloud Volumes

The Cloud Volumes scheme has HPE arrays located physically close to public cloud regional centres, and hosted by HPE, so that public cloud-based apps can quickly access data on them. Data is replicated between the on-premises Alletra and the near-cloud arrays. As far as a public cloud compute instance is concerned, the Cloud Volume is presented as a cloud block volume, an EBS volume in AWS for example.

The data isn't actually stored in the public cloud, which is why there are no egress fees.

Alletra has container ecosystem integration with the HPE Container Storage Interface (CSI) Driver for Kubernetes. Developers can use the cloud for development and testing with data stored in Cloud Volumes.
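
From a developer's side, a CSI driver means applications request storage through a standard Kubernetes PersistentVolumeClaim that names a StorageClass, and the driver provisions the volume on the array. The sketch below builds such a claim as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The StorageClass name `hpe-standard`, the claim name and the size are assumptions, and the StorageClass itself (which would name the HPE CSI Driver as its provisioner) is not shown.

```python
import json


def pvc_manifest(name: str, size_gib: int, storage_class: str = "hpe-standard") -> dict:
    """Build a Kubernetes PersistentVolumeClaim for a CSI-backed StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],   # mounted read-write by a single node
            "resources": {"requests": {"storage": f"{size_gib}Gi"}},
            "storageClassName": storage_class,  # assumed to be backed by the HPE CSI Driver
        },
    }


if __name__ == "__main__":
    # Pipe the output into `kubectl apply -f -` to create the claim.
    print(json.dumps(pvc_manifest("test-db-data", 32), indent=2))
```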

The Data Services Cloud Console, cloud data services, and HPE Alletra will be available for order globally direct and through channel partners this month. These products and services are available through GreenLake subscription or through a perpetual license model.

HPE did not provide Alletra data sheets or pricing but did say there is flat support pricing for the life of Alletra.

Comment

HPE has a vision to become an edge-to-cloud platform-as-a-service company. That means its servers have got to become part of the everything-as-a-service game too. Logically, the DSCC or an equivalent entity will need to provision servers as well as storage.

Existing HPE storage customers will need help in this transition to an Alletra-led future. Primera and 3PAR customers should have a migration facility available in a few months.

Nothing has been said by HPE about a 3PAR upgrade programme, nor about how the XP8, OEM'd from Hitachi, and HPE's MSA arrays fit into the scheme.

We understand the block storage focus will be expanded to include file level access, with HPE partners being given API access so they can integrate their file-based offerings with the DSCC. We envisage this could apply to Qumulo and WekaIO, for example.

Logically object storage access will also be embraced by HPE’s storage-as-a-service unified operations design, and we think partners such as Cloudian and Scality will be able to join in as well - eventually.

There is also, the El Reg storage desk believes, a missing capacity play here, and disk storage will surely have a role to play. ®