What to do about open source vulnerabilities? Move fast, says Linux Foundation expert

The CIO does not decide how soon you need to respond. 'The person who decides is the attacker'

QCon Plus Automated testing and rapid deployment are critical to defending against vulnerabilities in open source software, said David Wheeler, director of Open Source Supply Chain Security at the Linux Foundation.

Dr Wheeler, who is the author of multiple books on secure programming and teaches a course on the subject at George Mason University in Virginia, US, was speaking at the QCon Plus event under way online this week.

How bad is the problem?

Wheeler referenced a 2021 report by software security and IoT (Internet of Things) company Synopsys which said there are an average of 528 open source components per application, that 84 per cent of codebases have at least one vulnerability, and the average number of vulnerabilities per codebase is 158. This was based on an audit of 1,546 codebases, where a codebase is defined as "the code and associated libraries that make up an application or service."

"I want to emphasise that software is under attack," Wheeler said.

It is not quite as bad as it sounds, since not all vulnerabilities are exploitable. "Just because there's a dependency that's a vulnerability, does not mean it is exploitable in your situation," said Wheeler.

The vulnerabilities may be in features of a library that the application does not use, or where malformed data cannot reach. "However it can be very difficult to determine whether something is a vulnerability… although you may think it is not exploitable it is very difficult to verify," he cautioned.

'The many eyes theory does work'

Is open source or proprietary software more secure?

"The correct answer is neither," said Wheeler. "If you care about security you evaluate the software." Nevertheless, Wheeler said that open source is potentially more secure because of the long-standing secure software design principle that "the protection mechanism must not depend on attacker ignorance," as explained in a paper by Jerome Saltzer and Michael Schroeder in 1974.

This gives open source software an advantage. "The many eyes theory does work," said Wheeler.

Failure to update

A large part of the problem is that vulnerable software does not get updated. "It's well-known that known-vulnerable reused software is a serious problem today. Many applications, many systems fail to update all the components that are used within them," he said. This is true for closed source as well, but "there's a lot more open source software in use."
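As a rough illustration of what checking for known-vulnerable components involves, the Python sketch below compares an inventory of installed versions against a list of advisories. Everything in it is hypothetical: the `Advisory` type, the `outdated` helper, and the component names and version numbers are invented for the example, and it assumes plain dotted numeric versions. Real tooling draws on published vulnerability data and handles far messier version schemes.

```python
from typing import NamedTuple


class Advisory(NamedTuple):
    """Hypothetical advisory record: a component and its first fixed version."""
    component: str
    fixed_in: tuple[int, ...]


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '1.2.10' into (1, 2, 10) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def outdated(inventory: dict[str, str], advisories: list[Advisory]) -> list[str]:
    """List components whose installed version predates a known fix."""
    findings = []
    for adv in advisories:
        installed = inventory.get(adv.component)
        if installed is not None and parse_version(installed) < adv.fixed_in:
            fixed = ".".join(str(n) for n in adv.fixed_in)
            findings.append(f"{adv.component} {installed} < {fixed}")
    return findings


# Invented data for illustration only; not real packages or advisories.
inventory = {"libexample": "1.2.9", "otherlib": "3.0.1"}
advisories = [Advisory("libexample", (1, 2, 10)), Advisory("otherlib", (2, 5, 0))]
print(outdated(inventory, advisories))
```

Comparing versions as tuples of integers rather than strings matters here: as text, "1.2.9" sorts after "1.2.10", which would hide exactly the kind of stale component the check is meant to find.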

What is the solution? There is no single answer, but Wheeler offered numerous practical suggestions. Developers, he said, should "learn how to develop and acquire secure software," referencing a number of courses, best practices and tools, some of them free.

A course is not a huge time commitment, he said, and might take just a day and a half. A Linux Foundation "CII [Core Infrastructure Initiative] Best Practices" program specifies a number of requirements for software projects including that static analysis tools must be used and that there must be an automated test suite.

Unit testing is essential, but Wheeler noted that a weakness of test-driven development is that the model of writing a test, and then the code to make the test pass, does not include negative tests. "You need to test to make sure that things that shouldn't happen, don't happen ... a failure to include negative tests is one of the big problems in a lot of test suites today. It's how the Apple goto fail vulnerability happened," said Wheeler, referring to the 2014 flaw in Apple's SSL/TLS certificate validation code.
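To make the distinction concrete, here is a minimal Python sketch of a positive test alongside negative ones. The `parse_port` function and its test class are hypothetical, written for this example rather than taken from Wheeler's talk. The positive test confirms that valid input is accepted; the negative tests confirm that input which should be refused really is refused.

```python
import unittest


def parse_port(text: str) -> int:
    """Parse a TCP port number, rejecting anything outside 1-65535."""
    if not text.isascii() or not text.isdigit():
        raise ValueError(f"not a decimal number: {text!r}")
    port = int(text)
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port


class ParsePortTests(unittest.TestCase):
    def test_accepts_valid_port(self):
        # Positive test: the behaviour we want does happen.
        self.assertEqual(parse_port("443"), 443)

    def test_rejects_bad_input(self):
        # Negative tests: the behaviour we do not want is refused.
        for bad in ["", "0", "65536", "-1", "4 43", "80; rm -rf /"]:
            with self.subTest(bad=bad):
                with self.assertRaises(ValueError):
                    parse_port(bad)
```

Saved as a module, this can be run with `python -m unittest`. A suite containing only the first test would still pass if the validation in `parse_port` were deleted entirely, which is the gap Wheeler describes.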

He recommended caution with little-used software. "If there are no users, it's probably going to get no reviewers. No use is a problem," he said. The solution if it is still needed is to "look at it yourself."

The programming language does make a difference

"There is no language that guarantees no vulnerabilities. That said, there are certain vulnerabilities that are common in certain languages. C and C++ are not memory safe. In most languages, trying to access an array out of bounds will immediately be caught. Not so in C. That will turn into an instant potential for vulnerabilities… C and C++ have a huge number of undefined behaviours," Wheeler said.
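For a sense of what "immediately caught" looks like, here is a small Python sketch; the `read_item` helper and the sample data are made up for the example. Reading past the end of a list raises an `IndexError` instead of returning whatever happens to sit in adjacent memory, which is what an unchecked read in C may do.

```python
def read_item(buffer: list[int], index: int) -> int:
    """Return buffer[index]; Python checks the bound on every access."""
    return buffer[index]


buffer = [10, 20, 30]

try:
    read_item(buffer, 7)  # well past the last valid index, 2
except IndexError as exc:
    # The runtime stops the access instead of reading stray memory.
    print(f"caught: {exc}")
```

One caveat: Python treats negative indexes as counting from the end of the list, so `buffer[-1]` is valid and returns 30. Bounds checking removes the memory-safety problem, but code that accepts an index from outside still needs to validate it.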

What about the performance overhead of safer languages? "Rust is pretty impressive that way because the performance penalty is relatively small. In general there is either no or very little penalty."

In some cases, Rust may perform better because "the Rust model makes it much easier to write parallel code without fear," he said. There are other high performance options, he added, including Ada and Fortran. Fortran is "incredibly fast," he said.

Once a vulnerability is discovered, how long do businesses have to update? "The CIO does not decide how fast you need to repair it. Your process does not decide. The person who decides is the attacker. The attacker decides when you need to fix it," said Wheeler.

"I run a project where we generally update to production in a day, and we try to do it in an hour. You have to move faster than the attacker… I realise that's a hard bar for many organisations. You have to respond and deploy faster than the attacker can exploit… You are on a clock, and the clock is not measured in months."

The implication is that effective continuous delivery is not only good for business agility, but also essential for security. "You should have everything, all your automated tools, in place," said Wheeler. "Now is the time to fix your test suite."

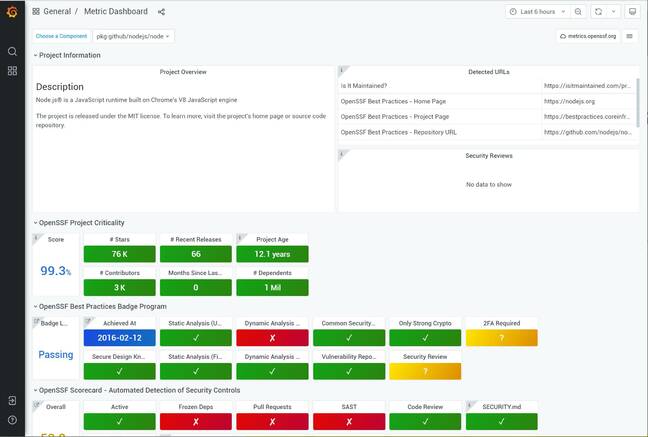

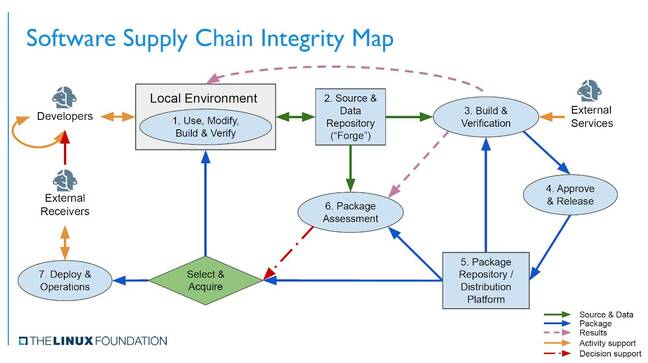

The issue is challenging, but Wheeler said there are initiatives that may improve matters. He mentioned the SPDX project for specifying the "bill of materials" used by a software library or application, and the Open Source Security Foundation (OpenSSF) Security Metrics dashboard, which helps developers and users assess the security of specific packages, though it is in its early stages. He also mentioned the trend toward reproducible builds.

"I think we'll see a lot more verified reproducible builds… the idea is that if you can rebuild from the same source code and produce exactly the same resulting executable package, with multiple different independent efforts, it's much less likely that they were all subverted."
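The comparison step Wheeler describes can be sketched in a few lines of Python. This is an illustration under assumptions, not the procedure of any particular project: the `sha256_of` and `builds_agree` helpers are hypothetical, and the sketch takes for granted that several parties have already built the same source independently and that their output files are available locally.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def builds_agree(artifacts: list[Path]) -> bool:
    """True when every independently built artifact is bit-for-bit identical."""
    if len(artifacts) < 2:
        raise ValueError("need at least two independent builds to compare")
    # Identical files produce identical digests, so one distinct digest
    # means all builders produced the same bytes.
    return len({sha256_of(path) for path in artifacts}) == 1
```

The hard part of reproducible builds lies upstream of this check, in removing timestamps, build paths and other sources of variation so that honest builds match in the first place. Once they do, a mismatch becomes a meaningful signal that one build environment differs from the others.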

The Debian project has made significant progress with this for Bullseye, the next major release. ®