Robots still suck. It's all they can do to stand up – never mind rise up

Simultaneous localisation and mapping is hard so for the foreseeable future ’bots will remain bolted down

Feature Just after lunch on a sweltering summer day in Brisbane, Australia, a dozen scientists and engineers gathered to watch a dog named Bingo stand up and trot gingerly towards a man-made tunnel. At the entrance, Bingo stopped to 'think' for a minute or so before turning its body to walk inside.

Bingo was then joined by four siblings, and together they were asked to navigate a series of obstacles to map out the course and locate a series of objects.

Without any human help.

Which wasn't easy – because Bingo is a robot.

Bingo and her brood were being trained by a team of scientists from Australia's Commonwealth Scientific and Industrial Research Organisation (CSIRO) ahead of the latest robotics competition run by the USA's Defense Advanced Research Projects Agency (DARPA).

The competitions, held irregularly since 2004, are credited with spurring many of the latest innovations in automation and robotics, including the development of autonomous cars. Waymo, Alphabet's self-driving car outfit, was spun off from the winners of the 2005 competition.

DARPA's current three-year competition has set the bar higher. Previous editions required vehicles and robots to run through courses and avoid known obstacles. This time robots will be required to complete a simulated rescue mission and guide themselves through a new and unknown terrain.

Along the way the robots will be asked to find several objects, including a backpack, a mobile phone, and a heat-emitting mannequin representing a human, before reporting the information back to mission control. A human operator can set priorities and compile the data the robots send, but the robots must surmount any obstacles and work through any problems they encounter by themselves. The winners of the competition will share in a prize pool of $3.5m.

They work among us

The new competition is conceivable thanks to a generation of more sophisticated robots that are now coming to market. Boston Dynamics, the darling of the robotics industry, is already selling Spot, its mechanical dog model, for $75,000 a pop.

Ghost Robotics, a spinout from the University of Pennsylvania's famed robotics lab, sells its mechanical dog model to enterprises and government agencies, including the Australian Army and the US Air Force. Digit, a human-like robot that can carry loads of up to 20kg, is sold by Agility Robotics for $250,000.

Meanwhile, robots are common in commercial settings.

Research firm RoboGlobal predicts that by the end of this year there will be more than 3.2 million robots in factories around the globe – double the number in use in 2015.

Most are what robo-boffins call "dumb robots" – like the stationary arms programmed to perform specific, repetitive tasks. The objects they handle are at known locations, or they arrive at predictable speeds on a conveyor belt.

Automated guided vehicles, such as driverless forklifts, are common in modern warehouses but need dedicated infrastructure to operate properly. One wrong QR code, or a shelf that's a few millimetres outside a set tolerance, and they struggle.

Newer robots like Bingo are different because they have onboard navigation and processing capabilities. They can therefore interact autonomously with the world around them, whether that means navigating a factory full of workers and other machines or handling simple objects like a door handle.

Do Bingo and her ilk herald the arrival of ubiquitous 'bots that do our dirty work – be it folding laundry, walking the dog, or digging coal?

Sadly, demos of robots moving seamlessly through rough and crowded terrain remain a pleasing glimpse of a fantasy future. Autonomy technology remains well short of widespread use.

CSIRO's test run is a case in point. The challenge before Bingo sounds simple: the robots need to navigate a series of obstacles that wouldn't trip up a human child and scout out several objects. They don't need to use them – just locating them is enough. Yet by the end of the hour-long test run that The Register attended, all five robots were out of service and only a third of the objects on the course had been logged. One robot, named Kitty, upended itself in a ditch. Bingo got lost, and lost communications with the team outside. A flying drone robot malfunctioned and wasn't even able to enter the course.

Yet at the post-test debrief, CSIRO staff considered this a decent result.

"Not many people think of walking through a doorway without hitting the walls as sophisticated. But for robotics this is cutting-edge stuff," said Navinda Kottege, the CSIRO team's leader.

Robo-boffins are not alone in their struggles. Although most big logistics companies use robots to move inventory around, the so-called last line, which handles the final picking and packing of items to and from delivery vehicles, is still handled by humans.

Amazon ran a "pick and place" challenge for three years, asking teams of roboteers to retrieve random known objects from warehouse shelves. The competition ended in 2017 with human workers coming out on top. Walmart, the world's biggest retailer, recently cancelled a five-year contract with robotics firm Bossa Nova to use its robots to check inventory levels in stores. The retailer has reverted to using human workers.

SLAMming against walls

Boffins told The Register that two key challenges are proving hard to solve.

The first is teaching robots how to perceive the world around them. "Dumb robots" work because they rely on external infrastructure and are only asked to detect and interact with specific objects in specific settings. An automated guided vehicle in a warehouse might give the impression that it is autonomously whizzing around a warehouse, but it is practically blind. The device is programmed to run a predetermined course through the warehouse floor to place a certain item on a certain shelf.

Autonomous robots don't get the luxury of support infrastructure, and must instead adapt to the environment. To do this, they need to be able to detect both the objects they are built to interact with and the world around them – so they can make decisions about how they should behave.

But programming robots to see and understand their surroundings is hard because it requires simultaneous localisation and mapping (SLAM) – mapping a new location, including potential hazards, at the same time as the device moves through that location.

SLAM creates a chicken-or-egg problem: how can 'bots navigate and interact with objects in a setting when they don't know their own position in that setting?
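The chicken-or-egg problem can be sketched in a few lines of Python. What follows is a toy, assuming a robot that moves along a single line and can measure the gap to a single landmark; it uses a textbook linear Kalman filter over the pair (robot position, landmark position), and it is not CSIRO's method or any competitor's. Every name and number here is invented for illustration, and the simulated wheel slip is a fixed 10 per cent with noise-free readings so the run is repeatable.

```python
def predict(state, cov, commanded_move, move_var):
    """Dead-reckon the robot forward; only the robot's uncertainty grows."""
    (x, m), ((pxx, pxm), (pmx, pmm)) = state, cov
    return (x + commanded_move, m), ((pxx + move_var, pxm), (pmx, pmm))


def update(state, cov, measured_gap, gap_var):
    """Correct robot AND landmark from one reading of (landmark - robot)."""
    (x, m), ((pxx, pxm), (pmx, pmm)) = state, cov
    innovation = measured_gap - (m - x)       # surprise in the reading
    s = pxx - pxm - pmx + pmm + gap_var       # how uncertain that surprise is
    kx = (pxm - pxx) / s                      # Kalman gain for the robot
    km = (pmm - pmx) / s                      # Kalman gain for the landmark
    new_state = (x + kx * innovation, m + km * innovation)
    new_cov = (
        ((1 + kx) * pxx - kx * pmx, (1 + kx) * pxm - kx * pmm),
        (km * pxx + (1 - km) * pmx, km * pxm + (1 - km) * pmm),
    )
    return new_state, new_cov


def run_demo(steps=10):
    """Hypothetical scenario: landmark at 10 m, wheels slip by 10 per cent."""
    true_x, landmark = 0.0, 10.0
    state = (0.0, 0.0)                  # known start; landmark guess is arbitrary
    cov = ((0.0, 0.0), (0.0, 1e6))      # landmark position essentially unknown
    state, cov = update(state, cov, landmark - true_x, 0.01)
    dead_reckoned_x = 0.0
    for _ in range(steps):
        true_x += 0.9                   # ground actually covered
        dead_reckoned_x += 1.0          # what the odometry believes
        state, cov = predict(state, cov, 1.0, 0.1)
        state, cov = update(state, cov, landmark - true_x, 0.01)
    return true_x, dead_reckoned_x, state
```

Calling `run_demo()` returns the true position, the odometry-only guess, and the filter's joint estimate. The odometry-only guess drifts by a full metre over ten steps, while the joint estimate stays within a few centimetres, because each reading corrects the robot's position and the map at once. A real robot faces the same loop in three dimensions with thousands of landmarks and no guarantee that it recognises any of them twice.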

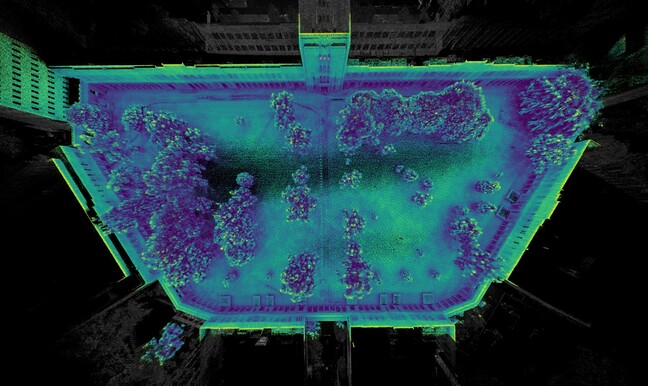

Each team in the current DARPA competition has created its own SLAM solution. The CSIRO team's answer, called Wildcat, looks like a spinning LIDAR sensor placed atop each robot's head. The team wouldn't reveal precisely how Wildcat works, but boasted that it works well in almost all settings – unlike some of its competitors, which are more tailored to pre-defined environments.

But these solutions are far from foolproof. LIDAR sensors work by beaming lasers out in all directions and using the reflections to build a map of what surrounds them. They generate an intensity reading that helps them tell the difference between, say, a brick wall and a cloud of smoke. LIDAR, however, is not sensitive enough for robots to identify an object correctly every time.
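For a rough sense of how range readings become a map, here is a minimal sketch assuming a flat, two-dimensional sweep with evenly spaced beams and a robot sitting at the origin. The function name, the one-metre cell size, and the 30-metre cut-off are all assumptions made for illustration; intensity readings are not modelled at all, and real sensors report far denser, three-dimensional data.

```python
import math


def scan_to_cells(ranges_m, cell_size_m=1.0, max_range_m=30.0):
    """Turn one 360-degree sweep of range readings into occupied grid cells."""
    cells = set()
    step = 2 * math.pi / len(ranges_m)        # angle between neighbouring beams
    for i, r in enumerate(ranges_m):
        if not (0.0 < r < max_range_m):
            continue                          # no usable return on this beam
        x = r * math.cos(i * step)            # polar to Cartesian
        y = r * math.sin(i * step)
        # Snap the hit to the nearest grid cell.
        cells.add((round(x / cell_size_m), round(y / cell_size_m)))
    return cells
```

Given four beams that each report two metres, except one that gets no return, `scan_to_cells([2.0, 2.0, float("inf"), 2.0])` marks three cells as occupied. Note what the sketch cannot do: a return is a return, so nothing here distinguishes a wall from anything else that happens to bounce the beam back.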

One issue the CSIRO team has had to contend with is robots mistaking tall grass for insurmountable solid walls. Sometimes even a few blades of tall grass can stop a robot in its tracks. In other tests, robots have struggled to differentiate between a flat surface of water and solid ground (they look the same to a LIDAR sensor).

Another terrain identification error involved spotting the difference between a ramp and a cliff. Spoiler alert: it ended badly.

Minor differences, barely noticeable to the human eye, can also confuse robots.

A 2013 study led by Google researchers found that slight alterations to traffic signs were interpreted in wildly different and sometimes bizarre ways by AI. Alterations such as tiny smears of spray paint or stickers on stop signs could confuse the sensors into seeing a different type of sign.

"You can sit down and code up lots of rules for a robot to follow – that's historically how it was done," explained Professor Stefan Williams of the University of Sydney. "But it's those edge cases where the robots are having to make decisions in unfamiliar situations, or where something has changed, or there is uncertainty and they're unsure of what they're looking at. Those are the situations that are going to give robots trouble. People are very good at reasoning and abstracting based on what they've seen before, whereas robot systems are not particularly good at that."

Even if they could see objects, robots would struggle to grab and manipulate them. Humans can do this easily because we have special nerve endings, called mechanoreceptors, in our skin. These provide our brains information about the shape, feel and weight of objects we handle. Over time, we learn to adapt our grip to various items subconsciously.

Robots need to be programmed with that information. Generally this requires scientists giving robots a set of predefined strategies for handling particular items – for example, when grabbing a cylindrical object, robots are told to hold it by the flat outside edges.
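In code, a set of predefined strategies can be as plain as a lookup table. The sketch below is purely illustrative: only the cylinder rule comes from the description above, while the box rule, the names, and the fallback message are invented.

```python
# Hypothetical rule table: shape label -> handling strategy.
GRASP_RULES = {
    "cylinder": "hold by the flat outside edges",   # the example given above
    "box": "suction on the largest flat face",      # invented for illustration
}


def choose_grasp(shape):
    """Return the canned strategy for a shape, or admit there isn't one."""
    return GRASP_RULES.get(shape, "no rule: hand off to a human")
```

`choose_grasp("cylinder")` returns the canned answer, while `choose_grasp("bag of oranges")` falls through to the fallback. The table only ever covers what someone thought to put in it.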

This strategy has been effective. Berkshire Grey, which serves Walmart, Target, and FedEx, uses a system of vacuum grippers that can typically handle about 90 per cent of its customers' stock off the shelf, according to the firm's chief scientist, Matt Mason. Several firms, including Saga Robotics and Xihelm, are piloting fruit-picking robots intended to relieve post-Brexit labour shortages in the UK. Agerris, a spinout from the University of Sydney, is deploying a robot that can move over crops and detect and pick weeds without damaging produce.

But robotic manipulation is far from perfect. Irregularly shaped or textured items continue to give robots trouble. Produce, for example, is notoriously difficult for robots to handle because it has no standard shape – no two strawberries are precisely the same. Packaging often gives robots trouble as well. A bag of oranges is simple for a human to pick up but often confounds robots because it shifts and moves unpredictably. Items wrapped in slippery clear plastic can confuse cameras and trouble grippers.

"Compared to humans, robots suck at manipulating objects. That's true," says Mason. "But we have to remember that robotics is almost unique in comparing itself against the standard of humans. If you look at robotics away from that standard, they are pretty marvellous tools."

Even if scientists can get robots to handle specific items, broadening out remains a challenge. A laundry-folding robot, for example, might be able to fold queen-sized sheets, but it will struggle with non-standard items such as fitted sheets and strappy dresses, never mind undergarments.

As MIT's latest Future of Work report put it last year: "Until recently, robots used traditional forms of two-fingered pincers or single-purpose tools which can pick up objects but risk damaging soft or inconsistent materials.

"More recently, purpose-built automated grippers directed by machine vision can do remarkably delicate and precise work – for example, picking up glazed donuts on an automated bakery line without cracking the shiny coating. But such a gripper might work only on doughnuts. It can't pick up a clump of asparagus or a car tire."

Even if autonomous robots do not attain near-human capabilities, they can still do what boffins call the three Ds: Dull, Dangerous, and Dirty jobs that are better performed by robots than by humans.

Boston Dynamics' Spot offers a useful example of this approach at Ford, which is using two of the devices to build a detailed computer model of its 200,000 square-metre Van Dyke Transmission Plant in Detroit.

Normally, this process would take dozens of workers weeks to complete and cost around $300,000, but Ford reckons its bots – named Fluffy and Spot – can do it in half the time and at a fraction of the cost. Pharma giant Merck is using Spot to perform safety checks of its thermal exhaust treatment facilities. Singapore enlisted one of the mechanical canines to patrol through parks and bark at visitors if they were not socially distancing (but decided to relieve the hounds from duty after a trial).

To serve man

Until robotics improves, more serious tasks will remain out of reach. "If a robot fails when it is vacuuming your floor, that's not a problem. But if it fails while walking your dog in the park, then you've got yourself some very upset children," Rian Whitton of ABI Research told The Register.

One way forward for now is not to have robots replace human workers, but rather help them. Fanuc, one of the world's largest robot makers, has seen a surge in demand for collaborative robots, commonly known as "cobots", since the pandemic. While most industrial robots are well separated from humans by cages and other barriers, cobots are equipped with force-torque sensors, which limit the speed and force of their movements and make them safe to interact with humans.

Among many other uses, cobots are being deployed alongside workers at manufacturing workstations to inspect products for faults as they are being built. Robotic exoskeletons, while a significantly smaller market, are being used in settings where heavy lifting is required, such as in warehouses and in Japanese nursing homes.

From his discussions with commercial partners, CSIRO's Kottege reckons that "low-level autonomy" is the way forward for now. "They still want a human to be the main decision maker. They still want control over how the mission is specified, but they want [the] robot to automatically avoid bumping into things, not tear up ducts, not get tangled up in cables."

All of which means mass displacement of human workers is not imminent.

Neither is a robot uprising. "I don't think that's going to happen any time soon," answers Kottege with a laugh.

And what if he's wrong? "Go stand behind some tall grass – I think you'll find you'll be just fine." ®