
If it's going to rain within the next 90 mins, this very British AI system can warn you

Met Office, DeepMind, uni team hope their work will make a splash

Computer scientists at DeepMind and the University of Exeter in England teamed up with meteorologists from the Met Office to build an AI model capable of predicting rain up to 90 minutes ahead.

Traditional forecasting methods rely on solving complex equations that take into account various weather conditions, such as air pressure, moisture, and the temperature of Earth’s atmosphere. The trouble is, at least in Blighty, these systems tend to predict what lies in store for us whole days or weeks ahead.

Deep-learning models are better suited for making more near-term forecasts – such as within the next couple of hours – according to a paper published by the aforementioned boffins in Nature on Wednesday. There are advantages to using AI algorithms; they don’t have to solve thermodynamic equations and are less computationally intensive than other predictive techniques.

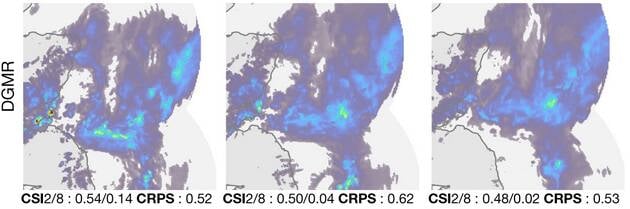

The team led by DeepMind trained a generative adversarial network (GAN) to produce a sequence of maps indicating where it's going to rain. Each precipitation map shows where moisture is accruing and moving in the atmosphere over a region measuring 1,536 × 1,280 km. Millions of examples of these maps were gathered from radar observations from 2016 to 2018. Data gathered in 2019 was reserved for testing.

During the training stage, the model was fed sequences of these maps, each one capturing weather data over a five-minute interval. It learned to pick up on common patterns describing how clouds spread in the sky and whether they produced rain or not.

In the testing stage, the system was given four previous maps and asked to generate the next series, predicting rainfall in five-minute intervals up to 90 minutes ahead. Essentially, it’s a bit like feeding a system a short video clip and training it to predict the next frames.
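As a rough illustration of that setup, here is a minimal sketch of how a time-ordered run of radar frames could be cut into pairs of four observed frames and the frames that follow, out to 90 minutes. The function name `make_windows`, the constants, and the use of integers as stand-ins for radar maps are all assumptions made for this example; they are not taken from the paper or from DeepMind's code.

```python
from typing import List, Sequence, Tuple

FRAME_MINUTES = 5    # spacing between radar maps, per the article
CONTEXT_FRAMES = 4   # previous maps handed to the model
LEAD_MINUTES = 90    # how far ahead the forecast runs
TARGET_FRAMES = LEAD_MINUTES // FRAME_MINUTES  # 18 maps to predict


def make_windows(
    frames: Sequence,
    context: int = CONTEXT_FRAMES,
    target: int = TARGET_FRAMES,
) -> List[Tuple[list, list]]:
    """Slide over a time-ordered sequence and return (context, target) pairs.

    Hypothetical helper: each pair holds `context` observed frames followed
    by the `target` frames a model would be asked to predict.
    """
    span = context + target
    return [
        (list(frames[i:i + context]), list(frames[i + context:i + span]))
        for i in range(len(frames) - span + 1)
    ]


# Integers stand in for radar maps here; real frames would be 2D grids.
frames = list(range(24))  # two hours of five-minute frames
windows = make_windows(frames)
```

With 24 frames and a 22-frame span, the sketch yields three overlapping windows; a run shorter than 22 frames yields none.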


Its performance was judged on a number of factors, including how smooth and gradual the changes between the frames, or maps, were. Rather than rely on a single straightforward measure of accuracy, the team asked a panel of more than 50 expert meteorologists to rank the predictive maps produced by the GAN against maps produced by other, more traditional numerical weather prediction systems.

“Using a systematic evaluation by more than 50 expert meteorologists, we show that our generative model ranked first for its accuracy and usefulness in 89 per cent of cases against two competitive methods,” the paper stated.
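For readers wondering how a "ranked first in X per cent of cases" figure is tallied, here is a minimal sketch. It assumes each case is reduced to the name of the method a meteorologist ranked first; the function `first_choice_share`, the method labels, and the ten sample votes are invented for illustration and are not the study's data.

```python
from collections import Counter
from typing import Dict, Iterable


def first_choice_share(first_choices: Iterable[str]) -> Dict[str, float]:
    """Return the fraction of cases in which each method was ranked first."""
    counts = Counter(first_choices)
    total = sum(counts.values())
    if total == 0:
        return {}  # no cases, so no shares to report
    return {name: n / total for name, n in counts.items()}


# Made-up votes: one entry per case, naming the top-ranked method.
votes = ["gan"] * 8 + ["method_a", "method_b"]
shares = first_choice_share(votes)
```

In this toy data the hypothetical `gan` label takes eight of ten first-place votes, a share of 0.8.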

But how accurate is the model really? It’s difficult to say, Niall Robinson, co-author of the study and head of partnerships and product innovation at the Met Office, told The Register.


“Machine learning algorithms generally try and optimise for one simple measure of how 'good' its prediction is. However, weather forecasts can be good or bad in lots of different ways; perhaps one forecast gets precipitation in the right location but at the wrong intensity, or another gets the right mix of intensities but in the wrong places, and so on. Ultimately, 'good' depends on what quality happens to be useful to whatever problem is at hand.

“We went to a lot of effort in this research to assess our algorithm against a wide suite of metrics, so we showed it was 'good' in several different ways. Moreover, we decided that in some senses, the purest way to see if our approach had value was to simply ask the end users – our own meteorologists. In a blind study, they overwhelmingly preferred our new approach to other algorithms. To our knowledge, this kind of really comprehensive assessment of multiple different kinds of 'good' hasn’t been done before to assess the use of AI in meteorology. I think it’s quite unusual in machine learning research full stop.”

The research project is more of a proof-of-concept effort at the moment, and the Met Office won’t be using AI algorithms in real-world forecasting any time soon. There are all sorts of other tools the weather agency has to build before something like a GAN can be used, Robinson said:

“Once a new bit of useful research is published, there is still a lot of work to do to create an operational service. For instance, we need to carefully consider how new tools are deployed and maintained, the best user interfaces for our meteorologists, and how it fits in with all the other forecasts we provide. That way, we can ensure we provide the reliability that is at the core of the Met Office purpose.”

The Met Office said it’s exploring ways to tackle other AI research problems with the help of industry and academia. ®