Italian researchers' silver nano-spaghetti promises to help solve power-hungry neural net problems

Back-to-analogue computing model designed to mimic emergent properties of the brain

Researchers in Italy have developed a physical system to mimic properties of human brains that they hope will massively reduce the power costs of neural networks fundamental to AI development.

Successful approaches to neural networks have largely depended on software representations of brain synapses on top of a conventional stack of digital computing hardware and software.

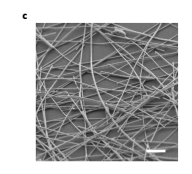

However, a paper published in Nature Materials this week shows that neural networks can be built using analogue computing based on a physical mesh of silver nanowires which, when viewed under an electron microscope, look rather appropriately like a plate of spaghetti.

Scanning electron microscopy image of a highly interconnected memristive nanowire network reservoir (scale bar, 2 μm) Image: Milano et al

The junctions where the wires cross are "memristive" in nature: their resistance depends on the history of the current that has passed through them. The resistive switching mechanism at the nanowire junctions is modulated by the formation and rupture of a silver conductive path across the nanowire shell layer, under the action of the applied electric field.

Gianluca Milano, a post-doctoral researcher at Istituto Nazionale di Ricerca Metrologica in Torino, told The Register the main goal was to massively reduce the number of training parameters needed to get neural networks to make sense of input data.

"For your neural networks, the most expensive part, in terms of power costs, is training: usually you have thousands of parameters that you have to train. And this is the root of the AI power consumption problem," he said.

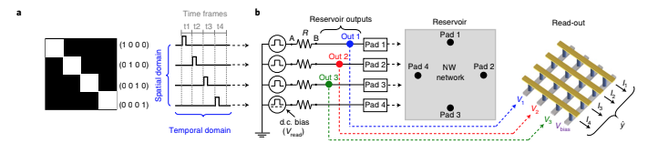

The researchers' response was to divide computation into two parts. In the first, the input is processed using short-term memory, which is handled by the physical reservoir and does not have to be trained. Only the second part, long-term memory, requires training in terms of "a fine-tuning of parameters," Milano said.

"In this sense, you can reduce the number of parameters that you have to train by a lot, and also you can simplify the hardware required," he said.

Using the approach, a 4x4 training input grid would require only three training parameters, rather than 16, the paper shows.
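The split between an untrained reservoir and a small trained readout can be sketched in software. What follows is a minimal illustration only, not the paper's method: it assumes a simulated random network of tanh nodes (an echo-state-style stand-in for the physical nanowire mesh), a toy task of recalling the previous input, and a ridge-regression readout. Every name and parameter value here (`run_reservoir`, `train_readout`, `demo`, the 20-node size, the 0.3 weight scale) is hypothetical.

```python
import math
import random


def run_reservoir(inputs, n_nodes=20, leak=0.5, seed=0):
    """Drive a fixed random reservoir; return one feature vector per input step."""
    rng = random.Random(seed)
    # These weights are drawn once and never trained.
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    w_res = [[0.3 * rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
             for _ in range(n_nodes)]
    state = [0.0] * n_nodes
    history = []
    for u in inputs:
        drive = [w_in[i] * u + sum(w_res[i][j] * state[j] for j in range(n_nodes))
                 for i in range(n_nodes)]
        # Leaky update: the old state fades gradually, giving short-term memory.
        state = [(1.0 - leak) * state[i] + leak * math.tanh(drive[i])
                 for i in range(n_nodes)]
        history.append(state + [1.0])  # constant 1.0 acts as a bias feature
    return history


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def train_readout(states, targets, ridge=1e-3):
    """Fit the only trained parameters: linear readout weights (ridge regression)."""
    k = len(states[0])
    gram = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
             for j in range(k)] for i in range(k)]
    rhs = [sum(s[i] * t for s, t in zip(states, targets)) for i in range(k)]
    return solve(gram, rhs)


def predict(weights, state):
    return sum(w * s for w, s in zip(weights, state))


def demo(n_steps=600, n_train=400, washout=20, seed=1):
    """Toy memory task: recover the previous input from the current state."""
    rng = random.Random(seed)
    inputs = [rng.uniform(-1.0, 1.0) for _ in range(n_steps)]
    states = run_reservoir(inputs)
    targets = [0.0] + inputs[:-1]  # target at step t is the input at step t-1
    weights = train_readout(states[washout:n_train], targets[washout:n_train])
    test = list(zip(states[n_train:], targets[n_train:]))
    mse = sum((predict(weights, s) - t) ** 2 for s, t in test) / len(test)
    mean_t = sum(t for _, t in test) / len(test)
    baseline = sum((t - mean_t) ** 2 for _, t in test) / len(test)
    return len(weights), mse, baseline


if __name__ == "__main__":
    n_trained, mse, baseline = demo()
    print(f"trained parameters: {n_trained}")
    print(f"test MSE: {mse:.4f} (predict-the-mean baseline: {baseline:.4f})")
```

In this sketch only 21 readout weights are fitted, while the 420 input and internal weights of the simulated reservoir stay fixed. In the hardware described in the article, that fixed part is the nanowire network itself rather than a simulated matrix.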

The system was trained to recognise handwritten numerals from 0 to 9, with another benchmark being the prediction of the Mackey–Glass time series, originally developed to model the variation in the relative quantity of mature cells in the blood – and considered difficult to predict with "conventional machine-learning algorithms".
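For readers unfamiliar with the benchmark, the Mackey–Glass series is generated by a delay differential equation in which the rate of change depends on the value some time in the past. The sketch below integrates it with a simple Euler step. The parameter values (`tau=17`, `beta=0.2`, `gamma=0.1`, `n=10`) are ones commonly used for this benchmark and are an assumption here, not figures taken from the paper; the function name `mackey_glass` and the step size are likewise illustrative.

```python
def mackey_glass(n_steps, tau=17.0, beta=0.2, gamma=0.1, n=10, dt=0.1, x0=1.2):
    """Euler integration of dx/dt = beta*x(t-tau)/(1 + x(t-tau)**n) - gamma*x(t)."""
    delay = int(round(tau / dt))      # the delay expressed in integration steps
    history = [x0] * (delay + 1)      # constant initial history (an assumption)
    for _ in range(n_steps):
        x_now = history[-1]
        x_delayed = history[-1 - delay]
        dx = beta * x_delayed / (1.0 + x_delayed ** n) - gamma * x_now
        history.append(x_now + dt * dx)
    return history[delay + 1:]        # drop the artificial initial history


if __name__ == "__main__":
    series = mackey_glass(5000)
    print(f"{len(series)} samples, min {min(series):.3f}, max {max(series):.3f}")
```

A prediction benchmark would then feed past samples of such a series into the reservoir and train the readout to output a future sample.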

The study is part of a trend looking into neuromorphic computing, which takes direct inspiration from brain structures and physics, rather than modelling these processes on a conventional computing stack.

From the paper: Fully memristive reservoir computing implementation and spatio-temporal evolution of the nanowire network reservoir state. Image: Milano et al

The principle demonstrated in the paper promises a reduction in power consumption of neural networks by several orders of magnitude.

Fellow author Carlo Ricciardi, associate professor at Politecnico di Torino, said that in simulated neural networks the energy cost was around 1 millijoule per synaptic event, whereas each synaptic event in a biological brain requires on the order of 10⁻¹³ joules. The research suggests systems might be built requiring about 10 times that amount.
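As a rough check on how those figures fit together, the short calculation below takes the numbers exactly as quoted and treats them as order-of-magnitude estimates rather than measurements. The variable names are illustrative.

```python
import math

SIMULATED_J = 1e-3                # about 1 millijoule per synaptic event, as quoted
BIOLOGICAL_J = 1e-13              # order of magnitude for a biological synapse, as quoted
PROPOSED_J = 10 * BIOLOGICAL_J    # "10 times that amount"

saving_factor = SIMULATED_J / PROPOSED_J
orders_of_magnitude = math.log10(saving_factor)

print(f"proposed system: about {PROPOSED_J:.0e} J per synaptic event")
print(f"saving versus simulation: about {orders_of_magnitude:.0f} orders of magnitude")
```

On these quoted figures the proposed hardware would sit about one order of magnitude above the brain and roughly nine below a simulated network.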

It's not quite the efficiency, and a long way from the scale, of the human brain, but it is perhaps an interesting step in the right direction. ®