Google focuses Lens on combined image and text searching

Want that thing, but in a different color, and don't know the name? Multisearch has your back

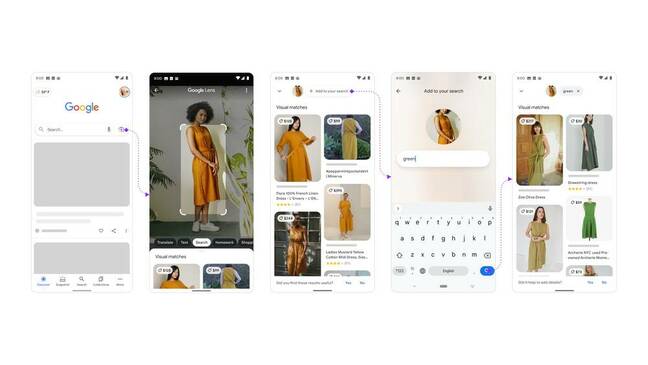

Google's latest feature lets its Lens visual search tool combine images with text, aimed at those vague queries that are difficult to describe.

That may not sound like a big deal, but Multisearch, which Google calls "a feat of machine learning," could change the way people perform some of the most common searches and take a bite out of those moments when you have a rough idea of what you're looking for but lack a suitable way to define it in text or image searches alone.

Retailers will salivate over the vague search option, for one, as highlighted by Belinda Zeng, Google Search product manager, who described several examples of how Multisearch could work: "How many times have you tried to find the perfect piece of clothing, a tutorial to recreate nail art or even instructions on how to take care of a plant someone gifted you – but you didn't have all the words to describe what you were looking for?"

Multisearch would address all of those situations: a photo of a dress could be queried with the name of a preferred color, photos of a finished set of nails could be searched with the phrase "how to," and unknown plants could be Googled with "care instructions."

In each of those cases, Google Search is addressing multiple unknowns: what the object in the image is, how the text request relates to the image, and how to combine the two into what the user wants.

In short, what you need is an expert on those particular subjects with the skill to infer, based on what it's presented with, the best course of action.

Say hi to MUM

Multisearch is possible thanks to Google's Multitask Unified Model, or MUM. MUM is an improvement on BERT, the AI language model Google brought to Search in 2019. BERT improved Google's understanding of about one in 10 English-language searches in the US, Google said. MUM, it added, is 1,000 times more powerful than BERT.

Google's Pandu Nayak described the difference between BERT and MUM using the planning of a hiking trip as an example: ask an expert how to prepare for a hike and they'll take all sorts of things into account.

"Today, Google could help you with this, but it would take many thoughtfully considered searches – you'd have to search for the elevation of each mountain, the average temperature in the fall, difficulty of the hiking trails, the right gear to use, and more," Nayak said.

With BERT, you'd have to make multiple searches (Nayak said eight on average for a query of that complexity), but MUM is designed to respond like the aforementioned professional. "MUM could understand you're comparing two mountains, so elevation and trail information may be relevant. It could also understand that, in the context of hiking, to 'prepare' could include things like fitness training as well as finding the right gear," Nayak said.

As with all things search, Google isn't providing specifics about its algorithms, so the inner workings of MUM and its Multisearch capabilities are likely to remain a mystery. Google said that Multisearch is available in beta through its iOS and Android apps in the US only, though at the time of writing it doesn't appear to be available in the iOS version of the app.

Google said MUM-powered Multisearch currently works best for shopping-related searches (e.g. drapes with pattern X, a dress in a different color, etc.). Other searches will work, but Google's not vouching for their effectiveness. We've asked Google if it can explain the technology behind Multisearch and future applications in more detail.

In the fourth quarter of calendar 2021, 'Google Search and other' brought in $43.3 billion in revenue, up from $31.9 billion in the same quarter a year earlier. The entire company turned over $75.3 billion for the quarter. ®