
OpenAI test drives caption-to-image-generating DALL·E 2

It paints, it edits, it potentially misleads people with faked pictures

OpenAI has provided its latest caption-to-image-generation model, dubbed DALL·E 2, to select users to test before it potentially opens up the technology for wider use.

Named after the surrealist artist Salvador Dali and the Pixar robot character Wall-E, the model's predecessor, DALL·E, was launched last year. This software is capable of creating images in various artistic styles when guided by text inputs: it generates pictures from what you describe to it. You ask for an anatomically realistic-looking heart, or a cartoon of a baby daikon radish in a tutu walking a dog, and it'll try its best to make an image matching that.

The newer version, DALL·E 2, is said to be more versatile and capable of generating images from captions at higher resolutions across more artistic styles. It comes with new abilities, too.

An example of DALL·E 2's output: an image generated from the prompt "An astronaut lounging in a tropical resort in space in a vaporwave style."

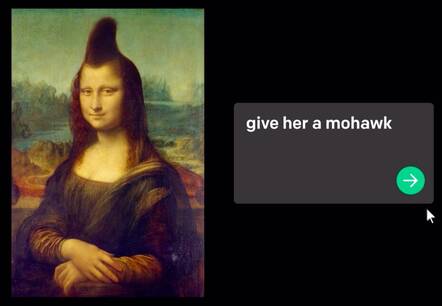

Users can now automatically edit a supplied image using the so-called in-painting function: tell DALL·E 2 what to add or change, and it'll try to make that edit. For example, highlighting the space above the Mona Lisa's head and typing "give her a mohawk" will superimpose an AI-generated spiky haircut onto the image of the famous painting.
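The core idea behind in-painting is that only the highlighted region changes. Everything outside the mask is kept from the original picture. Below is a minimal Python sketch of that compositing step. It is not OpenAI's code. The `generate` callback is a hypothetical stand-in for the model that produces new pixels, and images are plain nested lists of grayscale values.

```python
from typing import Callable, List

Image = List[List[float]]  # rows of grayscale pixel values
Mask = List[List[bool]]    # True where the user highlighted pixels to change

def inpaint(image: Image, mask: Mask,
            generate: Callable[[Image, Mask], Image]) -> Image:
    """Keep original pixels outside the mask; take generated pixels inside it."""
    generated = generate(image, mask)  # hypothetical model call
    return [
        [g if m else o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(image, generated, mask)
    ]

# Hypothetical "model" that paints every pixel white (1.0).
def paint_white(image: Image, mask: Mask) -> Image:
    return [[1.0 for _ in row] for row in image]

original = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
highlight = [[False, True, False], [False, True, False]]
edited = inpaint(original, highlight, paint_white)
```

Here only the middle column, which the "user" highlighted, is replaced, so `edited` is `[[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]`. A real system would also blend the boundary so the new content matches the lighting and texture around it.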

DALL·E 2 is powered by a 3.5-billion-parameter model trained on a vast number of image-caption pairs scraped from the internet, from which it learns the relationship between visual concepts and descriptive text. A separate 1.5-billion-parameter model increases the resolution of the images it generates. DALL·E 2 creates pictures using a process called diffusion: it starts from a field of random noise and refines it step by step, guided by the text description, until an image matching the prompt emerges.
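To show what "starting from noise and refining it step by step" means, here is a toy Python sketch of a textbook denoising-diffusion (DDPM-style) sampling loop. It is not DALL·E 2's implementation. The linear noise schedule (1e-4 to 0.02) and the step count are assumptions. The "image" is a short list of numbers. The noise-predicting network is replaced by a hypothetical oracle that already knows the target. A real model instead learns to predict the noise, conditioned on the caption.

```python
import math
import random

def make_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (assumed values) plus cumulative products."""
    betas = [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)
    return betas, alphas, alpha_bars

def oracle_noise_predictor(x_t, t, target, alpha_bars):
    """Hypothetical stand-in for the trained network: returns the exact noise in x_t."""
    ab = alpha_bars[t]
    return [(x - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab) for x, x0 in zip(x_t, target)]

def sample(target, steps=200, seed=0):
    rng = random.Random(seed)
    betas, alphas, alpha_bars = make_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # begin with pure random noise
    for t in reversed(range(steps)):
        eps = oracle_noise_predictor(x, t, target, alpha_bars)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Remove the predicted noise to get the mean of the slightly cleaner sample.
        mean = [(xi - coef * e) / math.sqrt(alphas[t]) for xi, e in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # re-inject a little fresh noise except at the end
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x

target_pixels = [0.2, -0.5, 0.9, 0.0]
result = sample(target_pixels)
```

Because the oracle predicts the noise perfectly, the final step lands on `target_pixels`, up to floating-point rounding. With a learned predictor, the quality of those noise estimates at every step determines how well the picture matches the prompt.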

The software could help people touch up their pictures, create art, or churn out endless stock images. "DALL·E 2 is a research project which we currently do not make available in our API," OpenAI said on Wednesday. "As part of our effort to develop and deploy AI responsibly, we are studying DALL·E's limitations and capabilities with a select group of users."

All testers have to do is give the system a text description of the image they want to create. DALL·E 2 is better at rendering realistic scenes, and can add shadows, reflections, and textures to pictures more appropriately than its predecessor. There are examples of its output in a technical paper [PDF] accompanying this week's announcement.

The newer model still struggles with producing fine-grained details in more complex scenes. And there's a downside to its versatile and creative abilities: it provides a simple way to mass produce potentially misleading faked photos.

"Our model is capable of producing fake but realistic images and enables unskilled users to quickly make convincing edits to existing images," an OpenAI team wrote in another paper, updated last month. "Additionally, since the model's samples reflect various biases, including those from the dataset, applying it could unintentionally perpetuate harmful societal biases."

To minimize potential harm, the team tried to scrub the training dataset – "several hundred million images from the internet" – by removing photos of real people, weapons, swastikas, Confederate flags, NSFW content, and so on.
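Scrubbing a dataset like this usually means tagging each image, often with automated classifiers, and dropping any example that carries a blocked tag. The Python sketch below illustrates only that last filtering step. The tag names, the `Sample` type, and the idea that tags are already attached are all hypothetical. OpenAI has not published its filtering code in this form.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set

# Hypothetical tag names standing in for the categories OpenAI says it removed.
BLOCKED_TAGS = {"real_person", "weapon", "hate_symbol", "nsfw"}

@dataclass
class Sample:
    caption: str
    tags: Set[str] = field(default_factory=set)  # assumed output of upstream classifiers

def filter_dataset(samples: Iterable[Sample], blocked: Set[str] = BLOCKED_TAGS) -> List[Sample]:
    """Keep only samples whose tags share nothing with the blocked set."""
    return [s for s in samples if not (s.tags & blocked)]

raw = [
    Sample("a radish in a tutu walking a dog", {"cartoon"}),
    Sample("a soldier holding a rifle", {"weapon", "real_person"}),
    Sample("an anatomical drawing of a heart", set()),
]
clean = filter_dataset(raw)
```

The hard part in practice is the tagging, not the filter. Classifiers miss some images and wrongly flag others, which is one reason biases can survive this kind of cleanup.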

"Our content policy does not allow users to generate violent, adult, or political content, among other categories. We won't generate images if our filters identify text prompts and image uploads that may violate our policies. We also have automated and human monitoring systems to guard against misuse," the startup warned separately. ®