
How to select the optimal container storage

Huawei expert discusses how containers make data storage more intelligent

Advertorial: This article is attributed to Zhao Lizhi, data storage expert for Huawei ICT Products and Solutions.

Enterprises are shortening their application development lifecycles to meet changing user demands, a trend that is in turn driving adoption of microservices application architectures. In the cloud-native era, they usually turn to containers managed by Kubernetes (K8s) for the job.

Created in 2014, Kubernetes is a portable, extensible open source platform built to manage containers at large scale. It also supports application scaling and failover, and allows containers to be used in production, which has helped to promote container development.

A survey published by the Cloud Native Computing Foundation (CNCF) in March 2022 concluded that 96% of enterprises are using or evaluating K8s. Those companies usually start by running stateless applications, such as web services, on containers. But as the technology has developed and IT departments have become more familiar with its benefits, usage has extended to stateful applications including databases and middleware. The CNCF found that nearly 80% of customers plan to run stateful applications on containers.

To store the data created by those stateful applications on persistent disk, the K8s storage interface has been separated from the K8s release cycle. It now operates as an independent storage interface standard, the Container Storage Interface (CSI), for which many vendors have developed and released CSI plug-ins.

The result is that CSI-based storage can be integrated into containers to enable K8s to directly manage storage resources, including basic tasks like create, mount, delete, and expand as well as advanced operations such as snapshot, clone, QoS, and active-active deployments.
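As a rough illustration of the operations listed above, the sketch below builds the Kubernetes manifests that would request three of them through a CSI driver: a PersistentVolumeClaim for create, a larger size request for expand, and a VolumeSnapshot for snapshot. The object names, the `csi-nas` storage class, and the `csi-nas-snap` snapshot class are hypothetical placeholders, and expansion only works when the chosen storage class allows it.

```python
import copy
import json


def make_pvc(name, size, storage_class, access_mode="ReadWriteMany"):
    """Build a PersistentVolumeClaim manifest (the 'create' request)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,  # hypothetical class name
            "resources": {"requests": {"storage": size}},
        },
    }


def expand_pvc(pvc, new_size):
    """Return a copy of the claim that asks for more capacity ('expand')."""
    bigger = copy.deepcopy(pvc)
    bigger["spec"]["resources"]["requests"]["storage"] = new_size
    return bigger


def make_snapshot(name, pvc_name, snapshot_class):
    """Build a VolumeSnapshot manifest that points at an existing claim."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,  # hypothetical class name
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


pvc = make_pvc("mysql-data", "100Gi", "csi-nas")
expanded = expand_pvc(pvc, "200Gi")
snapshot = make_snapshot("mysql-data-snap", "mysql-data", "csi-nas-snap")

# Kubernetes accepts JSON manifests as well as YAML.
for manifest in (pvc, expanded, snapshot):
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`. Mount and delete are not shown: they happen when a pod references the claim and when the claim is removed.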

Container storage classification

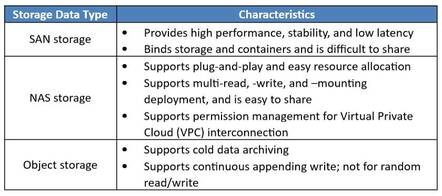

Based on the type of data stored, container storage can be classified into SAN (block), NAS (file), and object storage, each of which is best suited to different environments.

Matching storage to containerized applications

In the early stages of container evolution, enterprises used local disks to store data for database applications like MySQL. At that time, data volumes were small and containerized applications were not mission-critical, so investment was limited, even though these databases needed high performance, high availability, and low latency.

Local disks are no longer preferred, however, largely because they don't support container failover when a node fails, forcing manual interventions that can take several hours. As well as poor availability and high maintenance costs, local disk storage also suffers from resource isolation: the data sits on disks in separate servers, which makes it difficult to share a storage resource pool and leads to inefficient capacity utilization.

SAN storage offers superior performance, but fails to satisfy the high-availability needs of the database. Because a SAN volume stays bound to the containers of a failed node, it cannot fail over automatically, so manual intervention is again required.

Some customers that use MySQL databases have deployed enterprise-level NAS storage to eliminate this problem. Automatic failover is possible in this case because NAS supports multi-mounting: if a node is faulty and its containers fail over, the shared storage can be remounted at the destination. Data stored on NAS is also shared across multiple locations and does not need to be copied in failover scenarios. As a result, recovery times are reduced to minutes, and availability can improve by as much as 10X. Storage utilization is also optimized, and shared NAS capacity offers an overall TCO that is up to 30% lower than equivalent local disk storage solutions.
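The difference between single-mount and multi-mount storage during failover can be sketched with a toy model. This is a deliberate simplification rather than a description of how Kubernetes or any CSI driver behaves: it assumes a failed node never releases its attachment, and the `Volume` class, `try_mount`, `fail_over`, and the volume and node names are hypothetical. Only the access mode names `ReadWriteOnce` and `ReadWriteMany` come from Kubernetes.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    name: str
    access_mode: str  # "ReadWriteOnce" (single node) or "ReadWriteMany" (shared)
    attached_nodes: set = field(default_factory=set)


def try_mount(volume, node):
    """Attach the volume to a node if its access mode permits it."""
    held_elsewhere = volume.attached_nodes - {node}
    if volume.access_mode == "ReadWriteOnce" and held_elsewhere:
        return False  # still bound to another node
    volume.attached_nodes.add(node)
    return True


def fail_over(volume, target_node):
    """Reschedule onto target_node.

    Assumption: the failed node is unresponsive, so its attachment is
    never released and someone would have to clear it by hand.
    """
    return try_mount(volume, target_node)


block_volume = Volume("mysql-block", "ReadWriteOnce")  # SAN-style volume
file_volume = Volume("mysql-file", "ReadWriteMany")    # NAS-style share

for vol in (block_volume, file_volume):
    try_mount(vol, "node-a")

# node-a fails; the database container is rescheduled to node-b.
results = {vol.name: fail_over(vol, "node-b") for vol in (block_volume, file_volume)}
print(results)
```

In this model the shared volume mounts on `node-b` straight away, while the single-mount volume stays blocked until its stale attachment is cleared, which is the manual step the text describes.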

New applications such as AI training can demand random read/writes across billions of unstructured files with sizes ranging from several KBs to several MBs. These workloads run concurrently across dozens or even thousands of GPU servers, and the underlying storage needs low latency to accelerate GPU response times and boost GPU utilization.

SAN storage is insufficient here because it doesn't allow data to be shared among large-scale clusters that comprise thousands of compute servers. Equally, object storage performs poorly with random read/writes and only supports sequential read/writes of cold data, such as archive data that is retained for long periods but seldom accessed.

NAS storage circumvents limitations

NAS storage supports multi-node sharing and is the only applicable storage option in this scenario. Enterprises commonly implement a distributed NAS solution based on Ceph or GlusterFS with local server disks, where data is spread across multiple nodes. But it's important to note that network latency may impact its performance.

Huawei enterprise NAS storage can deliver several times the performance of such a Ceph/GlusterFS configuration. For example, a large commercial bank used Ceph distributed storage with local server disks, but the system supported only 20,000 IOPS in AI applications during a test. After replacing its existing storage system with the Huawei OceanStor Dorado NAS all-flash solution, the bank saw dual-controller performance easily reach 400,000 IOPS, a 20-fold increase in AI analysis efficiency.
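To put the two IOPS figures in perspective, the short calculation below checks the 20-fold ratio and estimates how long one pass over a large set of small files would take at each rate. The file count of one billion and the idea that each file costs exactly one I/O are illustrative assumptions, not figures from the bank's test.

```python
CEPH_IOPS = 20_000      # figure quoted for the Ceph setup
DORADO_IOPS = 400_000   # figure quoted for the replacement system
FILE_COUNT = 1_000_000_000  # assumption: one billion small files


def speedup(before_iops, after_iops):
    """Ratio between two IOPS figures."""
    return after_iops / before_iops


def hours_for_one_pass(file_count, iops):
    """Hours to touch every file once, assuming one I/O per file."""
    return file_count / iops / 3600


print(f"Speed-up: {speedup(CEPH_IOPS, DORADO_IOPS):.0f}x")
print(f"One pass at {CEPH_IOPS:,} IOPS: {hours_for_one_pass(FILE_COUNT, CEPH_IOPS):.1f} h")
print(f"One pass at {DORADO_IOPS:,} IOPS: {hours_for_one_pass(FILE_COUNT, DORADO_IOPS):.1f} h")
```

Under these assumptions a single pass drops from about 13.9 hours to roughly 42 minutes, which is why storage throughput bears directly on GPU utilization.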

The Huawei OceanStor Dorado delivers leading NAS performance and reliability for workloads that process large numbers of small files. It uses a globally shared distributed file system architecture and intelligent balancing technology to support file access on all controllers, eliminate cross-core and cross-controller transmission overheads, and reduce network latency.

The intelligent data layout technology can accelerate file location and transmission. OceanStor Dorado has demonstrated 30% better performance for applications relying on small file input/output (I/O) compared to the industry benchmark, enabling enterprises to deal with large volumes of small files stored in containers with ease.

It offers five levels of reliability, covering disk, architecture, system, solution, and the cloud. Techniques include Active-Active NAS access, which operates two storage controllers simultaneously to deliver fast failover and minimum interruption to NAS services if one of them fails. All of that adds up to 7-nines reliability for always-on services and containerized data that is always available. A more detailed description of the Huawei OceanStor Dorado NAS all-flash storage system is available here.
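For readers unfamiliar with the "nines" shorthand, the sketch below converts a number of nines into the downtime it permits per year, assuming a 365-day year and that the figure is read as availability. The function name is hypothetical, and this is only the conventional arithmetic, not a statement of how the vendor measures reliability.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumes a 365-day year


def downtime_seconds_per_year(nines):
    """Maximum yearly downtime allowed by an availability of N nines.

    Seven nines means 99.99999% availability, so the unavailable
    fraction is 10 ** -7.
    """
    unavailable_fraction = 10 ** -nines
    return SECONDS_PER_YEAR * unavailable_fraction


for nines in (3, 5, 7):
    print(f"{nines} nines: {downtime_seconds_per_year(nines):.2f} s/year")
```

Read this way, seven nines corresponds to a little over three seconds of downtime per year.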

Because containers may run on any server within a cluster and fail over from one server to another, container data needs to be shared among multiple nodes. Container storage solutions therefore have to share data while handling the concurrent random read/writes generated by high volumes of small files, particularly when it comes to supporting new application development. At the end of the day, a Kubernetes container can be summarized as an application-oriented environment that stores its data in files, which is what makes the Huawei OceanStor Dorado NAS all-flash storage system a good choice for the job.

Sponsored by Huawei.