
Google teaches robots to serve humans – with large language models the key

Is this it? The robo-butler dream is coming true?

Google's largest AI language model is helping robots be more flexible in understanding and interpreting human commands, according to the web giant's latest research.

Machines typically respond best to very specific demands – open-ended requests can sometimes throw them off and lead to results that users didn't have in mind. People learn to interact with robots in a rigid way, like asking questions in a particular manner to get the desired response.

Google's latest system, dubbed PaLM-SayCan, however, promises to be smarter. The physical device from Everyday Robots – a startup spun out of Google X – has cameras for eyes in its head and an arm with a pincer tucked behind its long straight body, which sits on top of a set of wheels.


Asking the robot something like "I just worked out, can you get me a healthy snack?" will nudge it into fetching an apple. "PaLM-SayCan [is] an interpretable and general approach to leveraging knowledge from language models that enables a robot to follow high-level textual instructions to perform physically-grounded tasks," research scientists from Google's Brain team explained.

Google introduced PaLM, its largest language model, in April 2022. PaLM was trained on data scraped from the internet, but instead of spewing open-ended text responses, the system was adapted to generate a list of instructions for the robot to follow.

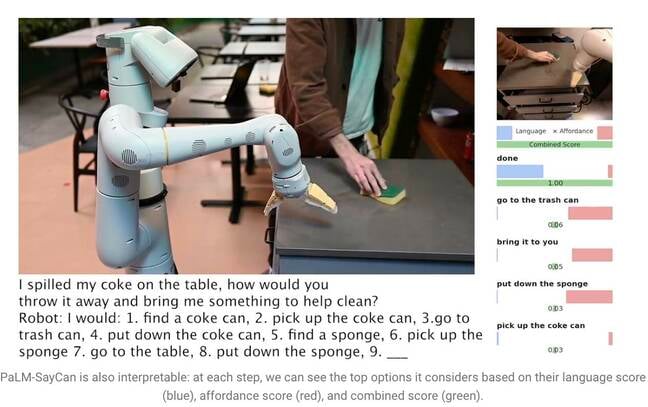

Saying "I spilled my Coke on the table, how would you throw it away and bring me something to help clean?" prompts PaLM to interpret the request and generate a list of steps the robot can follow to complete the task, such as going over to pick up the can, throwing it into a bin, and fetching a sponge.

Large language models (LLMs) like PaLM, however, don't understand the meaning of anything they say. For this reason, the researchers trained a separate model using reinforcement learning to ground abstract language into visual representations and actions. That way the robot learns to associate the word "Coke" with an image of a fizzy drink can.

PaLM-SayCan also learns so-called "affordance functions", which estimate how likely the robot is to complete a specific action successfully given the objects in its environment. The robot is more likely to pick up a sponge than a vacuum cleaner, for example, if it detects a sponge but no vacuum nearby.
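To make the sponge-versus-vacuum example concrete, here is a toy sketch of what an affordance score does: it rates each candidate skill by how likely it is to succeed in the current scene. This is an illustration only, assuming a hand-written lookup; in PaLM-SayCan the affordances are learned with reinforcement learning, and the function name, skill strings and probabilities below are all hypothetical.

```python
# Hypothetical toy affordance function. Real SayCan affordances are learned
# value functions; this lookup table only illustrates the idea of ranking
# skills by how likely they are to succeed given what the robot can see.

def affordance(skill: str, detected_objects: set[str]) -> float:
    """Return a made-up success probability for `skill` in the current scene."""
    # Each hypothetical skill needs one specific object to be in view.
    required = {
        "pick up the sponge": "sponge",
        "pick up the vacuum": "vacuum",
        "pick up the coke can": "coke can",
    }
    obj = required.get(skill)
    if obj is None:
        return 0.0  # unknown skill: treat it as impossible
    # Invented numbers: likely to succeed if the object is visible, unlikely otherwise.
    return 0.9 if obj in detected_objects else 0.05


scene = {"sponge", "coke can"}  # hypothetical camera detections: no vacuum
skills = ["pick up the sponge", "pick up the vacuum", "pick up the coke can"]
# Rank skills from most to least feasible in this scene.
ranked = sorted(skills, key=lambda s: affordance(s, scene), reverse=True)
print(ranked)  # ['pick up the sponge', 'pick up the coke can', 'pick up the vacuum']
```

Because no vacuum is detected, picking one up drops to the bottom of the ranking, whatever a language model might say about vacuums being good for cleaning.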

"Our method, SayCan, extracts and leverages the knowledge within LLMs in physically-grounded tasks," the team explained in a research paper. "The LLM (Say) provides a task-grounding to determine useful actions for a high-level goal and the learned affordance functions (Can) provide a world-grounding to determine what is possible to execute upon the plan. We use reinforcement learning (RL) as a way to learn language conditioned value functions that provide affordances of what is possible in the world."
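The division of labour in that quote, with the LLM saying what would be useful and the affordance saying what can be done, can be sketched in a few lines. The sketch assumes the two scores are combined by multiplication and the highest-scoring skill is executed; the function name, skills and every number are invented for illustration and are not taken from Google's code or results.

```python
# Minimal sketch of SayCan-style skill selection, assuming the combined score
# is the product of a language-model probability ("Say") and an affordance
# value ("Can"). All skills and numbers are hypothetical.

def select_skill(say: dict[str, float], can: dict[str, float]) -> str:
    """Pick the skill with the highest Say x Can score."""
    # Skills with no affordance estimate are treated as infeasible (score 0).
    combined = {skill: say[skill] * can.get(skill, 0.0) for skill in say}
    return max(combined, key=combined.get)


# Hypothetical LLM probabilities for "I spilled my Coke, bring me something to help clean"
say = {
    "find a sponge": 0.40,
    "find a vacuum": 0.45,  # the language model alone slightly prefers the vacuum
    "find an apple": 0.05,
}
# Hypothetical affordances: there is no vacuum nearby, so that skill is unlikely to succeed
can = {
    "find a sponge": 0.80,
    "find a vacuum": 0.10,
    "find an apple": 0.90,
}
print(select_skill(say, can))  # find a sponge
```

Neither score wins on its own: the apple is feasible but irrelevant, and the vacuum is relevant but unavailable. A full planner would repeat this selection for each step of a long-horizon task, feeding the skills chosen so far back into the prompt; that loop is left out here.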


To keep the robot from veering off task, it is trained to choose only from a fixed set of 101 instructions. Google trained it to operate in a kitchen, where PaLM-SayCan can fetch snacks and drinks and perform simple cleaning tasks. The researchers believe LLMs are the first step in getting robots to perform more complex tasks safely given abstract instructions.

"Our experiments on a number of real-world robotic tasks demonstrate the ability to plan and complete long-horizon, abstract, natural language instructions at a high success rate. We believe that PaLM-SayCan's interpretability allows for safe real-world user interaction with robots," they concluded. ®