Nvidia claims 'record performance' for Hopper MLPerf debut

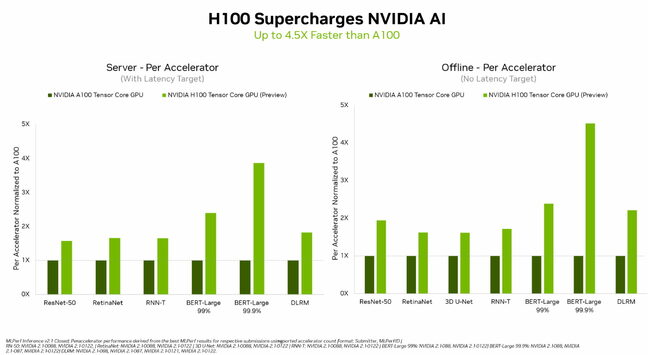

H100 Tensor Core GPU leaves A100 in the dust, but company says previous gen has improved too

Nvidia's Hopper GPU has turned in its first scores in the newly released MLPerf Inference v2.1 benchmark results, with the company claiming new records for its performance.

MLCommons, the consortium that runs MLPerf, measures how well systems handle inferencing, which means applying a trained machine learning model to new data. The results are published on the MLCommons website.

Nvidia tends to dominate the results. This time the company said its Hopper-based H100 Tensor Core GPUs set new records in inferencing workloads, and claimed they deliver up to 4.5x the performance of its previous GPUs.

The tests are effectively the first public demonstration of the H100 GPUs, which are due to become available later this year.

In this case, the previous GPU appears to be Nvidia's A100, which has been widely deployed in AI and HPC systems. Hopper delivered higher per-accelerator performance across all six neural network models, and Nvidia claimed it leads in both throughput and speed in the separate server and offline scenarios.

However, while the A100 may no longer be Nvidia's hottest AI platform, the company said it has continued to gain performance thanks to ongoing improvements in Nvidia's AI software. Nvidia claimed the A100's MLPerf results have improved 6x since the chip was first listed two years ago.

Nvidia also submitted results for its Orin edge computing platform, which integrates Arm cores with an Ampere architecture GPU. The company claimed it came out on top in more tests than any other low-power SoC, and exhibited a 50 percent gain in energy efficiency from its debut results on MLPerf in April.

MLCommons said this round of MLPerf Inference v2.1 set new records for participation, with about 5,300 performance results and 2,400 power measurements submitted, both up from the previous round.

Not surprisingly, Nvidia approves of MLPerf, saying companies take part because it is a valuable tool for vendors and customers evaluating AI platforms. Not everyone agrees it is the best way to measure machine learning performance, however.

Earlier this year, rival benchmark organization SPEC announced it had formed a committee to oversee the development of vendor-agnostic benchmarks for machine learning training and inference tasks. The organization said it intended to come up with benchmarks that will better represent industry practices than existing benchmarks such as MLPerf. ®