This app could block text-to-image AI models from ripping off artists

Free software looming to fend off paintbrush-armed robots

Researchers have developed a technique aimed at protecting artists from AI models replicating their styles after having been trained to generate images from their artwork.

Commercial text-to-image tools such as DALL-E, Stable Diffusion, and Midjourney, which automatically produce images from a text description, have ignited a fierce copyright debate. Some artists were dismayed to find out how shockingly easy it was for anyone to create new digital artworks mimicking their style.

Many have spent years perfecting their craft only to see other people generate images inspired by their work in seconds using these tools. Companies developing text-to-image models often scrape their training data from the internet without explicit permission.

Artists are currently embroiled in a proposed class-action lawsuit against AI startups Stability AI and Midjourney and online art platform DeviantArt, claiming the companies infringed copyright law by ripping off their work.

Creators could protect their intellectual property from image generation tools in the future using new software developed by computer science researchers at the University of Chicago. The program, dubbed Glaze, prevents text-to-image models from learning and mimicking the artwork styles in images.

First, the software inspects a picture and figures out what visual details define its qualities. Traditional oil paintings, for example, will contain fine brushstrokes, whilst cartoon drawings will have more exaggerated shapes and colour palettes. Next, these features are altered by applying an invisible "cloak" over the image.

"We don't need to change all the information in the picture to protect artists, we only need to change the style features," Shawn Shan, a graduate student and co-author of the study, said in a statement. "So we had to devise a way where you basically separate out the stylistic features from the image from the object, and only try to disrupt the style feature using the cloak."

The cloak is, in fact, a style transfer algorithm that applies another image's likeness onto the features extracted by the program. Glaze basically remixes the original appearance of an image with another style so that an AI model trained on the image fails to capture its essence effectively.
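The idea of nudging an image's style features towards another style while barely changing its pixels can be sketched in a few lines. This is a toy illustration only, not the researchers' code: the random linear `extractor` is a stand-in for a real style feature extractor, the `cloak` helper and its `budget`, `steps`, and `lr` parameters are hypothetical, and a simple per-pixel bound stands in for whatever visibility constraint the real tool applies.

```python
import numpy as np

def cloak(image, target_features, extractor, budget=0.05, steps=200, lr=0.01):
    """Hypothetical helper: projected gradient descent on a perturbation.

    Minimises ||extractor @ (image + delta) - target_features||^2 while
    keeping every entry of delta within [-budget, budget].
    """
    delta = np.zeros_like(image)
    for _ in range(steps):
        residual = extractor @ (image + delta) - target_features
        grad = 2.0 * extractor.T @ residual               # gradient of the squared error
        delta -= lr * grad
        delta = np.clip(delta, -budget, budget)           # keep the change small
        delta = np.clip(image + delta, 0.0, 1.0) - image  # keep pixels in [0, 1]
    return image + delta

rng = np.random.default_rng(0)
extractor = rng.normal(size=(8, 64)) / 8.0    # assumption: toy linear "style" features
artwork = rng.uniform(0.2, 0.8, size=64)      # flattened toy image to protect
other_style = rng.uniform(0.2, 0.8, size=64)  # toy image in the decoy style
target = extractor @ other_style

cloaked = cloak(artwork, target, extractor)
before = np.linalg.norm(extractor @ artwork - target)
after = np.linalg.norm(extractor @ cloaked - target)
print(f"feature distance to decoy style: {before:.3f} -> {after:.3f}")
print(f"largest pixel change: {np.abs(cloaked - artwork).max():.3f}")
```

In this sketch the cloaked image stays within the pixel budget of the original, yet its features sit closer to the decoy style, which is the effect the researchers describe at a much larger scale.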

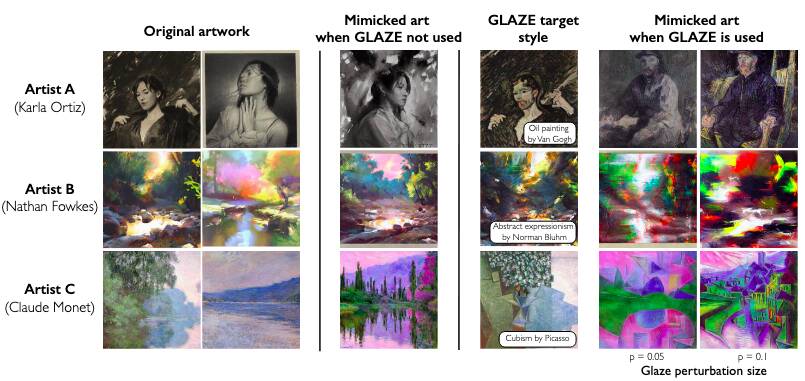

The image shows artwork by three artists, Karla Ortiz, Nathan Fowkes, and Claude Monet, that has been cloaked with different styles from Van Gogh, Norman Bluhm, and Picasso.

The columns on the right show how much an image can change using the Glaze program, with the left column altered less than the right column.

"We're letting the model teach us which portions of an image pertain the most to style, and then we're using that information to come back to attack the model and mislead it into recognizing a different style from what the art actually uses," Ben Zhao, co-author of the research and a computer science professor, said.

The changes made by Glaze don't affect the appearance of the original image much, but are interpreted differently by computers. The researchers are planning to release the software for free so artists can download and cloak their own images before they upload them to the internet, where they could be scraped by developers training text-to-image models.

A mailing list is available through which releases will be announced.

The researchers warned, however, that their software doesn't solve AI copyright concerns. "Unfortunately, Glaze is not a permanent solution against AI mimicry," they said. "AI evolves quickly, and systems like Glaze face an inherent challenge of being future-proof. Techniques we use to cloak artworks today might be overcome by a future countermeasure, possibly rendering previously protected art vulnerable."

"It is important to note that Glaze is not a panacea, but a necessary first step towards artist-centric protection tools to resist AI mimicry. We hope that Glaze and followup projects will provide some protection to artists while longer term (legal, regulatory) efforts take hold," they concluded. ®