Intel successfully ships an updated datacenter roadmap

What we may well see in 2023 and beyond

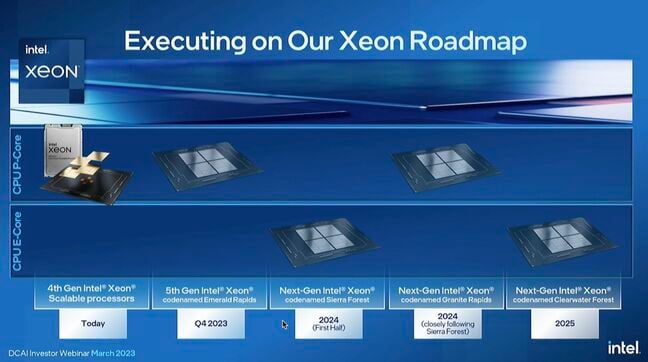

Intel delivered an update to its datacenter and AI roadmap Wednesday in which executives promised that the delays that have plagued its Sapphire Rapids CPU launch were behind the company.

"Our 4th-gen Xeon is high quality and ramping strong, and we're getting back to schedule predictability with 5th-gen Xeon, which we'll ship in Q4 of this year," Sandra Rivera, who leads Intel's Data Center and AI Group, said on the investor call.

However, the word of the day was clearly "healthy." Throughout the call, Lisa Spelman, VP of Intel's Xeon business, repeatedly trotted out demos in which she highlighted how "healthy" each of the company's next-gen chips was.

Intel's eagerness to communicate the health of its silicon roadmap is hardly surprising. The company's 4th-gen Sapphire Rapids Xeons were a point of embarrassment for the company. Originally slated for release in 2021, the parts suffered repeated delays and were finally released this January.

The more pressing issue for Intel, however, is that it's just weeks away from delivering Q1 earnings. And by its own admission, they're most likely going to be bad. During Intel's Q4 earnings call in January, execs warned investors that the company could see revenues fall by as much as 42 percent year over year during the quarter.

Intel pits e-cores against Arm, AMD

On yesterday's call, Intel shared new details about its efficiency-core-toting Sierra Forest Xeons. The chip will use the company's Intel 3 manufacturing process (second-gen 7nm) and pack up to 144 e-cores into a single CPU package.

Intel's e-cores have been kicking around for a few years now, initially debuting alongside its Alder Lake desktop chips in 2021. However, unlike those chips, which paired a bunch of fast performance cores with smaller efficiency cores, Sierra Forest is all about the e-cores.

According to Rivera, the core-optimized Xeons are aimed at a variety of high-density compute applications favored by hyperscalers and are designed to compete against Arm-based chips developed by Amazon and Ampere.

While the Arm chips have seen widespread success in the cloud in recent years, the argument in Intel's favor is, because Sierra Forest is x86, the chip works just like any other Intel CPU — no code to refactor and, for the most part, no application compatibility issues to worry about.

The rub for Intel is that it's already behind in bringing such a chip to market. Slated for release in the first half of 2024, if Intel can actually stay on schedule, Sierra Forest will launch roughly a year behind AMD's 128-core Bergamo parts, which are due out in the first half of this year and target the same segment.

Intel plans to follow Sierra Forest up in 2025 with a new core-optimized Xeon called Clearwater Forest, which will be the first chip to use its 18A (equivalent to 1.8nm) process tech.

Emerald Rapids still on track for 2023

Intel fans won't have to wait until 2024 for higher core count Xeons, however. The company says it's on track to deliver a refresh of its Sapphire Rapids parts in Q4 2023.

Emerald Rapids is a refinement of Intel's current Xeon Scalable platform and will be drop-in compatible for customers willing to go through the process of swapping their CPUs.

While Rivera didn't offer much in terms of details about the chip, she did say it would offer higher core counts and better performance per watt than its predecessor.

"Silicon is coming out of our factories at very high quality; volume validation is well underway; and we're sampling the products to customers today," Rivera said of the platform.

Intel promises special 8800MTps memory for Granite Rapids

But just as with Sapphire Rapids, Intel's roadmap puts the follow-up to Emerald Rapids right around the corner. Intel says it's on track to debut its next performance-optimized Xeon, codenamed Granite Rapids, shortly after the launch of Sierra Forest. So clearly Intel is giving itself a little wiggle room with its latest roadmap.

"Granite Rapids delivers several improvements compared to the previous generations, including increased core counts, improved performance per watt, and faster memory and IO innovations," Rivera said.

It's the latter point that Intel was keen to highlight during a brief demo, in which Spelman showed off an early Granite Rapids system running a special memory DIMM capable of 8800MTps. That's nearly twice the speed of modern DDR5 memory available on server platforms today.

"This boost in bandwidth is critical for feeding the fast growing core counts of modern CPUs and ensuring that your cores can be efficiently utilized," Spelman said.

This, of course, isn't a new problem, and it is something AMD, which still holds a core-count lead over its rival, knows well. To feed all 96 cores on its 4th-gen Epyc processors announced last November, AMD increased the number of memory channels from eight to 12, boosting the effective memory bandwidth by roughly 50 percent.
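The bandwidth figures here come down to simple arithmetic. As a rough sketch, assuming each DDR5 channel moves 8 bytes per transfer and that today's server DIMMs run at 4800MTps (neither figure is stated in this article, and the function name is purely illustrative), theoretical peak bandwidth is channels multiplied by transfer rate multiplied by bytes per transfer:

```python
# Back-of-the-envelope peak memory bandwidth, not a measured result.
BYTES_PER_TRANSFER = 8   # assumption: 64-bit data path per memory channel
BASELINE_MTPS = 4800     # assumption: DDR5-4800 as today's server baseline


def peak_bandwidth_gbs(channels: int, mtps: int) -> float:
    """Theoretical peak bandwidth in GB/s for a given channel count and rate."""
    return channels * mtps * BYTES_PER_TRANSFER / 1000


# Going from eight to 12 channels at the same DIMM speed.
eight_channel = peak_bandwidth_gbs(8, BASELINE_MTPS)
twelve_channel = peak_bandwidth_gbs(12, BASELINE_MTPS)
channel_gain = twelve_channel / eight_channel - 1  # 0.5, i.e. 50 percent more

# The 8800MTps DIMM from Intel's demo versus the assumed 4800MTps baseline.
dimm_speedup = 8800 / BASELINE_MTPS  # about 1.83, i.e. "nearly twice"

print(f"8 channels:  {eight_channel:.1f} GB/s")
print(f"12 channels: {twelve_channel:.1f} GB/s")
print(f"Gain from added channels: {channel_gain:.0%}")
print(f"Speedup from faster DIMMs: {dimm_speedup:.2f}x")
```

These are theoretical ceilings. Real-world throughput will be lower and depends on the memory controller, DIMM population, and workload.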

Habana for AI, GPU Max for HPC, and Xeon for everything in between

In addition to Intel's Xeon roadmap, Rivera also offered some clarity on the company's AI strategy following the departure of Accelerated Computing Group lead Raja Koduri and the cancellation of the Rialto Bridge GPU family earlier this year.

According to Rivera, Intel is positioning its Habana Gaudi2 accelerators for AI deployments, particularly those involving large language models like ChatGPT.

Meanwhile, Intel's GPU Max family (formerly Ponte Vecchio) is aimed more at high-performance compute applications. The chip is at the heart of the long-delayed Aurora supercomputer under development at Argonne National Laboratory. It will be superseded by a combined CPU-GPU part codenamed Falcon Shores XPU. However, that successor won't launch until sometime in 2025, long after AMD's MI300 APUs reach the market.

Finally, for smaller AI inferencing workloads — which Intel defines as 10 billion parameters or smaller — the company says the AMX accelerators baked into its Sapphire Rapids Xeons are more than up to the challenge.

However, Intel emphasizes that these designations aren't fixed. Because the chips share a common software interface, workloads can be deployed on any one of these architectures. ®

For more insight and analysis... Check out our friends at The Next Platform, who dived further into Intel's roadmap.