Google's here to boost your cloud security and the magic ingredient? AI, of course

Send in the LLMs

RSA Conference Google Cloud used the RSA 2023 conference to talk about how it's injected artificial intelligence into various corners of its security-related services.

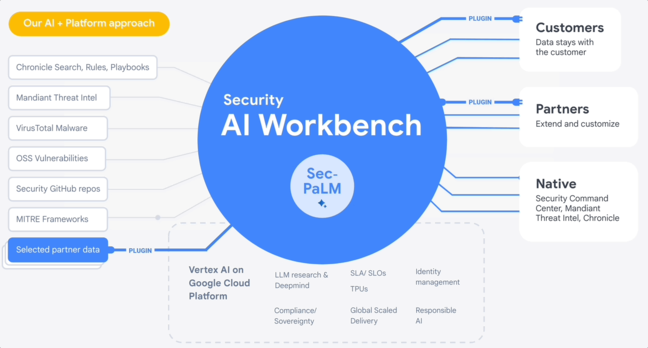

The web giant's announcement of the resulting new features – marketed under the Google Cloud Security AI Workbench umbrella brand – is pretty long-winded, so we thought we'd ask its Bard chat bot to summarize it all. Here's what the thing told us:

Google Cloud Security AI Workbench is a new platform that uses generative AI to help organizations secure their cloud environments.

Sec-PaLM, a specialized LLM, powers the Security AI Workbench.

The Security AI Workbench can be used to:

- Analyze security findings and identify possible attack paths.

- Generate human-readable explanations of how an adversary may try to attack.

- Describe cloud-specific steps to remediate security issues.

- Simulate attack paths to help organizations understand where their cloud environment is most at risk.

The Security AI Workbench is available now in preview.

Um, okay, that kinda makes sense, but we're still not sure what exactly is new here. Maybe the diagram Google handed out about the workbench will help?

Fine, we'll read and summarize the announcement ourselves. Here's what's new and worth highlighting from Google Cloud:

VirusTotal and Sec-PaLM

Google said it has created a security-specialized large language model called Sec-PaLM that it's put to use in VirusTotal, which Google also owns. When you upload malware to VirusTotal to analyze, it will use Sec-PaLM to generate a written report (like this one) describing what the file's code will do if executed and what the intent appears to be.

So far this Code Insight feature works on qualifying PowerShell scripts, and this is expected to be expanded to other file formats.

Mandiant Breach Analytics for Chronicle

Next, Google said its Mandiant Breach Analytics for Chronicle will alert you when it detects an intrusion, and will use Sec-PaLM to describe these security breaches.

Diving deeper into the announcement reveals the LLM can be used to search and analyze security event logs, set up and customize the detection of malicious or suspicious activity on a network, and produce summaries and insights.

It essentially brings Google-owned Mandiant's threat intelligence tech into Chronicle, Google's cloud security suite.

Assured Open Source Software

Google also promised to somehow use LLMs to add more packages to its Assured Open Source Software project, which Google uses itself to avoid supply-chain attacks and suggests you use too.

Dependencies in AOSS are expected to be free from tampering, obtained from vetted sources, fuzzed and analyzed for vulnerabilities, and include useful metadata about their contents. The idea being that it's a place to get software from without worrying if someone's secretly slipped bad stuff into a library.

Mandiant Threat Intelligence AI

It's Sec-PaLM again, this time in Mandiant Threat Intelligence AI, which can be used to "quickly find, summarize, and act on threats relevant to your organization," we're told.

Security Command Center AI

Finally, Security Command Center AI promises to make it easier for users to understand how their organizations can be attacked, by summarizing and explaining the situation.

Crucially, it doesn't appear to use hypothetical examples; instead, it takes a look at your assets and resources, and tells you how someone could take a crack at your IT environment specifically. It also recommends mitigations, Google said.

This is sorta more like the AI future we imagined, not chat bots fabricating people's biographies.

Context

Interestingly enough, Google says customers can build plugins to reach into the platform and extend its functionality in customized ways. There's also the usual promise that any customer-supplied or customer-owned data won't end up in the hands of others.

"Google Cloud Security AI Workbench powers new offerings that can now uniquely address three top security challenges: threat overload, toilsome tools, and the talent gap," gushed Sunil Potti, veep of Google Cloud Security, in a statement on Monday.

"It will also feature partner plug-in integrations to bring threat intelligence, workflow, and other critical security functionality to customers."

What Google's announced today is being seen as a response to the OpenAI-powered Security Copilot Microsoft launched last month. What's funny is that years ago the Google Brain team invented the transformer approach now used by all of these modern LLMs, and so the Big G today finds itself in the weird situation of seemingly playing catch-up on technology it was or is at the forefront of.

"We need to first acknowledge that AI will soon usher in a new era for security expertise that will profoundly impact how practitioners 'do' security," Potti added. "Most people who are responsible for security — developers, system administrators, SRE, even junior analysts — are not security specialists by training."

Accenture is the first guinea pig for the Google Cloud Security AI Workbench, we're told. For the rest of us, Code Insight is available now in preview form, and the rest will roll out gradually to testers and in preview this year, if all goes to plan. ®