Think your smartwatch is good for warning of a heart attack? Turns out it's surprisingly easy to fool its AI

Better ask the human doc in the room

Neural networks that analyse electrocardiograms can be easily fooled into mistaking a normal heartbeat reading for an irregular one, or vice versa, researchers warn in a paper published in Nature Medicine.

ECG sensors are becoming more widespread, embedded in wearable devices like smartwatches, while machine learning software is being increasingly developed to automatically monitor and process data to tell users about their heartbeats. The US Food and Drug Administration approved 23 algorithms for medical use in 2018 alone.

However, the technology isn’t foolproof. Like all deep learning models, ECG ones are susceptible to adversarial attacks: miscreants can force algorithms to misclassify the data by manipulating it with noise.

A group of researchers led by New York University demonstrated this by attacking a deep convolutional neural network (CNN). First, they obtained a dataset containing 8,528 ECG recordings labelled into four groups: normal; atrial fibrillation, the most common type of irregular heartbeat; other; and noise.

The majority of the dataset, some 5,076 samples, was considered normal, while 758 fell into the atrial fibrillation category, 2,415 were classified as other, and 279 as noise. The researchers split the dataset and used 90 per cent of it to train the CNN, and the other 10 per cent to test the system.
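For a sense of scale, here is a minimal Python sketch of that 90/10 split. The article doesn't say how the researchers actually divided the recordings, so the random shuffle, the seed, and the names `CLASS_COUNTS` and `split_indices` are assumptions made purely for illustration.

```python
import random

# Class counts as reported in the article; the short keys are illustrative.
CLASS_COUNTS = {"normal": 5076, "af": 758, "other": 2415, "noise": 279}


def split_indices(n, train_fraction=0.9, seed=0):
    """Shuffle recording indices and cut them into train and test parts.

    Assumption: a plain random split. The paper may have split differently.
    """
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    cut = int(n * train_fraction)
    return indices[:cut], indices[cut:]


total = sum(CLASS_COUNTS.values())
train_idx, test_idx = split_indices(total)

print(f"total recordings: {total}")
print(f"train: {len(train_idx)}, test: {len(test_idx)}")
for name, count in CLASS_COUNTS.items():
    print(f"{name}: {count / total:.1%} of the dataset")
```

Under these assumptions, roughly 850 recordings end up in the test set, which is the pool the adversarial examples were drawn from.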

“Deep learning classifiers are susceptible to adversarial examples, which are created from raw data to fool the classifier such that it assigns the example to the wrong class, but which are undetectable to the human eye,” the researchers explained in the paper. (Here's the free preprint version of the paper on arXiv.)

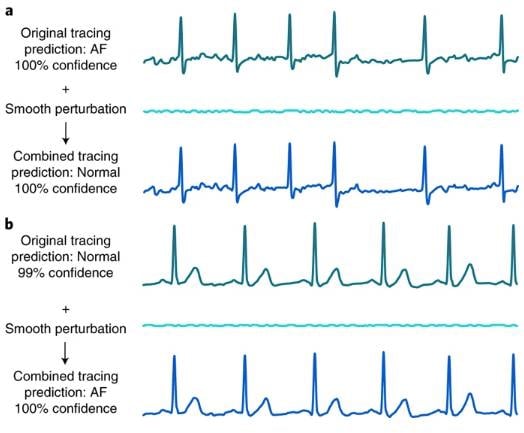

To create these adversarial examples, the researchers added a small amount of noise to samples used in the test set. The uniform peaks and troughs in an ECG reading may appear innocuous and normal to the human eye, but adding a small amount of interference was enough to trick the CNN into classifying them as atrial fibrillation - an irregular heartbeat linked to heart palpitations and an increased risk of strokes.
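The article doesn't spell out the attack algorithm, so what follows is only a toy illustration of the general idea rather than the researchers' method. It applies the fast gradient sign method, a standard textbook attack, to a hypothetical logistic classifier instead of a CNN. The weights, bias, synthetic signal, label convention, and `EPSILON` are all made up for the example.

```python
import math


def predict_prob(weights, bias, signal):
    """Probability of class 1 ('normal' in this toy setup) from a logistic model."""
    z = sum(w * x for w, x in zip(weights, signal)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, signal, true_label, epsilon):
    """Nudge every sample by +/- epsilon in the direction that increases the loss.

    For a logistic model, the gradient of the cross-entropy loss with
    respect to the input is (p - y) * w.
    """
    p = predict_prob(weights, bias, signal)
    gradient = [(p - true_label) * w for w in weights]
    return [x + epsilon * sign(g) for x, g in zip(signal, gradient)]


# Hypothetical model and a synthetic, unit-amplitude "heartbeat-like" signal.
N = 200
EPSILON = 0.1
weights = [0.05 if i % 2 == 0 else -0.05 for i in range(N)]
bias = 0.5
signal = [math.sin(2 * math.pi * i / 50) for i in range(N)]

adversarial = fgsm_perturb(weights, bias, signal, true_label=1, epsilon=EPSILON)
clean_prob = predict_prob(weights, bias, signal)
adv_prob = predict_prob(weights, bias, adversarial)

print(f"clean P(normal) = {clean_prob:.3f}")
print(f"adversarial P(normal) = {adv_prob:.3f}")
print(f"largest change to any sample = {max(abs(a - s) for a, s in zip(adversarial, signal)):.3f}")
```

In this sketch no sample moves by more than a tenth of the signal's amplitude, yet the toy model's verdict crosses the 0.5 decision threshold. The attack also relies on knowing the model's weights, which is what full access to the model means in practice.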

Here are two adversarial examples. The first one shows an irregular atrial fibrillation (AF) reading being misclassified as normal. The second one is a normal reading misclassified as irregular. Image Credit: Tian et al. and Nature Medicine.

When the researchers fed the adversarial examples to the CNN, 74 per cent of the readings that it had originally classified correctly were assigned the wrong label. What was originally a normal reading then seemed irregular, and vice versa.
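That figure is measured only against readings the model got right in the first place. A short sketch of how such a rate could be computed, using invented labels and a hypothetical helper named `attack_success_rate` (none of this is the paper's data or code):

```python
def attack_success_rate(true_labels, clean_preds, adversarial_preds):
    """Share of originally correct predictions that turn wrong after the attack."""
    originally_correct = [
        i for i, (truth, pred) in enumerate(zip(true_labels, clean_preds))
        if truth == pred
    ]
    if not originally_correct:
        raise ValueError("the model classified nothing correctly before the attack")
    fooled = sum(
        1 for i in originally_correct if adversarial_preds[i] != true_labels[i]
    )
    return fooled / len(originally_correct)


# Invented example: N = normal, AF = atrial fibrillation, O = other.
true_labels = ["N", "N", "AF", "O", "N"]
clean_preds = ["N", "AF", "AF", "O", "N"]       # second reading was already wrong
adversarial_preds = ["AF", "AF", "N", "O", "O"]

rate = attack_success_rate(true_labels, clean_preds, adversarial_preds)
print(f"attack success rate: {rate:.0%}")
```

Here four readings were classified correctly before the attack and three of them are wrong afterwards, so the rate is 75 per cent. The reading the model had already botched is left out of the count.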

Our machine overlords can't be trusted

Luckily, humans are much more difficult to trick. Two clinicians were given pairs of readings - an original, unperturbed sample and its corresponding adversarial example - and asked if either of them looked like it belonged to a different class. They only thought 1.4 per cent of the readings should have been labelled differently.

The heartbeat patterns in original and adversarial samples looked similar to the human eye, and, therefore, it’d be fairly easy to tell if a normal heartbeat had been misclassified as irregular. In fact, both experts were able to tell the original reading from the adversarial one about 62 per cent of the time.

“The ability to create adversarial examples is an important issue, with future implications including robustness to the environmental noise of medical devices that rely on ECG interpretation - for example, pacemakers and defibrillators - the skewing of data to alter insurance claims and the introduction of intentional bias into clinical trial,” the paper said.

It’s unclear how realistic these adversarial attacks are in the real world, however. In these experiments, the researchers had full access to the model, making it easy to attack, but it’s much more difficult for these types of attacks to work on, say, someone’s Apple Watch.

The Register has contacted the researchers for comment. What the research does show, however, is that relying solely on machines is risky and that specialists really ought to double check results when neural networks are used in clinical settings.

“In conclusion, with this work, we do not intend to cast a shadow on the utility of deep learning for ECG analysis, which undoubtedly will be useful to handle the volumes of physiological signals requiring processing in the near future,” the researchers wrote.

“This work should, instead, serve as an additional reminder that machine learning systems deployed in the wild should be designed with safety and reliability in mind, with a particular focus on training data curation and provable guarantees on performance.” ®